We can’t trust the fox to guard the henhouse, especially when it comes to AI

The backstory behind Taming Silicon Valley

I have written (or co-written, in one case) six books. Taming Silicon Valley, which I finished yesterday, was the fastest, written with urgency, in rage and in fear.

The premise of the book is that Silicon Valley is increasingly controlling our lives, and indeed the entire world, and that Washington (seat of the US government) is too much in its thrall to stand up to it. And that we need to act, now.

I don’t want to see what Ian Bremmer calls a “technopolar” world, dominated by a few big tech companies, because I don’t have enough faith in Silicon Valley to do well by us, especially after how they have handled social media. Washington is supposed to be a counterweight, to keep the captains of industry honest. So far, it hasn’t done enough.

A lot of my books are about science and technology; this one is mostly about technology policy, and how Silicon Valley is running the table. One chapter is called “The moral descent of Silicon Valley”; another is about how Silicon Valley manipulates public opinion. Still another is about how they are playing Washington. A whole section is about what we should actually be doing.

Novels often have “inciting incidents” that send their characters on a quest. This book had an inciting incident of its own: my growing sense that Washington was not going to get us where we need to be.

From my time testifying before the Senate, I know Washington wants to do the right thing here. But I no longer feel confident that it will.

Disillusionment began when the White House held its first big meeting/photo opportunity around AI, in May 2023. VP Harris and (briefly) President Biden grinned alongside a bunch of tech CEOs; skeptical scientists were nowhere to be seen.

What the tech industry wants, in their ideal world, is “self-regulation”, in which they are pretty much free to do what they want. That’s basically what’s happened with social media, and it’s been a disaster.

I really really don’t want to see that happen with AI. But if the White House treats tech CEOs like rock stars, those rock stars are going to call the shots.

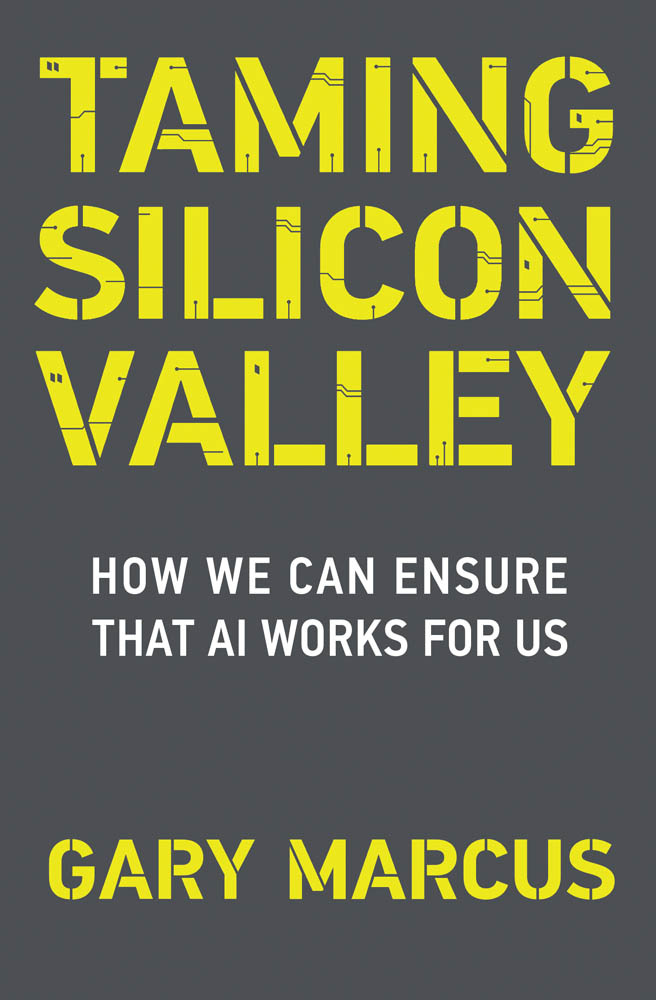

The queasiness I felt after the White House photo op increased in September, with an event organized by Senator Schumer, arguably the single most important player at the moment, since as Majority Leader he decides what legislation around AI might be voted on. In something of a replay of that May White House meeting, Schumer held a widely publicized but closed-to-the-public meeting on AI, again treating the tech CEOs as rock stars, and again largely leaving out independent scientists.

Marietje Schaake, a former member of the European Parliament, wrote some harsh words at the time:

Deb Raji, a spectacular Berkeley PhD student who works on AI policy, was the only one there I would recognize as an independent scientist. Literally nobody who had spent decades researching the technical side of AI was invited. That didn’t sit well with me.

Particularly since Silicon Valley is prone to hype, Washington needs independent scientists in the room.

§

Eventually a series of incidents like that (combined with inaction from Schumer, who controls what AI legislation, if any, is actually voted on) led me to write the book. I grew more and more frustrated that, when it came to AI, we just weren’t going to get the legislation we needed.

The book is about what legislation we need, but more than that, it’s a call to arms, an exhortation to citizens to get involved, and to demand what we need. (It’s also a detailed analysis of what we need, and how we got to where we are.)

Although the White House did everything in its power with its AI Executive Order, it doesn’t have the power to write laws; that’s not how our Constitution works. Only the legislative branch can write laws. Schumer has held something like nine closed-door meetings on AI, but (to my knowledge) has yet to bring a single AI-related piece of legislation to the Senate floor. If he doesn’t, we are sitting ducks.

To take but one example, I have been warning for years, in all capital letters, that things were about to get bad with misinformation and elections (e.g., in my December 2022 essay here about LLMs being AI’s Jurassic Park moment). But nobody did anything about it. Over a year ago in The Atlantic, I urged that we come up with laws and new technological responses to blunt the impact of AI-generated misinformation. So far almost nothing has been done.

This morning The Washington Post reported, exactly as I warned, that “AI deepfakes threaten to upend global elections. No one can stop them. As more than half the global population heads to the polls in 2024, AI-powered audio, images and videos are sowing confusion and clouding the political debate”. We waited too long.

§

One of the major themes of Taming Silicon Valley is the need for independent oversight; as the old proverb so rightly puts it, the fox can’t guard the henhouse. I talk, for example, about how Washington often gets co-opted by lobbyists, and stress the need for independent scientists to be at the table, both as we develop policy and as we make sure that our new technologies are safe.

So it was mega-weird yesterday to finish reviewing the copy-edited manuscript of my book (pretty much the last chance to make significant changes) and see, at exactly that moment, yet another vivid example of Washington sucking up too much to Silicon Valley: a new blue-ribbon commission, largely overlapping with the one that Schaake rightly criticized and made up in large part of the same tech CEOs. One legit scientist that I respect was added: Fei-Fei Li. But basically it’s regulatory capture all over again. Technologist/activist Ed Newton-Rex said it nicely in an X post that mirrors Schaake’s from August:

When I groused about this yesterday to a friend who is well-connected in Washington, the friend reminded me that part of the problem is the revolving door: “Everyone who is in [Washington] and wants to leave [after the election] wants to go to an AI company.”

It’s almost impossible to envision the independence we might need when nobody in Washington knows where they will be come January, and pots of gold await in the Bay Area, especially when essentially everyone in Washington is underpaid.

§

It’s hard to write about technology these days, with things moving so swiftly. Sometimes I worried, from a personal perspective, that the book would seem obsolete by the time it came out in September.

In the best case, the book would be obsolete for the best of reasons: somehow Washington would get it together, and protect us from disinformation, accidental misinformation, cybercrime, deepfake porn, and all the many other AI-related risks I discuss, finally standing up to Silicon Valley, finally incorporating a cadre of independent scientists as a counterweight to big tech, entirely obviating the need for my book.

We should all be so lucky. Washington remains in Silicon Valley’s thrall, our elections are under siege, deepfake scams are everywhere, and not one law has passed.

Every one of us should be worried.

Gary Marcus desperately wants to see a thriving, AI-positive world, but doesn’t know how to get there unless Washington stands up to Silicon Valley. All profits from Taming Silicon Valley will be donated to charity.

I am an artist and a mother, and I am feeling extremely worried about the current state of the world. As much as I hate to admit it, I feel powerless when it comes to making a difference. I know there are many others like me who care deeply about the issues at hand, but we don't know where to begin when it comes to advocating for change.

The reality of everything you've been writing hit me the other day when I was looking into buying a common household item (bug spray, since it's starting to get hotter where I am). As I always do before buying anything, I tried to do a little research first, searching for reviews or for what people say works best.

I was halfway through reading a list of reviewed items that popped up in the search results when I realised that what I was reading was unmistakably written by ChatGPT: its characteristic writing style repeated through each "review", none of them written by a human, with sentences like: "When considering to purchase a X, there are a few things to consider. First..."

Amazed, I went back to look at the other results, only to realise that almost all of them had to be brand-new AI spam sites, pushed up the rankings by SEO tactics or perhaps by copying other websites' metadata to displace them, something I recalled reading that a CEO had done (probably in this publication). Not long ago, every result was written by a human; now, I could scarcely find a single search result that was.

I had slightly more luck finding human reviews when looking for a vacuum cleaner. On the other hand, when I was looking for some new cat toys, I found AI cats (of course never labelled) mixed into video results and marketing images (formerly photos).

What is the world coming to when there is so much junk AI "information" to wade through just to make everyday purchases? And if businesses can so quickly pollute the Internet with junk about things that don't matter that much, what are better-motivated actors, such as states, intelligence agencies, their stakeholders, and other political players, doing that I haven't noticed? If business is doing it, they must be.

The consequences of LLMs are not a future issue but a present one, and the way they're being used is mortifying, drowning the Internet in a sea of crap.