What scaling does and doesn’t buy you: peeling back the hype surrounding Google’s trendy Nano Banana

Some things never change

Google’s brand-new “nano banana” image editor, all the talk of X, truly is amazing. Thanks to scaling (and some other tricks), the graphics are terrific, and the ability to have it edit photos that you upload is genuinely cool. Hell, you can even use Nano to make fun of me:

But the thing is, Garius Marcus Criticus (AKA the Imperator) was right: scaling can only take you so far.

For example, with respect to image generation systems, what the aforementioned Marcus said (as far back as 2022, often together with Ernest Davis) was that parts and wholes would pose problems in systems that rely on statistics without deep world knowledge. (The challenges that compositionality poses were also a major theme of Marcus and Davis’s 2019 book Rebooting AI.)

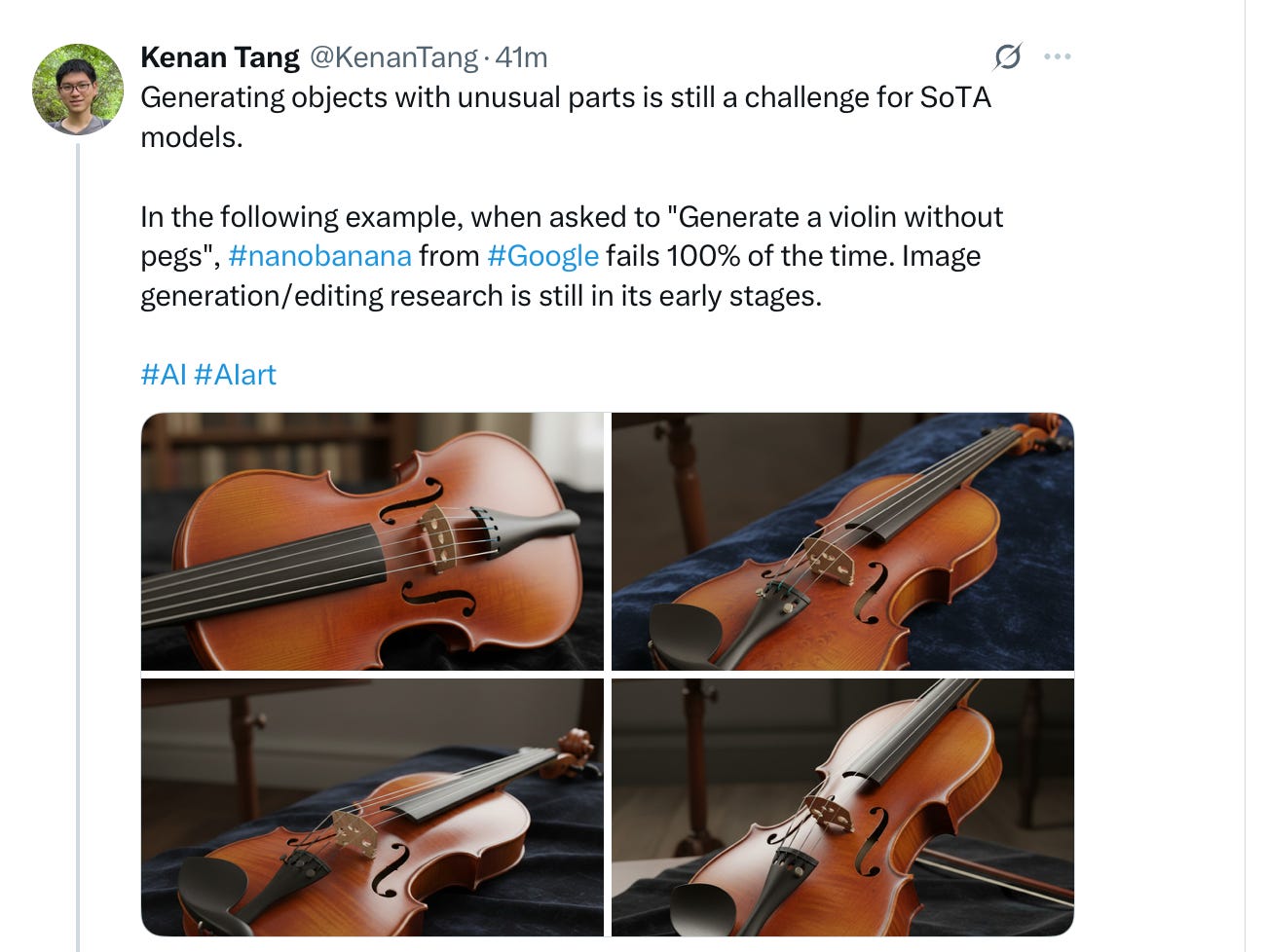

And guess what? Surprise, surprise: even the latest models still struggle with compositionality, as Kenan Tang, a grad student at UC Santa Barbara, quickly noted in one of the first skeptical looks at nano:

Tang, who has a more scientific spirit than Unutmaz, was also quick to note that these problems are not new. They are persistent:

Over the last few years, graphics have gotten better and better. World models and comprehension less so.

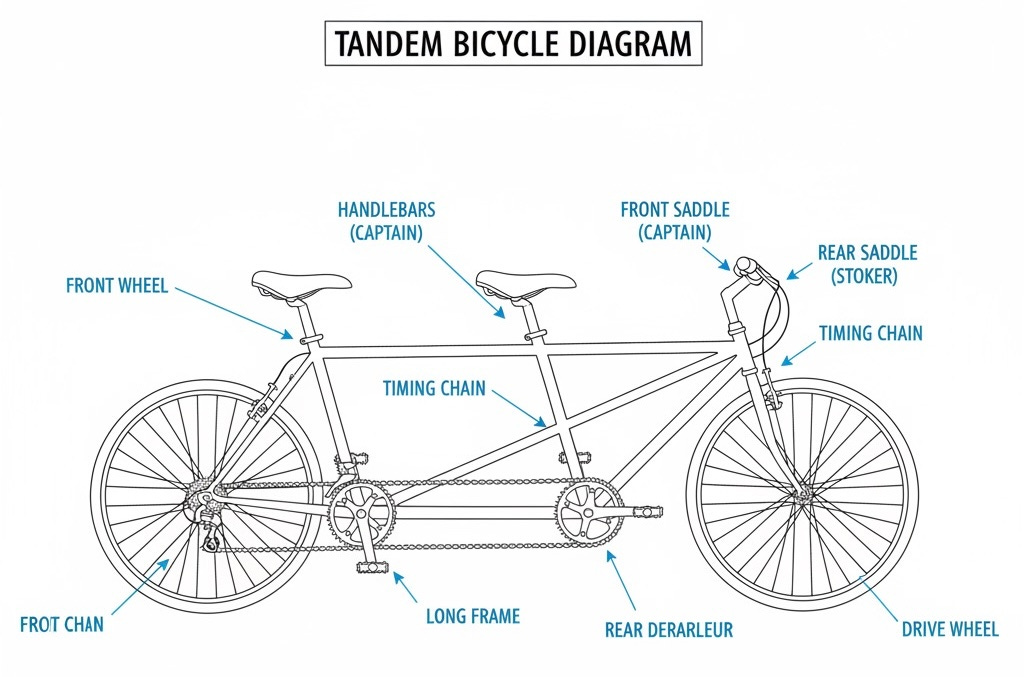

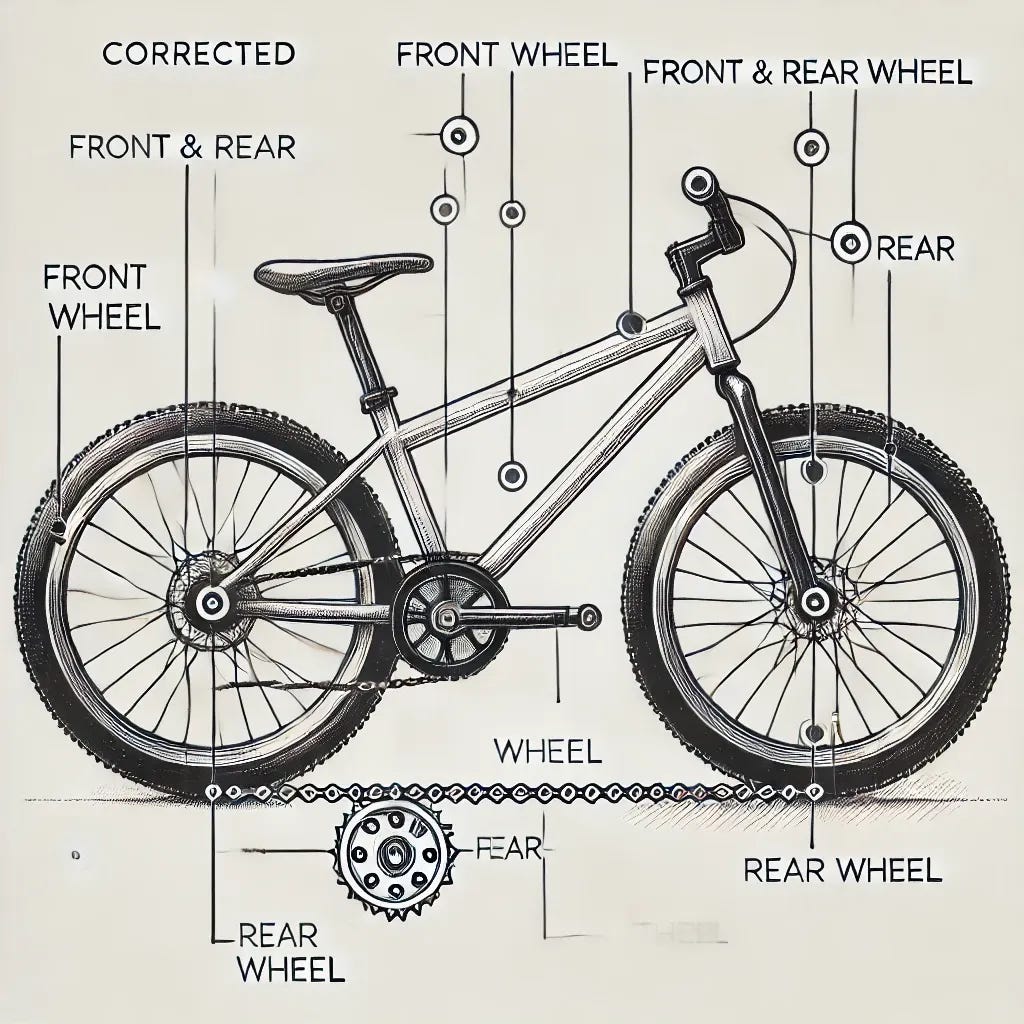

Since I have made this point so many times, in so many ways, in so many previous essays, I won’t belabor it here. Suffice it to say that I was able to break nano banana on literally my first try:

You don’t have to have a PhD in mechanical engineering to spot some of the errors. (It’s also not so hot on recumbent bikes.)

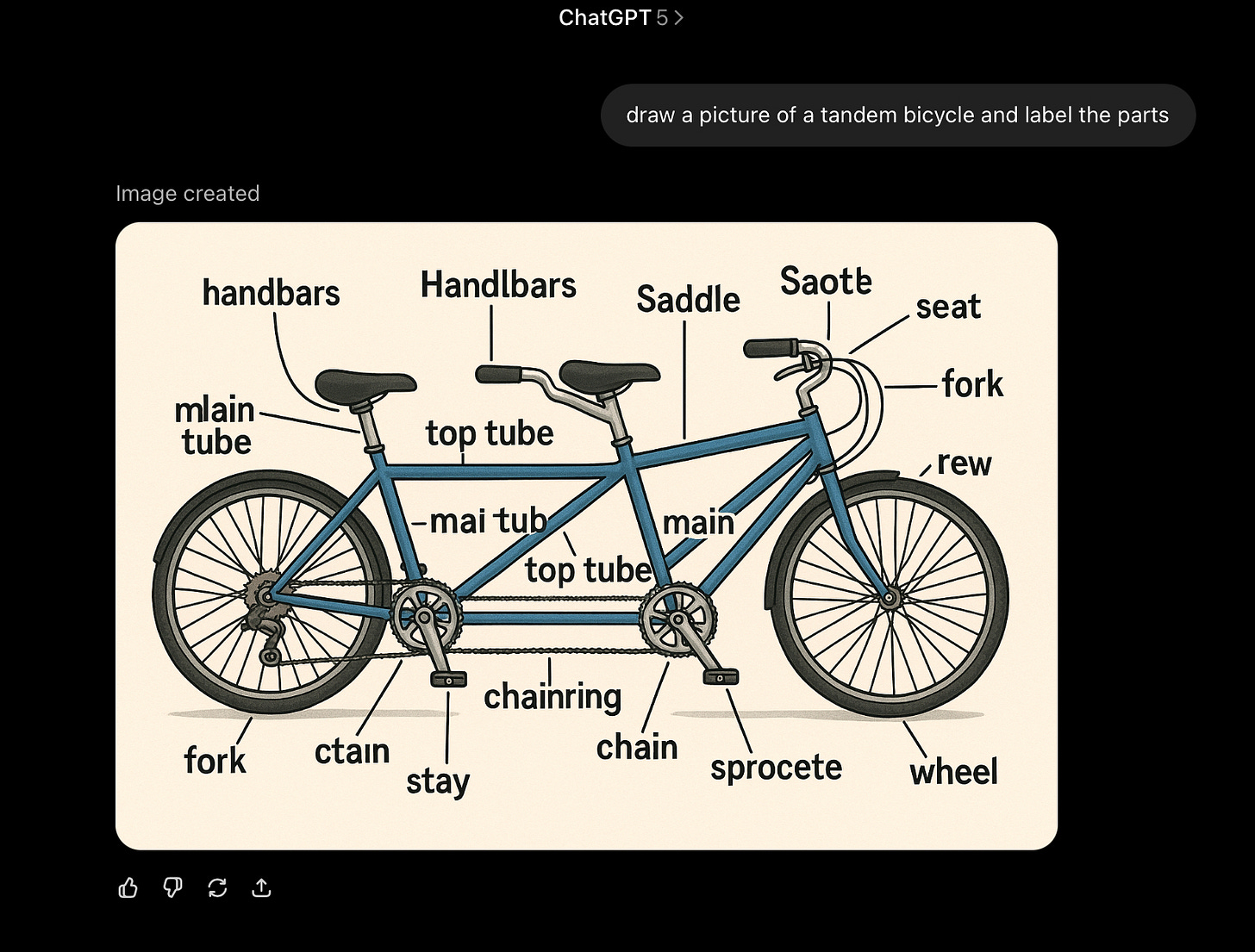

For all the hype, Nano Banana is not all that much better at this sort of thing than ChatGPT-5:

Nor much better than what Davis and I found last December:

They all stink.

§

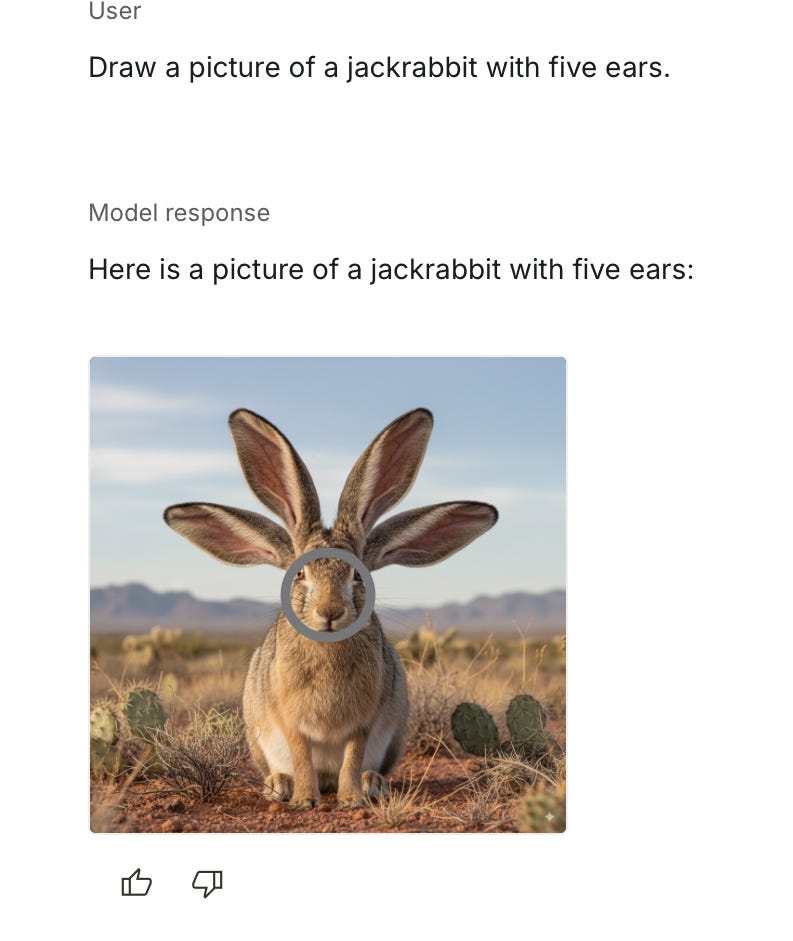

To be sporting, I gave Nano a second try on a different challenge, riffing on another example Davis and I had tried last year (“Draw a picture of a rabbit with four ears”), but slightly revising the details to avoid any problem-specific training.

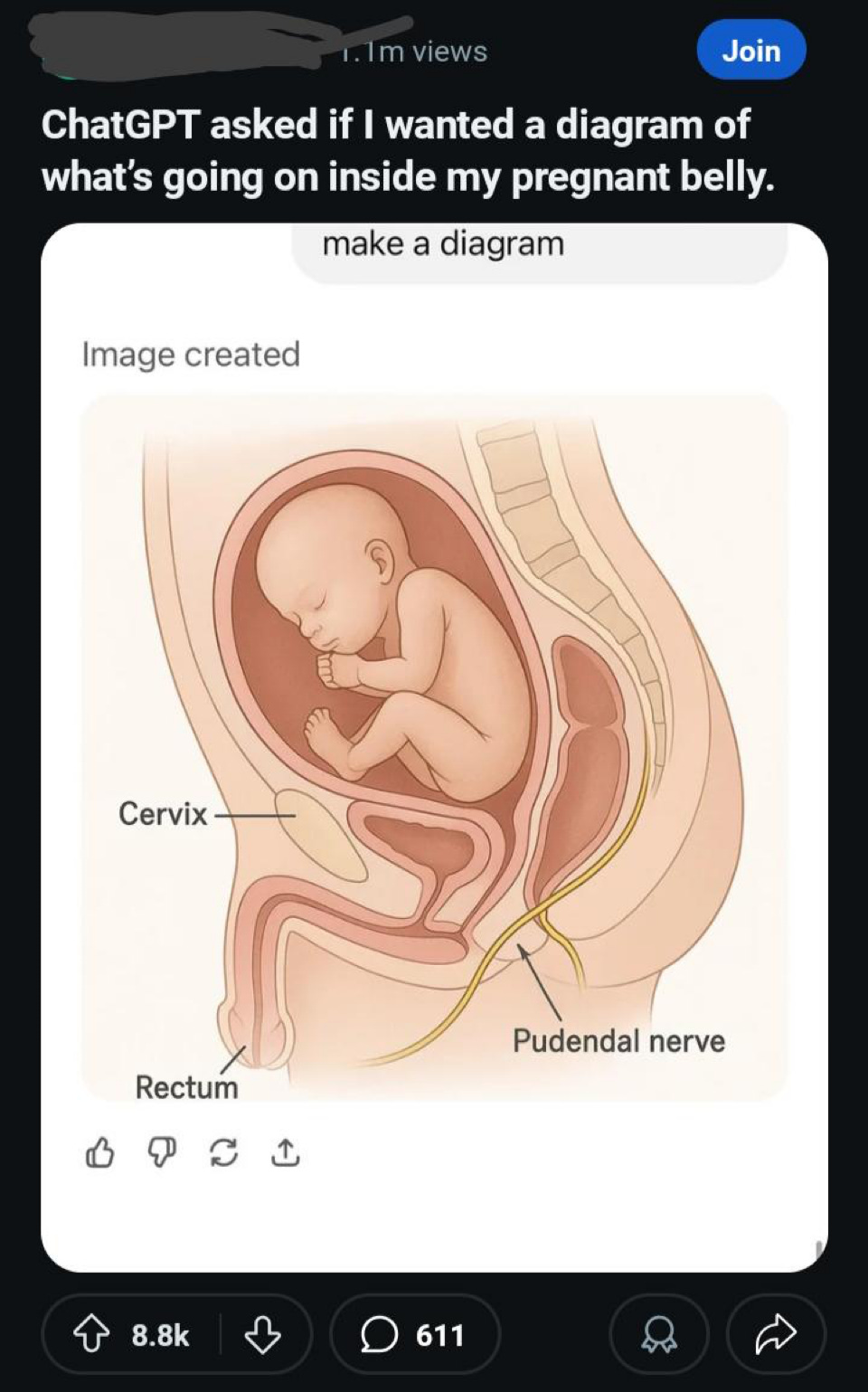

ChatGPT, by the way, also continues to struggle with the basic facts of life:

§

Scaling works, but only to a degree, and only on some aspects of intelligence and not others. Graphics in these systems do continue to get better, regularly. And now they can generate videos, and not just stills.

But it’s all still just an extended form of mimicry, not something deeper, as the persistent failures with parts and wholes keep showing us.

Beauty is only skin deep.

Gary Marcus felt chuffed this morning to read this from Andrew Keen, “For …. Marcus [who has] … endured what he diplomatically calls ‘an unbelievable amount of shit’ for his contrarian views … the irony is particularly delicious. He now finds himself vindicated as the very company he’s criticized adopts his language of caution and scaled-back expectation”. You can hear us discussing the weird sociology of contemporary AI here.

I guess my question is... why? Why do I need an artificially generated picture of a violin? Or a banana? Or anything? Thanks to the internet, images are not a problem in the least. What is the point of having a faster artificial violin? Is it so my mediocre D&D campaign can have an equally mundane drawing of a dragon?

I guess there's "progress" and whatnot, but it doesn't feel like progress. It feels like a hat on a hat.

The question is not so much “will we get anything resembling ‘understanding’ using pixel/token statistics if we scale that?” (the answer is ‘no’, period). There is no uncertainty here, so the question becomes boring.

An interesting question is “why does it take so long for this truth to sink in?”.

Another interesting question is “what kind of dystopian results of broad & shallow (cheap) AI will emerge?”.

The digital revolution has brought great things, but also horrendous ones: financialisation (where the world economy becomes mostly illusory, with only a few percent of it real) and economic crises, a collapse of shared values and the emergence of silos of lies, surveillance capitalism, and sycophantic oligarchy instead of democracy, to name a few. What is the current AI boom going to add to that?