What should we learn from OpenAI’s mistakes and broken promises?

It’s increasingly clear that OpenAI has not been consistently candid. What follows from that?

A year ago, Sam Altman was treated like a head of state; OpenAI could do no wrong. But lately, he and the company have made a lot of mistakes and broken a lot of promises. Here’s just a small sample:

OpenAI called itself open, and traded on the notion of being open, but even as early as May 2016 knew that the name was misleading. And Altman himself chose not to correct that misleading impression.

OpenAI proceeded to make a Scarlett Johansson-like voice for GPT-4o, even after she specifically told them not to, behaving in an entitled fashion that has angered many—highlighting their overall dismissive attitude towards artist consent.

OpenAI has trained on a massive amount of copyrighted material, without consent, and in many instances without compensation. The actress Justine Bateman described this as the greatest theft in US history. (Many lawsuits on the matter are pending.) President Greg Brockman personally downloaded YouTube videos, likely in violation of YouTube’s terms of service, as documented by the New York Times. Millions, perhaps billions, of documents have likely been taken without permission.

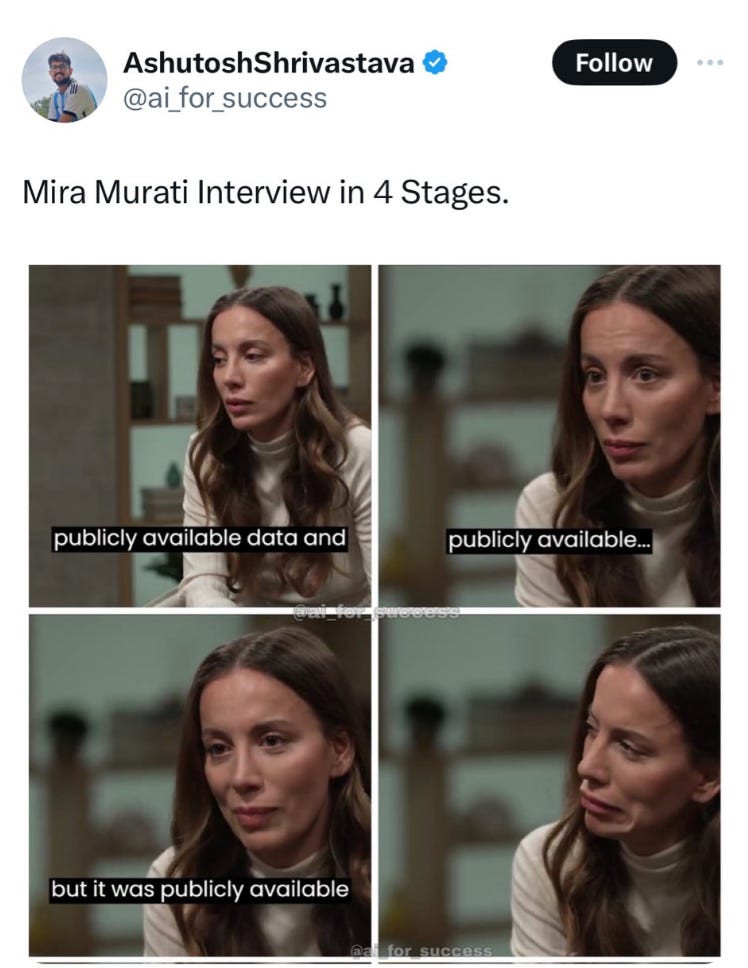

CTO Mira Murati embarrassed herself and the company in her interview with Joanna Stern of the Wall Street Journal, sneakily conflating “publicly available” with “public domain”, sticking to a prepared answer, and dodging questions about the sources on which Sora had been trained.

Altman appears to have misled people (including US Senators) about his personal holdings in OpenAI. He reported, correctly, that he has no direct equity, but neglected to mention his ownership of the OpenAI Startup Fund and his indirect holdings in OpenAI via his holdings in Y Combinator. He also omitted the fact that his role as CEO of the nonprofit OpenAI creates many potential conflicts of interest with other companies in which he has a stake and with which OpenAI might do business.

OpenAI promised to devote 20% of its computing resources to AI safety, but never delivered, according to a recent report by Jeremy Kahn at Fortune. Five safety-related employees recently left, including two of the most prominent ones, apparently over concerns that AI safety was not being taken seriously.

As Kelsey Piper recently documented, OpenAI had highly unusual contractual “clawback” clauses designed to keep employees from speaking out about any concerns about the company. (Altman has apologized for this, but signed off on the original articles of incorporation that made this possible.) Employees likely were not clearly informed of all this at the time when they signed.

Altman once (in 2016) promised that outsiders would play an important role in the company’s governance; that key promise has not been kept. (“We’re planning a way to allow wide swaths of the world to elect representatives to a new governance board. Because if I weren’t in on this I’d be, like, Why do these fuckers get to decide what happens to me?”, as quoted in the New Yorker, 2016).

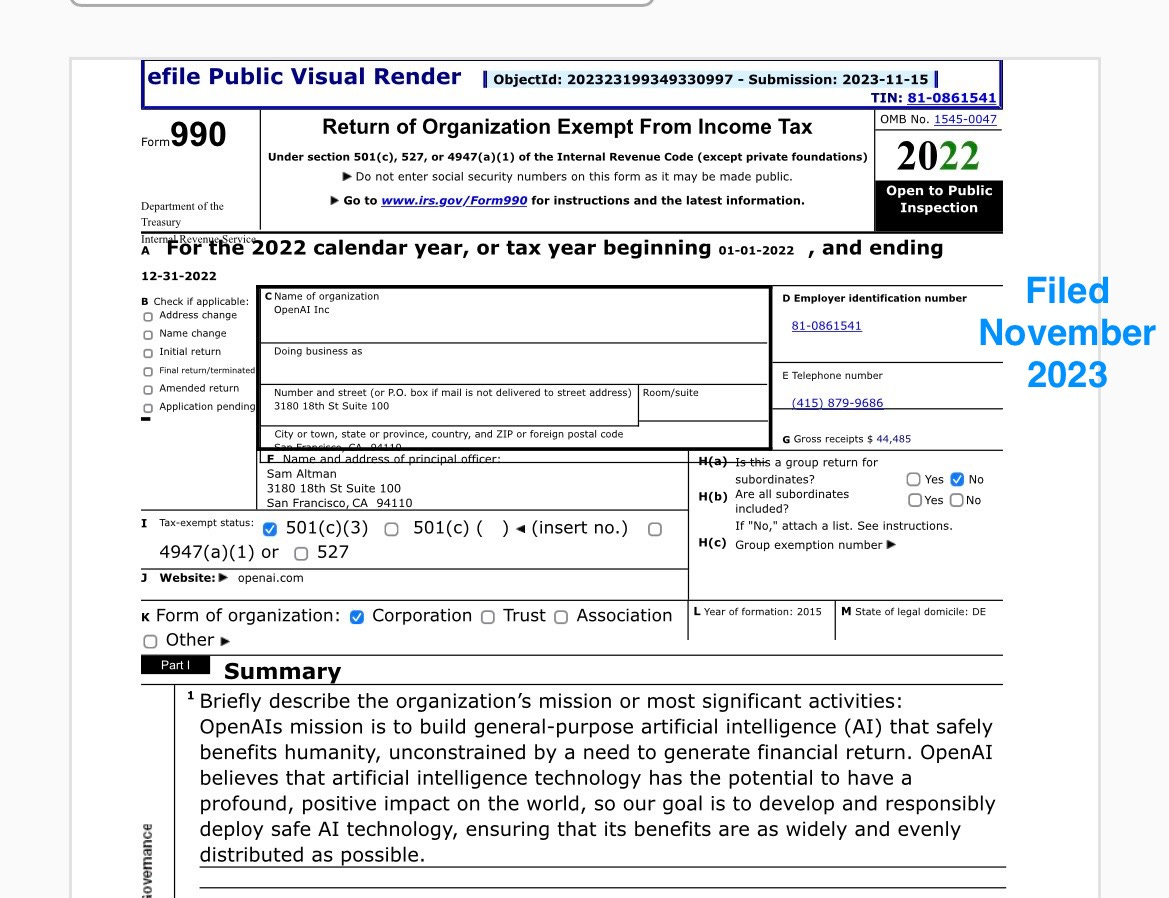

As recently as November 2023, OpenAI filed for nonprofit tax-exempt status, claiming that the company’s mission was to “safely benefit humanity, unconstrained by a need to generate financial return”, even as it turns over almost half its profits (if it makes any) to Microsoft and seems to have back-burnered its safety work.

The list above is not complete, but it shows a significant gap between the rosy rhetoric and the actual reality, and in my view that gap has only grown over time, particularly over the last year or so.

§

At this point, I think it is correct for the public to take everything OpenAI says with a grain of salt. But OpenAI still has massive power, and with it the chance to screw up and possibly put humanity at risk. If they ever got to AGI, I would be worried.

§

Our government has been repeatedly complicit, treating Altman as royalty. Homeland Security Secretary Alejandro Mayorkas, for example, “personally courted” Altman to join his new AI Safety Board.

This is particularly disconcerting coming from Homeland Security, given that most of the troubling facts above were already publicly known, aside from the ScarJo situation, the clawback clauses, and some of the recent departures. It is all the more troubling given both the potential power of the board and Altman’s apparent indifference to conflicts of interest. It’s one thing for the media to fail to adequately diligence Altman, another altogether for the Department of Homeland Security to do so.

If the first lesson is specific (that OpenAI should not be fully trusted), the second lesson is general: just because someone is rich or successful doesn’t necessarily mean they should be trusted. (Cognitive psychology fans will recognize this as a corollary of the halo effect, in which people who are attractive in one regard are regarded overly positively in others.)

§

The most important lesson, though, is a generalization of the first two, which comes from two former OpenAI board members who tried, unsuccessfully, to do something about OpenAI’s apparently problematic internal governance. They published this earlier today in The Economist:

They tried to warn us before, and few people listened. I hope the public will listen this time.

§

The one thing I would add is this: we can’t trust governments here, either. Most governments, however well-intentioned, aren’t expert enough, and lobbying runs deep and at many levels. Regulatory capture is the default, as Karla Ortiz recently noted:

Without independent scientists in the loop, with a real voice, we are lost.

As I argue, in my upcoming book Taming Silicon Valley, our only real hope is if citizens speak up.

Gary Marcus has been trying to warn people about OpenAI since 2019.
