Why California’s AI safety bill should (still) be signed into law - and why that won’t be nearly enough

Thursday broke my heart. California’s SB-1047, a bill aimed primarily at liability for catastrophic risks, was significantly weakened in last-minute negotiations. Though not yet signed into law, it was on its way to becoming one of the first truly substantive AI bills in the US.

The technology ethicist Carissa Veliz posted a succinct summary of much of the damage:

The bill no longer allows the [Attorney General] to sue companies for negligent safety practices before a catastrophic event occurs; it no longer creates a new state agency to monitor compliance; it no longer requires AI labs to certify their safety testing under penalty of perjury; and it no longer requires “reasonable assurance” from developers that their models won’t be harmful (they must only take “reasonable care” instead)

None of that is to the good.

None of it is surprising either. As I argue in my forthcoming book, Taming Silicon Valley, the tech world often speaks out of both sides of its mouth (there are two whole chapters on its Jedi mind tricks, in fact).

When Sam Altman stood next to me in the Senate, he told senators that he strongly favored AI regulation. But behind the scenes his team fought to water down the EU AI Act. Most or all of the major big tech companies joined a lobbying organization that fought SB-1047 tooth and nail, despite broad public support for the bill. Many startups did too. The prominent venture capital firm Andreessen Horowitz and others (including at least one prominent academic in whom they have heavily invested) fought it continuously. To my mind, many of these arguments were based on misrepresentation, as I have discussed here in earlier essays. As Garrison Lovely noted at The Nation, it was a “masks off” moment for the industry.

We, the people, lose. In its new form, SB-1047 can basically be used only after something really bad happens, as a tool to hold companies liable. It can no longer protect us against obvious negligence that is likely to lead to great harm. And the “reasonable care” standard strikes me (as the son of a lawyer, but not myself a lawyer) as somewhat weak. It’s not nothing, but companies worth billions or trillions of dollars may make mincemeat of that standard. Any legal action may take many years to conclude. Companies may simply roll the dice and, as Eric Schmidt recently said, let the lawyers “clean up the mess” after the fact.

There’s another problem with this bill, too: it’s too narrow. It focuses mainly on catastrophic harm causing at least half a billion dollars in damage, and does too little to address other issues, which I fear people may forget once it is (or is not) passed. In a letter to Scott Wiener, the bill’s sponsor, Congresswoman Zoe Lofgren (who opposes the bill and is often seen as supportive of Big Tech) argued, in an otherwise somewhat problematic letter, that

SB 1047 seems heavily skewed toward addressing hypothetical existential risks while largely ignoring demonstrable AI risks like misinformation, discrimination, nonconsensual deepfakes, environmental impacts, and workforce displacement.

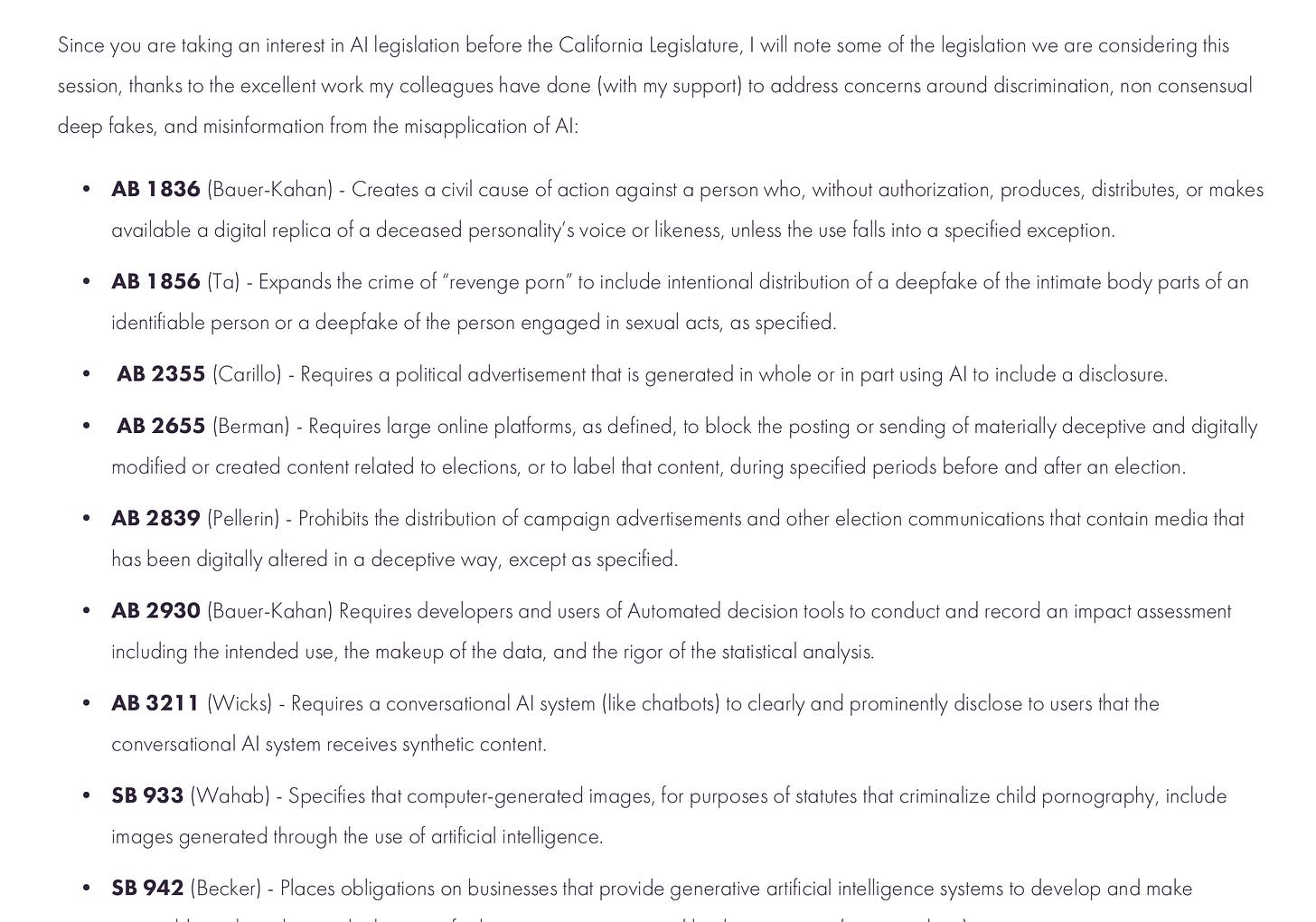

In a reply to Lofgren, Wiener himself happily acknowledged that this bill is just one piece of a puzzle, noting that SB-1047 (despite all the attention, positive and negative, heaped upon it) is just one bill among many still actively being considered in California (many of which, e.g., on deepfakes, he has supported):

Whether or not SB-1047 passes, so much of what those bills are about, ranging from political advertising and disclosure to deepfake porn, still needs to be addressed. There will be a temptation, if the bill passes, for legislators to say “Great, AI has been taken care of,” but SB-1047, by design, addresses only a small part of what needs to be addressed.

Nobody should forget that, whether or not SB-1047 passes.

§

Still, I support the bill, even in weakened form. If its specter causes even one AI company to think through its actions, or to take the alignment of AI models to human values more seriously, it will be to the good. Companies like OpenAI that have talked big about investing in safety research and then stinted on their promises may finally be incentivized to walk the walk that their talk has promised. For now, instead, we have CEOs of companies like Anthropic blithely saying that AI may well kill us all, maybe within a few years, and at the same time proceeding as fast as possible. If the bill makes companies think more carefully about these contradictions, I will be glad that it passed.

The bill also sends a message to Big Tech that the quality of safety protocols, and of internal safety investigations, is relevant to the reasonable care standard, which can help avoid Ford Pinto-like situations in which companies that should have known better fail to act.

Which is to say that even if nobody is ever penalized under SB-1047 as such, it’s good for the AI industry to realize sooner rather than later that it is potentially liable, so that companies more carefully consider the consequences of their actions. As Yale Law Professor Ketan Ramakrishnan told me on a phone call this morning, with respect to liability, SB-1047 in many ways mostly clarifies what would likely already be true but isn’t yet widely known. But there is considerable value in such clarification. As he put it, “The duty to take reasonable care and tort liability apply to AI developers, just like everyone else. [But] Tort law can’t deter people from behaving irresponsibly if they don’t know the law exists and applies to them.” SB-1047’s strongest utility may come as a deterrent.

It also provides important whistleblower protections, which, as many former OpenAI employees have made clear, are critical.

Most importantly, we cannot afford to be defeatist here. If the bill doesn’t pass, the view will be that Big Tech always wins; nobody will bother. Future state and federal efforts will suffer. We can’t afford that.

So it must pass. I hope the full CA Assembly will support it, and that Governor Newsom will sign it into law, rather than veto it.

But it cannot be the last AI bill to pass, in California or in the United States, if American citizens are to be protected from the many risks of AI, because it is but one link, already weakened, in the armor that we need in self-defense.

As I emphasized last year in front of the Senate, there is not one risk but many: bias, disinformation, defamation, toxicity, invasion of privacy, and so much more, to say nothing of risks to employment, intellectual property, and the environment, precisely because recent techniques, still poorly understood, can be applied in so many ways.

We absolutely need a comprehensive approach, and SB 1047 is just a start. And we need federal legislation; a messy state-by-state patchwork is one thing that could actually stifle AI development. Nobody should want that.

Once the bill passes, as I hope it will, we will see, contra Andreessen Horowitz (aka a16z) and others in Silicon Valley, that life goes on: innovation will continue just fine, no major tech company will actually leave California, and modest regulation that encourages companies to do the right thing is not fatal to the industry, as they alleged. So much of what a16z and others claimed in their fight against SB-1047 will be debunked.

Hopefully, SB 1047 will be signed into law, and the perfectly rational, and in fact entirely ordinary, notion of regulating AI will be normalized, and the absurd idea that such regulation would kill off AI will have vanished. As Yoshua Bengio said to me in an email, passing the law will “show that you can continue having innovation while forcing some safety precautions.”

At that point, maybe we can finally get down to the great deal of work still left to be done.

Gary Marcus is the author of Taming Silicon Valley (MIT Press), which will come out on September 17. The book was sent to press a few months ago, correctly anticipating that Silicon Valley would, despite public statements otherwise, fight actual AI regulation tooth and nail.

The book talks about what legislation we should actually want, at a national and global level, and how Silicon Valley has played both the public and the government.

Given the events of the last couple of weeks, its release could not be more timely. The most important part of the book is the epilogue, which says that you, the reader, need to stand up and be counted if we are to get to an AI that works for the many and not just the few.

Notice the astroturfing even here, where the commenters objecting to SB-1047 have consistently written no other comments, follow no subscriptions, etc.

My objection to this bill (CA SB-1047) is not that it would be fatal, or even significant, to the companies producing LLMs; it is that it is silly. How many LLMs does it take to change a lightbulb? Yeah, that's right: LLMs cannot change lightbulbs. They can't do anything but model language. They don't provide any original information that could not be found elsewhere. They are fluent, but not competent.

The bill includes liability for "enabling" catastrophic events. The latest markup revises that to "materially enable," but that is still too vague. Computers enabled the Manhattan Project. Was that material? Could it have been foreseen by their developers?

The silliest provision is the requirement to install a kill switch in any model before training, "the capability to promptly enact a full shutdown" of the model.

The risks that it seeks to mitigate might be real for some model, some day, but not today. The current state-of-the-art models do not present the anticipated risks, yet the criteria for what constitutes a "covered model" are all stated relative to current models (e.g., the number of FLOPs or the cost of training). Those criteria would not necessarily apply to future models, for example quantum computing models, or they might apply to too many models that present no risks. Future models may be trainable for less than $100 million, for example, and they would be excluded from this regulation. It makes no sense to apply today's criteria to models that do not yet exist; those criteria may not be relevant to the models that do present risks.

What this bill does is respond to, and lend government certification to, the hype surrounding GenAI models: it effectively certifies that these models are more powerful than they are. Despite industry protestations, this bill is a gift to the industry. If today's models are not genuinely (and generally) intelligent, they will be within a few years (or so the bill presumes), and so this specific kind of regulation is needed now. The state is contributing to marketing based on science fiction. That is silly.

Finally, the bill creates a business model for "third-party" organizations to certify that the models are safe. For the foreseeable future, those parties will be able to collect large fees without actually having to do any valuable work.

Today's models do not present the dangers anticipated by this bill, and it is dubious whether any future model ever will. The California legislature is being conned, and that is why I object to this bill. Stop the hype.