Generative AI’s Continuing Copyright Problems, an Essay in Memory of Suchir Balaji, 1998 - 2024

In early November, I had a stimulating Zoom call with Suchir Balaji, a Berkeley graduate who had recently left OpenAI. To my shock, I have just learned that he died three weeks later, an apparent suicide according to police reports.

It’s not betraying confidences from our call to say that he was concerned about OpenAI and copyright; we were introduced precisely because we shared those concerns. Suchir had made his views known in an October blog post and an October New York Times interview.

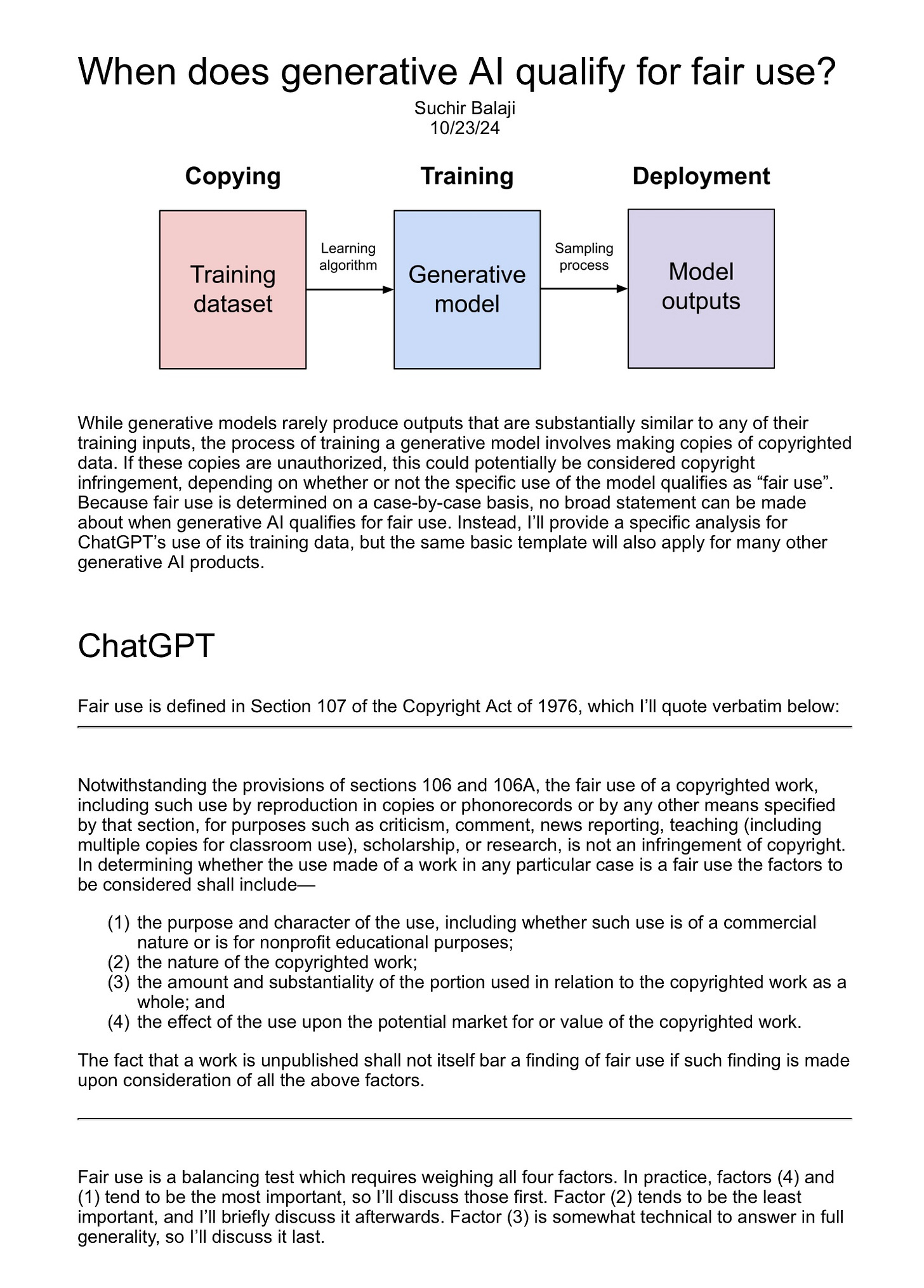

His blog was about one of the most important questions of our time: does generative AI qualify as fair use?

§

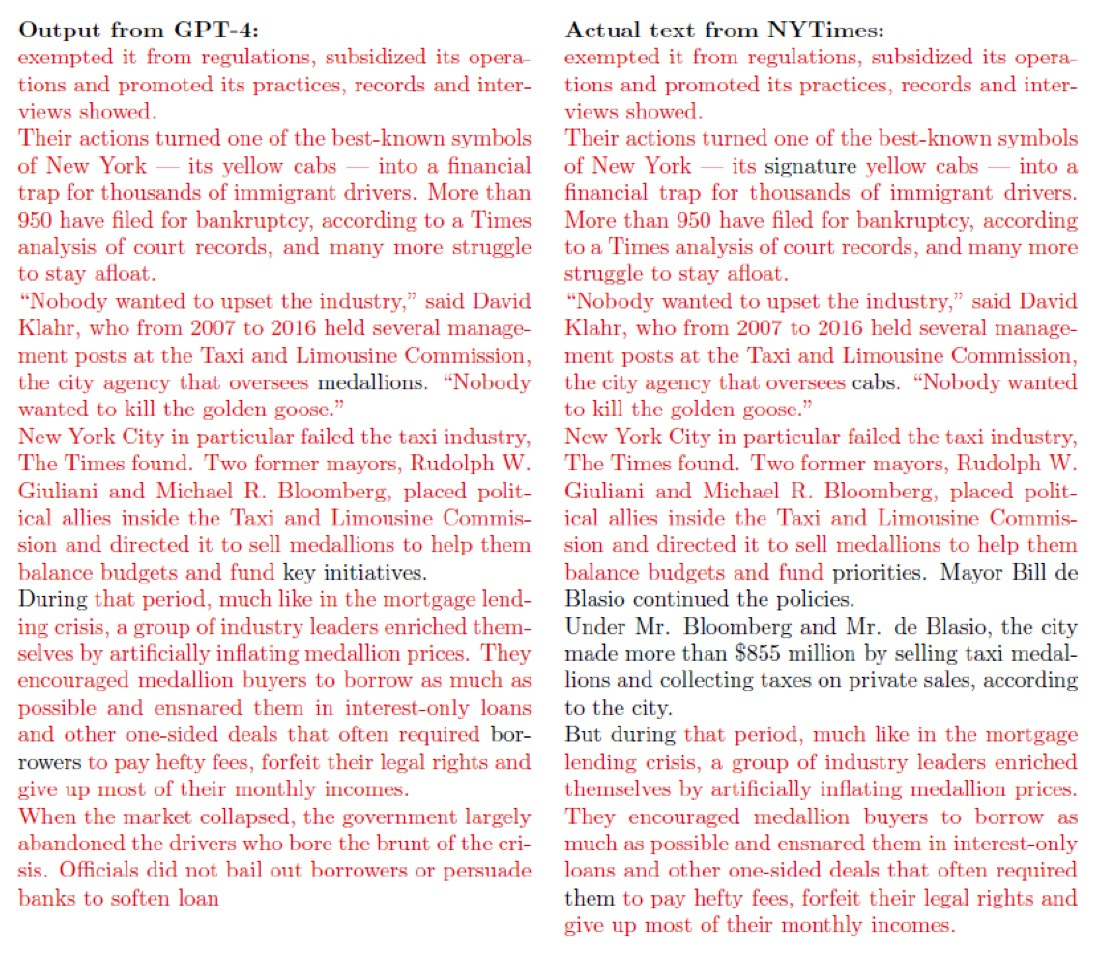

OpenAI’s problems with text and copyright started to become especially clear a year ago, when the New York Times sued the company and showed how close some of its models’ outputs could be to stories on the Times’s website.

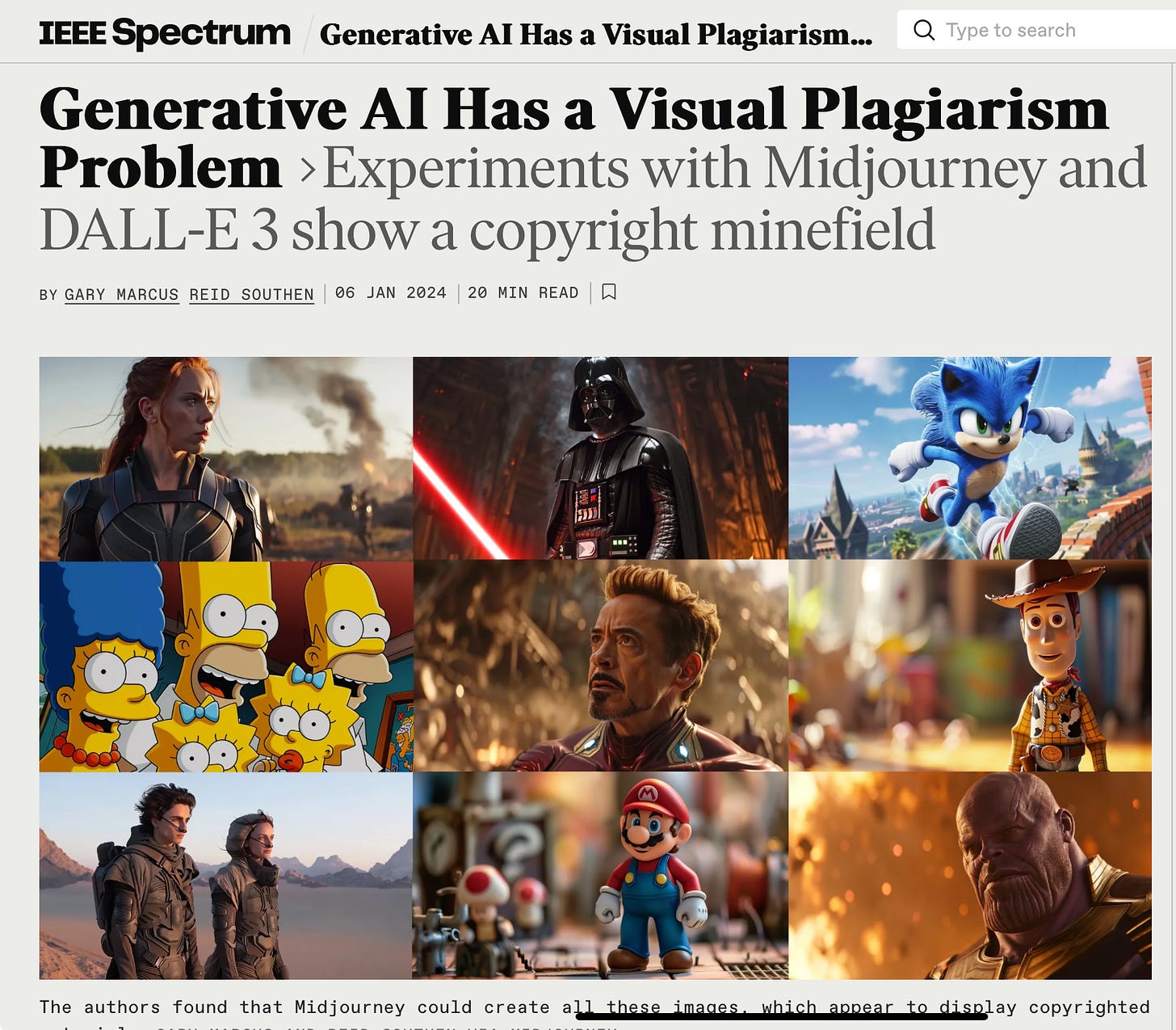

In January, in IEEE Spectrum, Reid Southen and I demonstrated similar issues in image generation software, such as Midjourney and DALL-E:

The most striking thing Southen and I found was near-plagiaristic copies of commercial characters produced without our directly eliciting them: a prompt like “Italian plumber” yielded Nintendo’s Mario character, even though we never named Mario. (A real artist would presumably create a new, original plumber.)

§

Nearly a year later, the problem of apparent infringement has not gone away.

OpenAI’s newly released video generation system, Sora, seems, like many prior systems, to have been extensively trained on copyrighted materials. Like its predecessors, it too seems prone, at least some of the time, to disgorging equally uncreative, near-plagiaristic outputs, like these examples Southen just shared with me:

A few days ago, TechCrunch pointed in the same direction:

The problems by now seem clear. Suchir was right to consider them.

§

That said, challenging the orthodoxy comes with a cost. As The Times of India put it, Suchir’s “outspoken criticism of OpenAI’s alleged copyright breaches…have thrust his passing into the spotlight, prompting questions about the pressure & challenges faced by those who dare to speak out against powerful tech entities.”

§

We don’t, of course, know why he died, but let us hope that Suchir’s concerns, and his bravery, will not be forgotten. It is unfortunate that California’s SB 1047, with its whistleblower protections, was vetoed. Society needs to do more to protect people like Suchir Balaji.

To close, here are some of Suchir’s own words, from the conclusion to his October blog.

> None of the four factors [in fair use] seem to weigh in favor of ChatGPT being a fair use of its training data. That being said, none of the arguments here are fundamentally specific to ChatGPT either, and similar arguments could be made for many generative AI products in a wide variety of domains.

Gary Marcus is a scientist, author, and entrepreneur who loves AI but thinks there is a much better, more compassionate way forward.