AI Coding Fantasy meets Pac-Man

Guess who won?

Last week I dissected Kevin Roose’s claims that neophytes would soon be able to build the software of their dreams without knowing how to write a line of code, and also criticized Roose (and his Hard Fork sidekick Casey Newton) for drinking Anthropic’s “code-will-all-be-written by machines” hyperbole.

Anthropic’s CEO Dario Amodei obviously failed to read my rebuttal. A few days ago he triumphantly claimed (without evidence) that nearly all code would be written by AI within the next year:

§

I am kicking myself now for going too easy on both. I think most or all of the points I made – about challenges in building and debugging novel systems with sufficient generality – will stand the test of time.

But, wow, The Guardian really just went me one better.

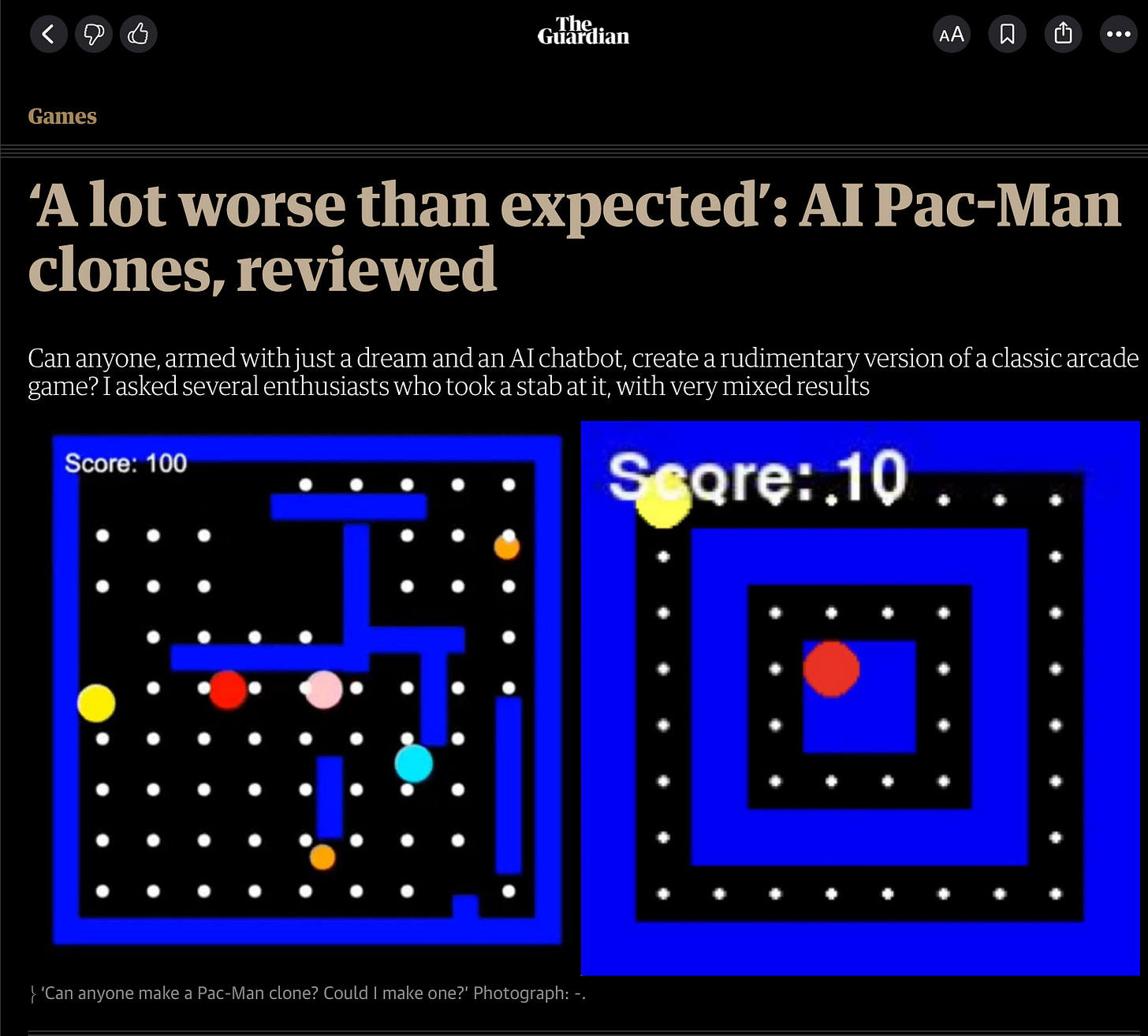

An enterprising reporter there, Rich Pelley, decided to put all these no-coding-knowledge claims to the test. And I don’t mean a hard test – like asking newbies to build a new real-time, networked air traffic control system – I mean an easy test. The reporter asked seven people to build a clone of the 1980 arcade bestseller Pac-Man – a low-resolution, 2D graphics game that absolutely pales in algorithmic complexity compared to almost any modern video game.

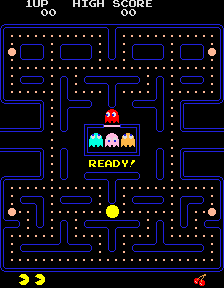

Remember it? Yellow character, controlled by a person, wanders a maze; ghosts chase the yellow character; that’s pretty much it. The entire gameplay can be described in a page.
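To make "described in a page" concrete, here is a rough sketch of just the rules named above: a player stepping through a maze, eating pellets, and one ghost giving chase. Everything in it is an assumption made for illustration: the tiny `MAZE` layout, the function names `step`, `chase`, and `tick`, and especially the breadth-first-search chase, which is a simple stand-in and not a claim about how the arcade game's ghosts actually behave.

```python
from collections import deque

# Hypothetical toy maze: '#' is a wall, '.' is a corridor cell holding a pellet.
MAZE = [
    "#######",
    "#.....#",
    "#.#.#.#",
    "#.....#",
    "#######",
]

MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def is_open(cell):
    """True if the (row, col) cell is not a wall."""
    row, col = cell
    return MAZE[row][col] != "#"


def step(cell, direction):
    """Move one cell in `direction`, staying put if a wall is in the way."""
    d_row, d_col = MOVES[direction]
    target = (cell[0] + d_row, cell[1] + d_col)
    return target if is_open(target) else cell


def chase(ghost, player):
    """Return the ghost's next cell on a shortest path to the player (BFS)."""
    if ghost == player:
        return ghost
    came_from = {ghost: None}
    queue = deque([ghost])
    while queue:
        current = queue.popleft()
        if current == player:
            break
        for direction in MOVES:
            neighbour = step(current, direction)
            if neighbour not in came_from:
                came_from[neighbour] = current
                queue.append(neighbour)
    if player not in came_from:
        return ghost  # player unreachable: ghost stays where it is
    # Walk back from the player to the cell directly after the ghost.
    cell = player
    while came_from[cell] != ghost:
        cell = came_from[cell]
    return cell


def tick(player, ghost, pellets, direction):
    """Advance one turn and return (player, ghost, pellets, caught)."""
    player = step(player, direction)
    pellets = pellets - {player}  # eat the pellet on the new cell, if any
    if player == ghost:
        return player, ghost, pellets, True
    ghost = chase(ghost, player)
    return player, ghost, pellets, ghost == player
```

A game would call `tick` once per frame with the player's latest input. Getting from a sketch like this to a polished, playable clone (timing, animation, multiple ghosts, scoring, levels) is where the real work lies.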

You would think this job (not even asking for variants like extra ghosts) would be easy. I don’t want to spoil all the fun, but … things didn’t go well:

To be sure, most or all trained coders will (if they do not already) soon use AI as a part of their workflow. As a fantastic, souped-up version of autocomplete, AI coding tools somewhat increase productivity. They can help a coder learn a new API, or maybe even a new programming language. But current AI tools don’t replace an understanding of debugging, nor an understanding of system architecture, nor an understanding of what clients want. And they don’t magically resolve the challenges of maintaining complex code bases over time. In some instances, an overdependence on the new tools will lead beginners to make giant mountains of difficult-to-maintain spaghetti code; security will be an issue as well. The idea that coders (and more generally, software architects) are on their way out is absurd. AI will be a tool to help people write code, just as spell-checkers are a tool to help authors write articles and novels, but AI will not soon replace people who understand how to conceive of, write, and debug code.

If you can’t get Pac-Man right in 2025 (full original source code to the Atari 2600 version available on GitHub[1]), how on earth are you going to build the next high-quality Zelda clone next year?

Gary Marcus is enjoying this week’s third anniversary of “Deep learning is hitting a wall”, and will write an update if he can find the time.

[1] The Atari source code is written in 6502 assembly language, one of the first programming languages I ever learned. My guess is that current AI coding tools would struggle to use 6502 well, for multiple reasons, including a lack of high-level libraries, fewer Stack Overflow queries to rely on, and a greater need for the coding tool to understand high-level architectural concerns that might be side-stepped in higher-level languages. (Want to know what coders had to deal with back then? Check out Racing The Beam.)

I don't know about other people, but to me these constant salvos of AI hype are like watching an old silent comedy where people are throwing custard pies at each other. The first couple of pies are funny and then it just gets tedious.

Over 50 years I have written over a million lines of code in over 20 languages. AI gives me a step up in blocking out preliminary solutions, especially in the earliest stages. However, it sucks at debugging, and I mean really sucks. The worst is an inhuman 'proximity bias': when asked for a simple solution to a small issue, it will often offer a complete refactoring, entailing starting from scratch, and when challenged offers a transparently self-congratulatory "apology". The hazards barely exceed the opportunities.