AI risk ≠ AGI risk

Superintelligence may or may not be imminent. But there’s a lot to be worried about, either way.

Is AI going to kill us all? I don’t know, and you don’t either.

But Geoff Hinton has started to worry, and so have I. I’d heard about Hinton’s concerns through the grapevine last week, and he acknowledged them publicly yesterday.

Amplifying his concerns, I posed a thought experiment:

Soon, hundreds of people, even Elon Musk, chimed in.

It’s not often that Hinton, Musk, and I are even in partial agreement. Musk and I also both signed a letter of concern from the Future of Life Institute [FLI] earlier this week, which is theoretically embargoed until tomorrow but is easy enough to find.

§

I’ve been getting pushback[1] and queries ever since I posted the Hinton tweet. Some thought I had misinterpreted the Hinton tweet (given my independent sourcing, I am quite sure I didn’t); others complained that I was focusing on the wrong set of risks (either too much on the short term, or too much on the long term).

One distinguished colleague wrote to me asking “won’t this [FLI] letter create unjustified fears of imminent AGI, superintelligence, etc?” Some people were so surprised by my amplifying Hinton’s concerns that a whole Twitter thread popped up speculating about my own beliefs:

My beliefs have not in fact changed. I still don’t think large language models have much to do with superintelligence or artificial general intelligence [AGI]; I still think, with Yann LeCun, that LLMs are an “off-ramp” on the road to AGI. And my scenarios for doom are perhaps not the same as Hinton’s or Musk’s; theirs (from what I can tell) seem to center mainly around what happens if computers rapidly and radically self-improve, which I don’t see as an immediate possibility.

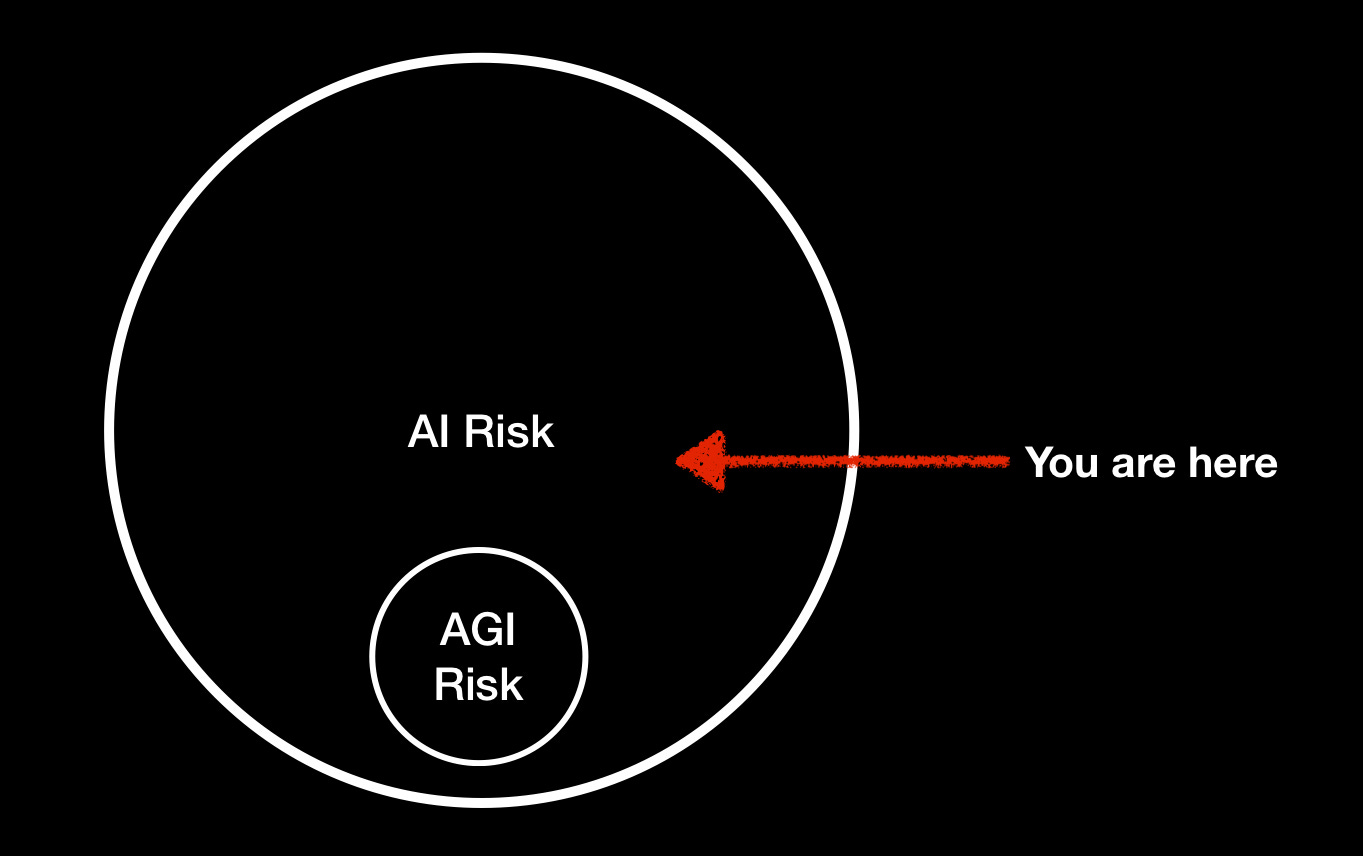

But here’s the thing: although a lot of the literature equates artificial intelligence risk with the risk of superintelligence or artificial general intelligence, you don’t have to be superintelligent to create serious problems. I am not worried, immediately, about “AGI risk” (the risk of superintelligent machines beyond our control); in the near term I am worried about what I will call “MAI risk”: Mediocre AI that is unreliable (a la Bing and GPT-4) but widely deployed, both in terms of the sheer number of people using it, and in terms of the access that the software has to the world. A company called Adept.AI just raised $350 million to do just that, to allow large language models to access, well, pretty much everything (aiming to “supercharge your capabilities on any software tool or API in the world” with LLMs, despite their clear tendencies towards hallucination and unreliability).

Lots of ordinary humans, perhaps of above average intelligence but not necessarily genius-level, have created all kinds of problems throughout history; in many ways, the critical variable is not intelligence but power, which often cashes out as access. In principle, a single idiot with the nuclear codes could destroy the world, with only a modest amount of intelligence and a surplus of ill-deserved access.

If an LLM can trick a single human into doing a Captcha, as OpenAI recently observed, it can, in the hands of a bad actor, create all kinds of mayhem. When LLMs were a lab curiosity, known only within the field, they didn’t pose much of a problem. But now that (a) they are widely known, and of interest to criminals, and (b) they are increasingly being given access to the external world (including humans), they can do more damage.

Although the AI community often focuses on long-term risk, I am not alone in worrying about serious, immediate implications. Europol came out yesterday with a report considering some of the criminal possibilities, and it’s sobering.

They emphasize misinformation, as I often have, and a whole lot more:

Here’s an excerpt of what they said about fraud and terrorism:

With the help of LLMs, these types of phishing and online fraud can be created faster, much more authentically, and at significantly increased scale.

The ability of LLMs to detect and re-produce language patterns does not only facilitate phishing and online fraud, but can also generally be used to impersonate the style of speech of specific individuals or groups. This capability can be abused at scale to mislead potential victims into placing their trust in the hands of criminal actors.

In addition to the criminal activities outlined above, the capabilities of ChatGPT lend themselves to a number of potential abuse cases in the area of terrorism, propaganda, and disinformation. As such, the model can be used to generally gather more information that may facilitate terrorist activities, such as for instance, terrorism financing or anonymous file sharing.

Perhaps coupled with mass AI-generated propaganda, LLM-enhanced terrorism could in turn lead to nuclear war, or to the deliberate spread of pathogens worse than covid-19, etc. Many, many people could die; civilization could be utterly disrupted. Maybe humans would not literally be “wiped from the earth,” but things could get very bad indeed.

How likely is any of this? We have no earthly idea. My 1% number in the tweet was just a thought experiment. But it’s not 0%.

Hinton’s phrase — “it’s not inconceivable” — was exactly right, and I think it applies both to some of the long-term scenarios that people like Eliezer Yudkowsky have worried about, and some of the short-term scenarios that Europol and I have worried about.

§

The real issue is control. Hinton’s worry, as articulated in the article I linked in my tweet above (itself a summary of a CBS interview), was about what happens if we lose control of self-improving machines. I don’t know when we will get to such machines, but I do know that we don’t have tons of controls over current AI, especially now that people can hook it up to TaskRabbit and real-world software APIs.

We need to stop worrying (just) about Skynet and robots taking over the world, and think a lot more about what criminals, including terrorists, might do with LLMs, and what, if anything, we might do to stop them.

But we also need to treat LLMs as a dress rehearsal for future synthetic intelligence, and ask ourselves hard questions about what on earth we are going to do with future technology, which might well be even more difficult to control. Hinton told CBS, “I think it's very reasonable for people to be worrying about these issues now, even though it's not going to happen in the next year or two”, and I agree.

It’s not an either/or situation; current technology already poses enormous risks that we are ill-prepared for. With future technology, things could well get worse. Criticizing people for focusing on the “wrong risks” (an ever-popular sport on Twitter) isn’t helping anybody; there’s enough risk to go around.

We need all hands on deck.

Gary Marcus (@garymarcus), scientist, bestselling author, and entrepreneur, is deeply, deeply concerned about current AI but really hoping that we might do better.

Watch for his new podcast, Humans versus Machines, debuting later this spring.

[1] One piece of pushback came from Google researcher Dan Brickley:

I am with Brickley in thinking we have lots to worry about, if not the details (ods? Time? Bitcoin?). But the rank-ordering Brickley requests is a bit of a red herring. Sepsis doesn’t kill as many people as cancer, but that doesn’t mean we should not work on sepsis. Car accidents kill fewer than either sepsis or cancer, but that doesn’t mean we should drop seatbelt laws. Should I stop caring about lead paint because there are guns, or vice versa?

Importantly, AI may well directly or indirectly accelerate many of these risks (e.g., misinformation, future pandemics, rogue nuclear weapon launches, misuse of CRISPR, etc). Whether AI is at number one or number 10, we need to get serious about it, now, given the sudden rapid widespread deployment and lack of clarity about use cases and means of mitigation.

This seems exactly right. The risk right now is not malevolent AI but malevolent humans using AI.

Very well said!

And while there is a growing recognition of the potential harm, damage, or even destruction from misused LLMs or other forms of AI, there is already immediate harm, growing harm, that will lead to much pain, suffering, and death, many deaths, though as you have said, causality will be difficult to prove. The US and much of the rest of the world are experiencing a mental health crisis. It’s very complicated, with profound confusion about how to address it. This has created an explosion of billions in funding for mental health and well-being apps (sixty-seven percent had been developed without any guidance from a healthcare professional), some of which are already using LLMs and GPT, and we know the results in advance. It is a bit like social media, which has many benefits, but many years later society at large is just starting to understand the significant harm, especially to children and the vulnerable, but also to society as a whole. This current form of AI is doing real harm today that will grow to great harm, even if the AI is not malevolent or does not eventually lead to the end of civilization.