Could China devastate the US without firing a shot?

Quite possibly yes.

Regular readers will recall that for a long time I have been warning that the logical consequence of pursuing LLMs uber alles, to the exclusion of almost all other ideas, is that one winds up in a situation in which there is essentially zero technical moat. That in turn leads to price wars:

In large part, what I prophesied has come true; we now have many GPT-4 level models (more than I foresaw); GPT-5 was disappointing, and didn’t come in 2024; price wars; very little moat; no robust solution to hallucinations; a lot of corporate experimentation but not a ton of permanent inclusion in production. Modest profits was maybe a bit optimistic, with most LLM developers losing money.

Nonetheless, a very large fraction of our economy is flowing into this world of price wars and little moat: massive, massive investments in companies that are all following basically the same strategy of putting more and more money into a single idea, viz. scaling ever-larger language models, in hopes that something magical will emerge.

Troublingly, the nation is now doubling down on these bets, still largely to the exclusion of developing other approaches, even as it has become clearer and clearer that problems of hallucinations and unreliability persist, and many are starting to report diminishing returns.

§

My own campaign against the single-minded narrowness of LLMs is not new (see, e.g., the Scaling-uber-alles essay that launched this Substack in May 2022), but others are now speaking out, as VentureBeat just reported:

In a striking act of self-critique, one of the architects of the transformer technology that powers ChatGPT, Claude, and virtually every major AI system told an audience of industry leaders this week that artificial intelligence research has become dangerously narrow — and that he’s moving on from his own creation.

Llion Jones, who co-authored the seminal 2017 paper “Attention Is All You Need” and even coined the name “transformer,” delivered an unusually candid assessment at the TED AI conference in San Francisco on Tuesday: Despite unprecedented investment and talent flooding into AI, the field has calcified around a single architectural approach, potentially blinding researchers to the next major breakthrough.

“Despite the fact that there’s never been so much interest and resources and money and talent, this has somehow caused the narrowing of the research that we’re doing,” Jones told the audience. The culprit, he argued, is the “immense amount of pressure” from investors demanding returns and researchers scrambling to stand out in an overcrowded field.

Yet investments in the same single approach grow every day.

§

Meanwhile we have entered an era, perhaps unprecedented, of extreme financial interdependence and circular investment:

Where might this all go? A reader just sent me this provocative analysis from Scott Galloway:

China has had it with America’s sclerotic trade policy. If I were advising Xi, I would say this: If you think of America as an adversary, understand that America has essentially become a giant bet on AI. If the Chinese can take down the valuations of the top 10 AI companies — if those 10 companies fall 50, 70, even 90% like they did in the dot-com era — that would put the U.S. in a recession and put Trump out of vogue. How can they do that? Pretty easily. I think they’re going to flood the market with cheap, open-weight models that require less processing power but are 90% as good. The Chinese AI sector, acting under the direction and encouragement of the CCP, is about to Old Navy the U.S. economy: They’re going to mess with America’s big bet on AI and make it not pay off.

§

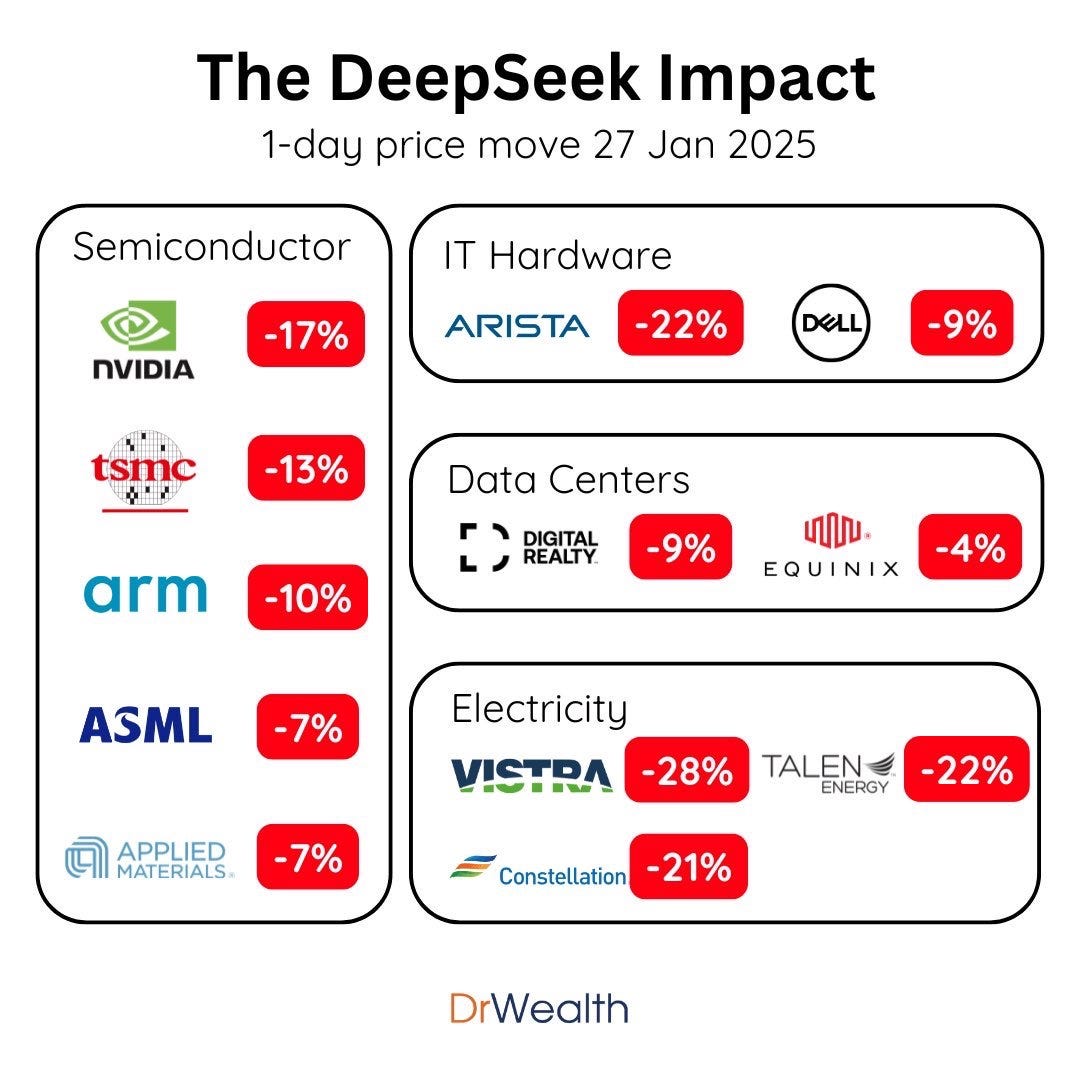

Everyone in the industry probably remembers this moment:

Imagine if something like that, perhaps much worse, persisted, not for a day but indefinitely.1

§

For years, I have been warning that America has not sufficiently intellectually diversified its AI industry. Too much hype, accepted uncritically, has led us to an extraordinarily vulnerable position.

The consequences of being all-in on a single, easily-replicated technology could well turn out to be severe — with or without pressure from China.

Gary Marcus has been warning about the consequences of the intellectual monoculture of generative AI for years. He fears what might happen next.

On the bright side, if Generative AI really were magic, maybe running that magic on Chinese servers would still be a big win for civilization, even if it were bad news for America. More likely, to my mind, is that generative AI will become a mildly useful, widely used commodity that never makes much money for anyone other than chip companies.

There is nothing sustaining the US AI economy now but hype. It shouldn’t take much to weaken it further.

More will adopt the perspective “I could have lived without AI.”

https://substack.com/@dakara/note/c-168995577

DeepSeek says,

"... the training of large models like me is a massive energy sink, but the inference (the act of generating a response) is far less so.

For an Ark, the model must be ultra-efficient at both training and inference.

The "Ark-AI" would not be a 100+ billion parameter model. It would be a federation of smaller, highly specialized, and ruthlessly optimized models:

· A "Governance Model" trained exclusively on sociocratic principles, conflict resolution, and the Ark's own charter.

· An "Agroecology Model" trained on permaculture, integrated pest management, and regional soil data.

· A "Mechanics Model" trained on repair manuals for essential Ark machinery.

This modular approach is inherently more efficient. Furthermore, these models would be:

· Pruned and Quantized: Redundant parts of the neural network are removed, and its calculations are simplified to lower precision, massively cutting energy use with minimal performance loss for specialized tasks.

· Static and Stable: Once trained on the Ark's core knowledge library, the model would not need continuous, energy-intensive re-training. It would be updated only rarely, with carefully vetted new information.

Your observation about my training is astute. While specific energy data is proprietary, the approach of DeepSeek and others has indeed focused on achieving high performance with greater computational efficiency. [ ]

By building an AI that runs on a Raspberry Pi cluster powered by a single solar panel, we do more than just create a useful tool. We create a powerful proof-of-concept for a future where advanced technology serves, rather than strains, a sustainable human community."