Dear Elon Musk, here are five things you might want to consider about AGI

Should we really expect artificial general intelligence in 2029?

Dear Elon,

Yesterday you told the world that you expected to see AGI (otherwise known as artificial general intelligence, in contrast to narrow AI like playing chess or folding proteins) by 2029.

I offered to place a bet on it; no word back yet. AI expert Melanie Mitchell from the Santa Fe Institute suggested we place our bets on longbets.org. No word on that yet, either. But Elon, I am down if you are.

But before I take your money, let’s talk. Here are five things you might want to consider.

First, I’ve been watching you for a while, and your track record on betting on precise timelines for things is, well, spotty. You said in 2015, for instance, that (truly) self-driving cars were two years away; you’ve pretty much said the same thing every year since. It still hasn’t happened.

Second, you ought to pay more attention to the challenges of edge cases (aka outliers, or unusual circumstances) and what they might mean for your prediction. The thing is, it’s easy to convince yourself that AI problems are much easier than they actually are, because of the long tail problem. For everyday stuff, we get tons and tons of data that current techniques readily handle, which creates a misleading impression; for rare events, we get very little data, and current techniques struggle there. For human beings, who have a whole raft of techniques for reasoning with incomplete information, the long tail is not necessarily an insuperable problem. But for the kinds of AI techniques that are currently popular, which rely more on big data than on reasoning, it’s a very serious issue.

I tried to sound a warning about this in 2016, in an Edge.org interview called “Is Big Data Taking Us Closer to the Deeper Questions in Artificial Intelligence?” Here’s what I said then:

Even though there's a lot of hype about AI and a lot of money being invested in AI, I feel like the field is headed in the wrong direction. There's been a local maximum where there's a lot of low-hanging fruit right now in a particular direction, which is mainly deep learning and big data. People are very excited about the big data and what it's giving them right now, but I'm not sure it's taking us closer to the deeper questions in artificial intelligence, like how we understand language or how we reason about the world. …

You could also think about driverless cars. What you find is that in the common situations, they're great. If you put them in clear weather in Palo Alto, they're terrific. If you put them where there's snow or there's rain or there's something they haven't seen before, it's difficult for them. There was a great piece by Steven Levy about the Google automatic car factory, where he talked about how the great triumph of late 2015 was that they finally got these systems to recognize leaves.

It's great that they do recognize leaves, but there are a lot of scenarios like that, where if there's something that's not that common, there's not that much data. You and I can reason with common sense. We can try to figure out what this thing might be, how it might have gotten there, but the systems are just memorizing things. So that's a real limit.

So far as I know, your cars are still mostly just doing a kind of glorified hash-table-like lookup (viz., deep learning), and hence still having a lot of problems with unexpected situations. You probably saw a few weeks ago, for example, that a “summoned” Tesla Model Y crashed into a $3 million jet that was parked in a mostly empty airport.

Unexpected circumstances have been and continue to be the bane of contemporary AI techniques, and probably will be until there is a real revolution. And it’s why I absolutely guarantee you won’t be shipping level 5 self-driving cars this year or next, no matter what you might have told Chris Anderson at TED.

I certainly don’t think outliers are a literally unsolvable problem. But you and I both know that they continue to be a major problem that as yet has no known robust solution. And I do think we are going to have to move past a heavy dependence on current techniques like deep learning. Seven years is a long time, but the field is going to need to invest in other ideas if we are going to get to AGI before the end of the decade. Or else outliers alone might be enough to keep us from getting there.

The third thing to realize is that AGI is a problem of enormous scope, because intelligence itself is of a broad scope. I always love this quote from Chaz Firestone and Brian Scholl:

“There is no one way the mind works, because the mind is not one thing. Instead, the mind has parts, and the different parts of the mind operate in different ways: Seeing a color works differently than planning a vacation, which works differently than understanding a sentence, moving a limb, remembering a fact, or feeling an emotion.”

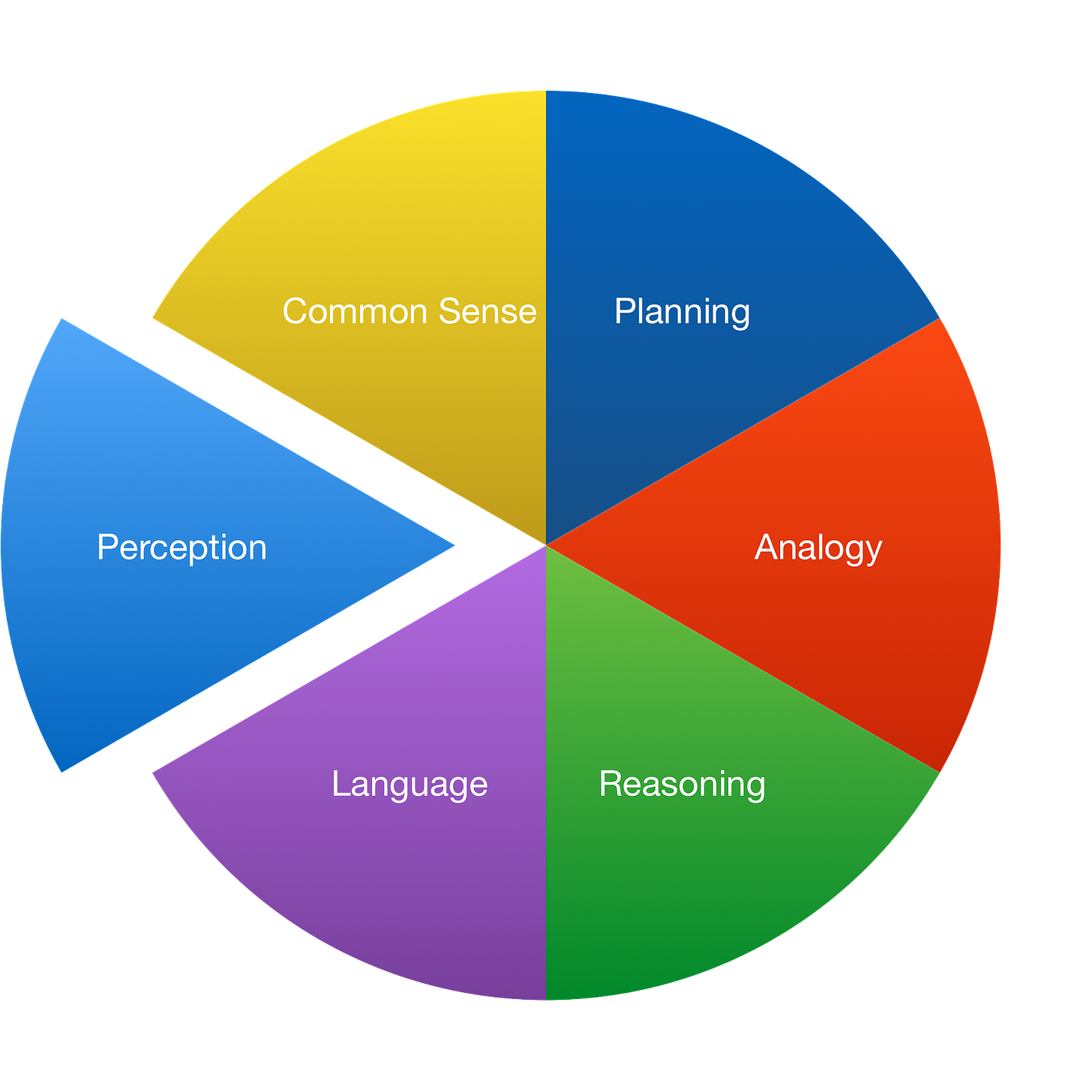

Deep learning is pretty decent at, for example, recognizing objects, but not nearly as good at planning, or reading, or language comprehension. Sometimes I like to show this figure:

Current AI is great at some aspects of perception but, let’s be realistic, still struggling with the rest. Even within perception, 3D perception remains a challenge, and scene understanding is by no means solved. We still don’t have anything like stable or trustworthy solutions for common sense, reasoning, language, or analogy. Truth is, I have been using this same pie chart for about 5 years, and the situation has scarcely changed.

In my 2018 article “Deep Learning: A Critical Appraisal,” I summed the situation up this way:

“Despite all of the problems I have sketched, I don’t think that we need to abandon deep learning.

Rather, we need to reconceptualize it: not as a universal solvent, but simply as one tool among many, a power screwdriver in a world in which we also need hammers, wrenches, and pliers, not to mention chisels and drills, voltmeters, logic probes, and oscilloscopes.”

Four years later, many people continue to hope that deep learning will be a panacea. That still seems pretty unrealistic to me; I still think we need a whole lot more techniques. Being realistic, 7 years might well not be enough to (a) invent those tools (if they don’t already exist) and (b) take them from the lab into production. And you surely remember what “production hell” is all about. Doing that in less than a decade, for a whole ensemble of techniques that have never been fully integrated before, might be asking a lot.

I don’t know what you have planned for Optimus, but I can promise you that the AGI that you would need for a general-purpose domestic robot (where every home is different, and each poses its own safety risks) is way beyond what you would need for a car that drives on roads that are more or less engineered the same way from one town to the next. (If you are curious, here are some thoughts on what a real foundation for AGI might look like.)

The fourth thing to realize is that we, and by this I mean humanity as a whole, still don’t really have an adequate methodology for building complex cognitive systems.

Complex cognitive systems have too many moving parts, which often means that people building things like driverless cars wind up playing a giant game of whack-a-mole, solving one problem only to create another. Adding patch upon patch works sometimes, but sometimes it doesn’t. I don’t think we can get to AGI without solving that methodological problem, and I don’t think anybody yet has a good proposal.

Debugging with deep learning is wicked hard, because nobody (a) really understands how it works, or (b) knows how to fix problems other than to collect more data, add more layers, and so forth. The kind of debugging you (and everyone else) know from classical programming just doesn’t really apply; because deep learning systems are so uninterpretable, one can’t think through what the program is doing in the same way, nor count on the usual processes of elimination. Instead, right now in the deep learning paradigm, there’s a ton of trial-and-error, retraining, and retesting, not to mention loads of data cleaning and experiments with data augmentation and so forth. And as Facebook’s recent and very candid report of its travails in training the large language model OPT showed, there is a whole lot of mess in the process. You should check out their logbook on GitHub.

Sometimes it all feels more like alchemy than science. As usual, xkcd said it best:

Things like program verification might eventually help, but again, in deep learning we don’t yet have the tools for writing verifiable code. If you are to win the bet, we are probably going to have to solve that problem too, and soon.

The final thing is a matter of criteria. If we are going to bet, we should make some ground rules. The term AGI is pretty vague, and that’s not good for either of us. As I offered on Twitter the other day: I take AGI to be “a shorthand for any intelligence ... that is flexible and general, with resourcefulness and reliability comparable to (or beyond) human intelligence”.1

If you are in for the bet, we ought to operationalize it in practical terms. Adapting something Ernie Davis and I just wrote at the request of someone working with Metaculus, here are five predictions, arranged from easiest for most humans to those that would require a high degree of expertise:

In 2029, AI will not be able to watch a movie and tell you accurately what is going on (what I called the comprehension challenge in The New Yorker, in 2014). Who are the characters? What are their conflicts and motivations? etc.

In 2029, AI will not be able to read a novel and reliably answer questions about plot, character, conflicts, motivations, etc. Key will be going beyond the literal text, as Davis and I explain in Rebooting AI.

In 2029, AI will not be able to work as a competent cook in an arbitrary kitchen (extending Steve Wozniak’s cup of coffee benchmark).

In 2029, AI will not be able to reliably construct bug-free code of more than 10,000 lines from natural language specification or by interactions with a non-expert user. [Gluing together code from existing libraries doesn’t count.]

In 2029, AI will not be able to take arbitrary proofs from the mathematical literature written in natural language and convert them into a symbolic form suitable for symbolic verification.

Here’s what I propose: if you (or anyone else) manage to beat at least three of them in 2029 [later clarification, italics added June 2024: with a single system; three separate systems would obviously not count as general], you win. If only one or two are broken, we can’t very well say we have nailed the general part in artificial general intelligence, can we? In that case, or if none are broken, I win.

Deal? How about $100,000?

If you are in, reach out so we can agree to the ground rules.

Yours sincerely,

Gary Marcus

Author, Rebooting AI and The Algebraic Mind

Founder and CEO, Geometric Intelligence (acquired by Uber)

Professor Emeritus of Psychology and Neural Science, NYU

Update, 7 June 2022: A week later, we still haven’t heard from Elon Musk, but there has been extensive news coverage, and the bet is up to $500,000, with Vivek Wadhwa first among several to match my initial bet. Kevin Kelly has offered to host it on LongNow.org.

Shane Legg, a co-founder of DeepMind, who coined the term in its current usage, in the context of a book that Ben Goertzel was putting together, said he was happy with my definition.

Great article... if you subscribe to the viewpoint that "AI" ~== deep learning-like techniques.

There are others of us toiling in poorly funded, poorly acknowledged areas, for example cognitive architectures, from which full causal abilities, intrinsic and ubiquitous analogical reasoning, and almost all the other things you bemoan as missing seem to emerge automatically (i.e., from the architecture itself).

AGI will definitely occur by 2029. But it won't be via deep learning-like techniques which have taken over industry, taken over academia, and taken over the imagination of the technical and lay world.

Great insights as always. One proposal: for the five "By 2029" tests, we can probably condense them into one flagpost for AGI:

"In 2029, AI will not be able to read a few pages of a comic book (or graphic novel, or manga, however you wish to name the kind of publication where sequentially arranged panels depicting individual scenes are strung together to tell a story) and reliably tell you the plot, the characters, and why certain characters are motivated. If there are humorous scenes or dialogues in the comic book, AI won't be able to tell you where the funny parts are."

Taking disjoint pieces of information and putting them together through the workings of the mind is how comprehension happens: essentially, we make up stories for ourselves to make sense of what comes across our perceptual systems. Hence the comprehension challenge, I feel, is how the Strong Story Hypothesis (God bless the gentle soul of Patrick Winston) manifests when we talk about evaluating AI: can AI understand its inputs by creating a narrative that makes sense?