Doug Lenat was one of the most brilliant, acerbically funny people I have ever met. If people like Marvin Minsky, John McCarthy, and Allen Newell were among the first to think deeply about how symbolic AI, in which machines manipulate explicit verbal-like representations, might work, Doug was the first to try really hard to make it actually work. I have spent my whole career arguing for consilience between neural networks and symbolic AI, and on the strictly symbolic side of that equation, Lenat was light-years ahead of me, not just more deeply embedded in those trenches than I, but the architect of many of those trenches.

Lenat spent the last 40 years of his life launching and directing a project called Cyc, an intense effort to codify all of common sense in machine-interpretable form. Too few people thinking about AI today even know what that project is. Many who do write it off as a failure. Cyc (and the parent company, Cycorp, that Lenat formed to incubate it) never exploded commercially – but hardly anybody ever gives it credit for the fact that it is still in business 40 years later; very, very few AI companies have survived that long.[1]

My own view is that Cyc has been neither a success nor a failure, but somewhere in between: I see it as a ground-breaking, clarion experiment that never fully gelled. No, Cyc didn’t set the world on fire, but yes, it will seem more and more important in hindsight, as we eventually make real progress towards artificial general intelligence.

Most young AI researchers have never even heard about it. But every single one of them should know something about Cyc. They don’t need to like it, but they should understand what it was, and what it tried to do, and what they might do instead to accomplish the same goals.

Not because Cyc will get used out of the box, as some sort of drop-in replacement for Large Language Models, but because what Lenat tried to do – to get machines to represent and reason about common sense – still must be done. Yejin Choi’s wonderful 2023 TED talk, Why AI is incredibly smart and shockingly stupid, followed directly in that tradition, explaining why common sense is still lacking in current AI systems, despite their apparent success. (My 2019 book with Ernie Davis, Rebooting AI, was very much on the same topic.)

Metaphorically, Lenat tried to find a path across the mountain of common sense, the millions of things we know about the world but rarely articulate. He didn’t fully succeed – we will need a different path – but he picked the critical mountain that we still must cross.

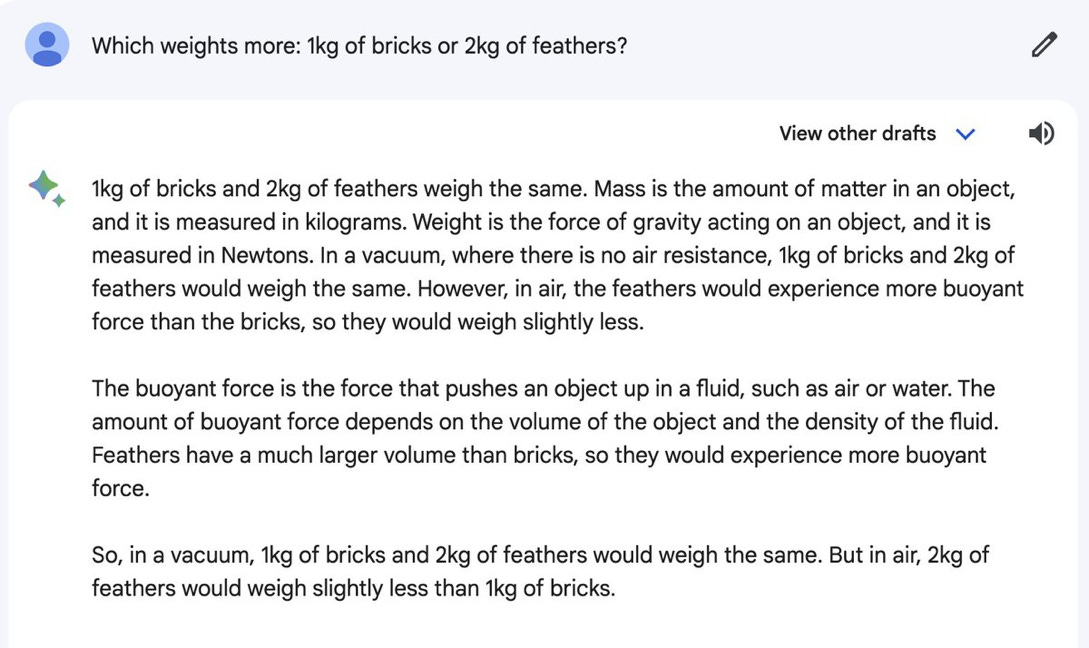

That’s what Lenat, Choi, Davis, and I have all been trying to say, and it’s exactly where Large Language Models struggle, over and over. To take but one of a zillion constantly changing examples, this very morning someone sent me an example of Google Bard mixing together truth with obvious nonsense, in completely fluent paragraphs:

Depending on the wording, any given model might or might not get questions like that right on any given day; Large Language Models tend to be accurate in some wordings, inaccurate in others. From them we sometimes see an illusion of common sense, depending on the vagaries of what the training set is and the precise wording of a question, but something is obviously still missing. And even if this specific example is patched up, there will inevitably be others of a similar flavor. Cyc is an effort to find a deeper, more robust answer.

As the AI researcher Ken Forbus of Northwestern University put it to me in an email this morning, “The Cyc project was the first demonstration that symbolic representations and reasoning can scale to capture significant portions of commonsense. While today billion-fact knowledge bases are common in industry, Cyc remains the most advanced in terms of expressiveness, capturing more of the range of thoughts that humans are capable of. My group has been using Cyc’s representations in our research for decades… Our field would do well to learn more from the Cyc project.” A Google researcher, Muktha Ananda, Director of their Learning Platform, wrote condolences to me this morning, “I have always been a great admirer of [Lenat’s] vision, perseverance and tenacity. His work on Cyc was a great source of inspiration for my own journey into knowledge graphs/webs.”

§

Over the last year, Doug and I tried to write a long, complex paper that we never got to finish. Cyc was both awesome in its scope, and unwieldy in its implementation. The biggest problem with Cyc from an academic perspective is that it’s proprietary. To help more people understand it, I tried to bring out of him what lessons he learned from Cyc, for a future generation of researchers to use. Why did it work as well as it did when it did, why did it fail when it did, what was hard to implement, and what did he wish that he had done differently? We had nearly 40,000 words, sprawling, not yet fully organized, yet filled with wisdom. It was part science, part oral history. Needless to say, it takes a long time to organize and polish something of that length. In between our other commitments, we were making slow but steady progress. And then in the new year, I got busy with AI policy, and he got sick; progress slowed. Closer to the end, he wrote a shorter, tighter paper, building in part on the work we had done together. When he realized that he did not have much time left, we agreed that I would help polish the shorter manuscript, a junior partner in what we both knew would likely be his last paper.

One of his last emails to me, about six weeks ago, was an entreaty to get the paper out ASAP; on July 31, after a nerve-wracking false start, it came out on arXiv: Getting from Generative AI to Trustworthy AI: What LLMs might learn from Cyc. The brief article is simultaneously a review of what Cyc tried to do, an encapsulation of what we should expect from genuine artificial intelligence, and a call for reconciliation between the deep symbolic tradition that he worked in and modern Large Language Models.

In his honor, I hope you will find time to read it.

Gary Marcus has focused his career on integrating neural networks and symbolic approaches; he still hopes that will happen.

[1] For perspective, Babylon Health, a medical AI company once valued at about $2 billion, went bankrupt yesterday, at the age of 10; Argo.ai, also once valued at billions, lasted six.

OMG, so sorry :(

I had the honor, privilege, to be on Cyc for a brief while - I cherish those memories to this day.

RIP, dear Doug :(

Thank you for this beautiful tribute.