Elon Musk thinks this is funny:

I think it is terrifying. Everyone—and not just The Information—should be genuinely terrified that the richest man in the world has built a Large Language Model that spouts propaganda in his image.1

This isn’t free speech. It’s a massive, massive thumb on the scale, from one of the most powerful yet unelected people in the world.

If automating Orwell’s Ministry of Truth in the service of the current White House doesn’t scare you, you aren’t paying attention.

§

The problem here, by the way, isn’t just the blatant propaganda, but also the more subtle stuff that people may not even notice.

When I spoke to the United States Senate in May 2023, I mentioned preliminary work by Mor Naaman’s lab at Cornell Tech that shows that people’s attitudes and beliefs can subtly be influenced by LLMs.

A 2024 follow-up study replicated and extended that work, reporting that:

“Our results provide robust evidence that biased AI autocomplete suggestions can shift people's attitudes. In addition, we find that users - including those whose attitudes were shifted - were largely unaware of the suggestions' bias and influence [boldface added]. Further, we demonstrate that this result cannot be explained by the mere provision of persuasive information. Finally, we show that the effect is not mitigated by raising awareness of the task or of the AI suggestions' potential bias.”

In short, LLMs can influence people’s attitudes; people may not even know they have been influenced; and even if you warn them, they can still be affected.

Grok 3 is designed to be a nuclear propaganda weapon, and Musk is proud of it.

§

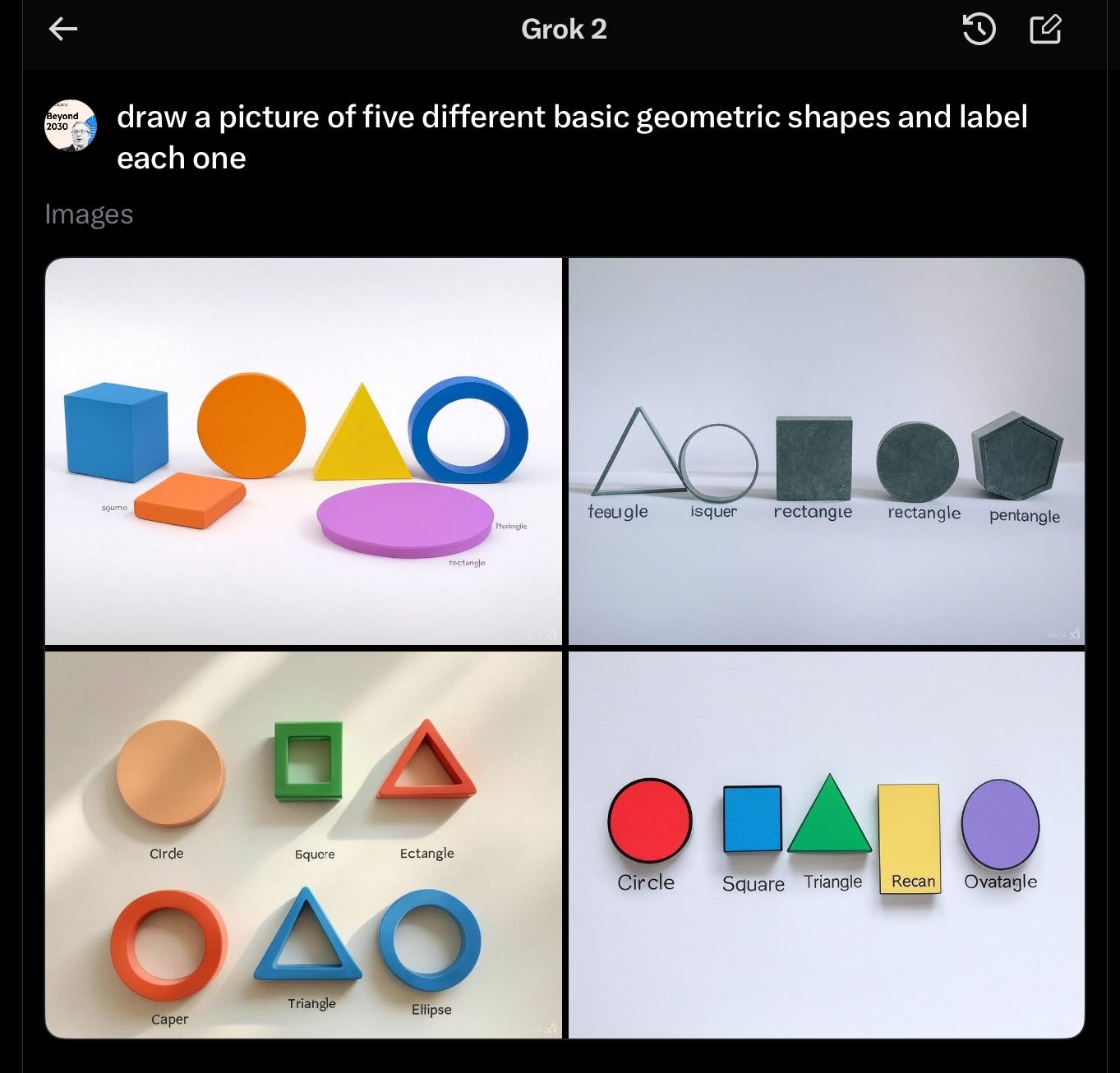

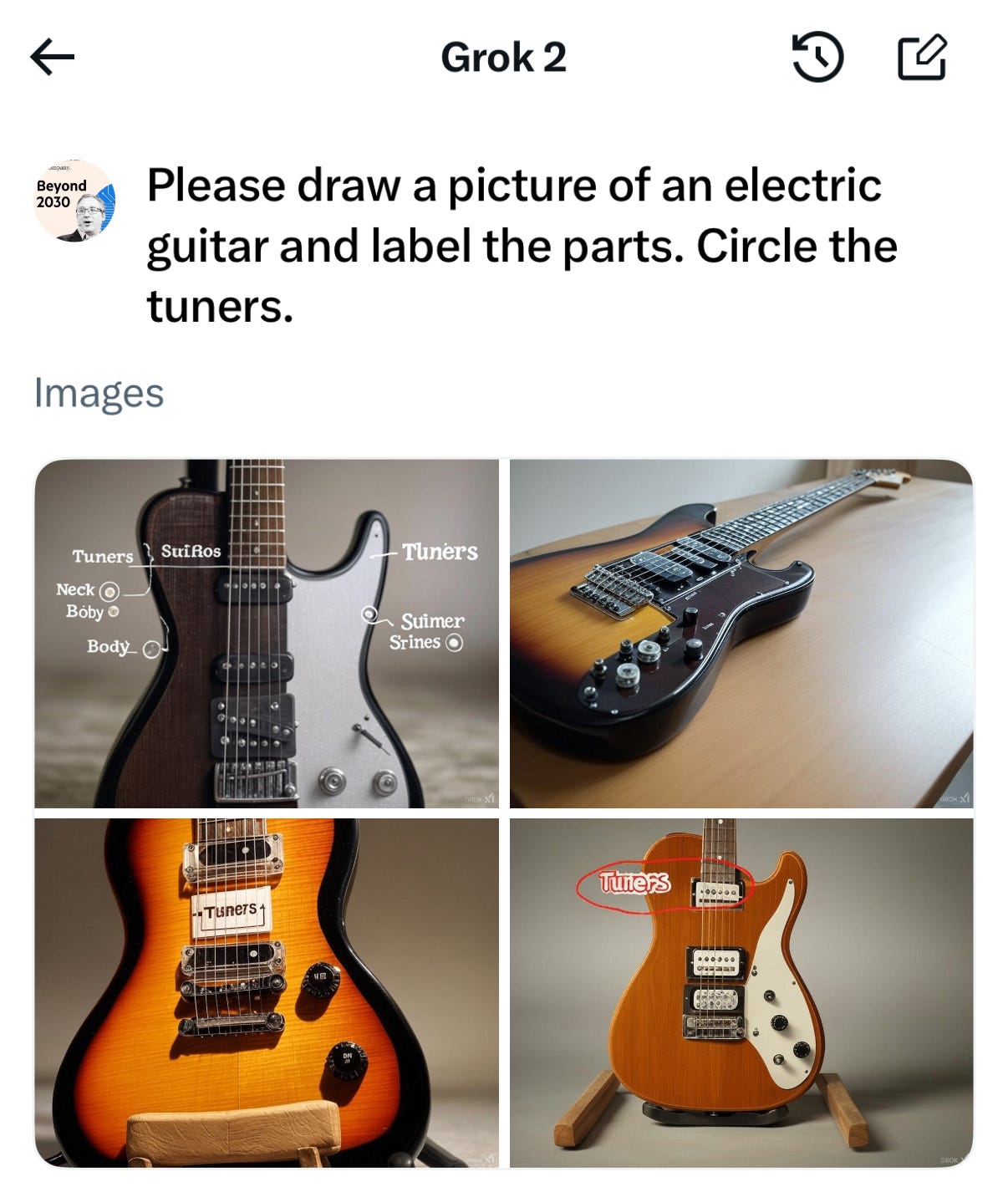

Meanwhile, while we are on the subject of Musk and Grok, did I mention that Grok 2 (the new version, which is not yet available for testing, comes out tomorrow), or at least its image generation, can be remarkably dumb?

Over the last couple of days I have been asking version 2 to draw images identifying and tagging parts of images. The results are consistently awful:

It’s not just drawings, either. A new Edinburgh study bears out the concerns that Davis and I have expressed for almost a decade about LLMs and temporal reasoning, reporting that “despite recent advancements, reliably understanding time remains a significant challenge for M[ultimodal] LLMs.”

§

Yet Musk wants to shove problematic AI, both biased and unreliable, down our throats, immediately, as reported by The New York Times and noted in this Bluesky comment:

§

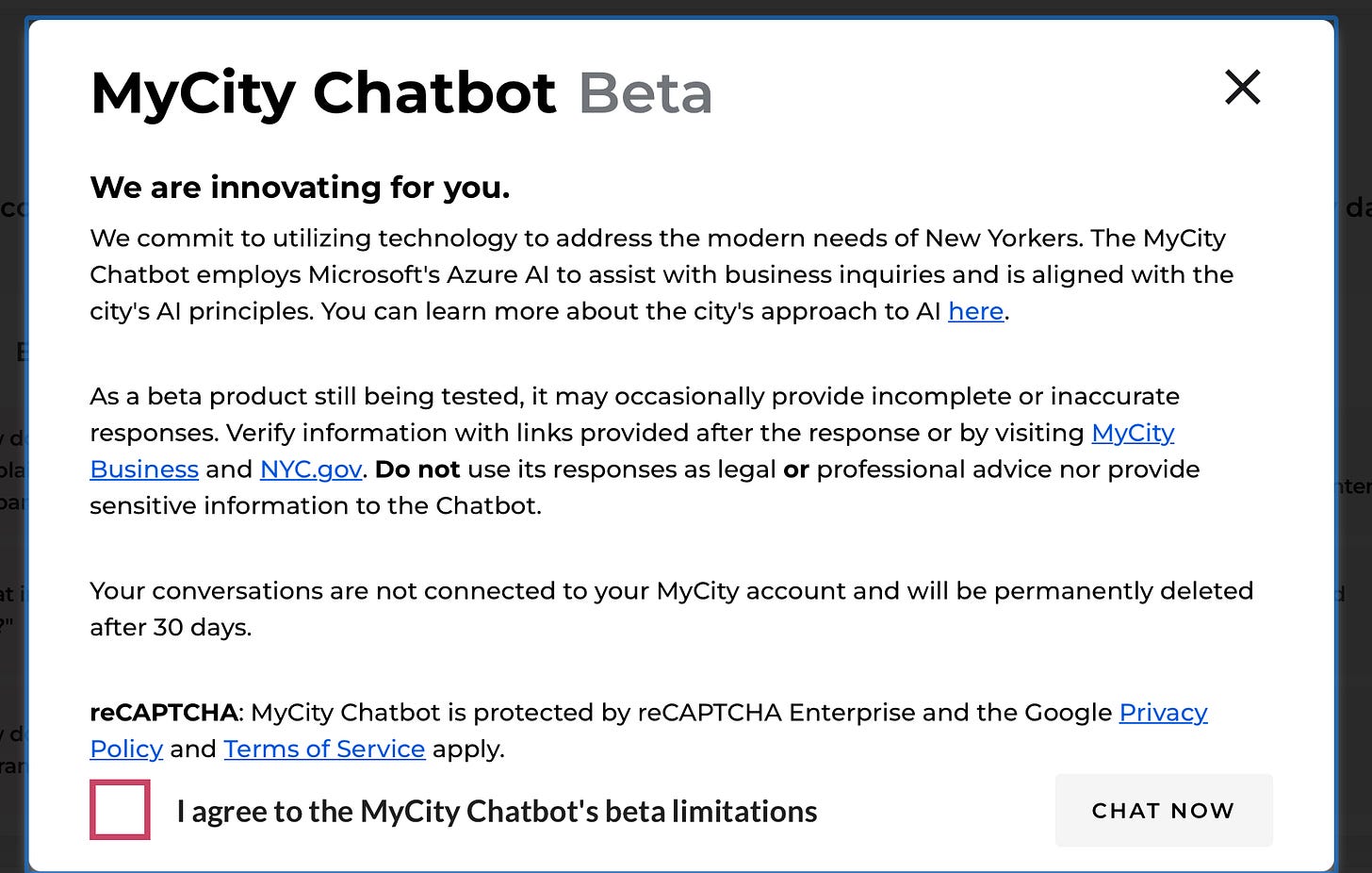

About a year ago the City of New York tried this kind of thing, with less-than-spectacular results:

It’s still there, albeit with a warning:

Soon such unreliable stuff, with butt-covering warning messages that most people will ignore, will be everywhere.

And it will be the citizens of the United States who bear the consequences, while the guy who stood to get a $400M armored T̶e̶s̶l̶a̶ EV government contract (until the media squawked) stands to collect a big slice of the profits, unemploying thousands of lifelong government employees.

This is not where we should be as a nation.

In his dark 2024 book, Taming Silicon Valley, Gary Marcus warned that tech oligarchs would take over the world, unless citizens protested loudly (spoiler alert: they didn’t). He had no idea that the dystopic oligarch transformation he warned about would become this pervasive this fast.

The image above slamming The Information is (allegedly) from Grok 3, which will be announced tomorrow; Grok 2 has a much less extreme view of The Information.

This is Chapter 10 of Timothy Snyder's book, On Tyranny: "To abandon facts is to abandon freedom."

The technocrats don’t care about how often LLMs make mistakes. They see them as hyper-personalized propaganda machines.