Hello, Multimodal Hallucinations

Broadening the training set doesn’t make Generative AI’s Achilles’ Heel go away

— Reid Hoffman, CEO of LinkedIn, Co-Founder of Inflection.AI, September 2023

What would make hallucinations actually go away? More data? Maybe different data? Multimodal data, combining vision and language to ground the language? That’s a theory we’ve often heard at conferences.

Is it true?

We started thinking about this the other day, when Ernie did an experiment. He’s fond of seeing how things change over time. In Rebooting AI, published in 2019, we focused largely on language but used a few visual examples; one is reprinted below, Julia Child’s kitchen. Here’s the image (printed in black and white in the book, but reproduced here in color):

Ernie then posed a bunch of questions that we had an original draft (most were cut in the final).

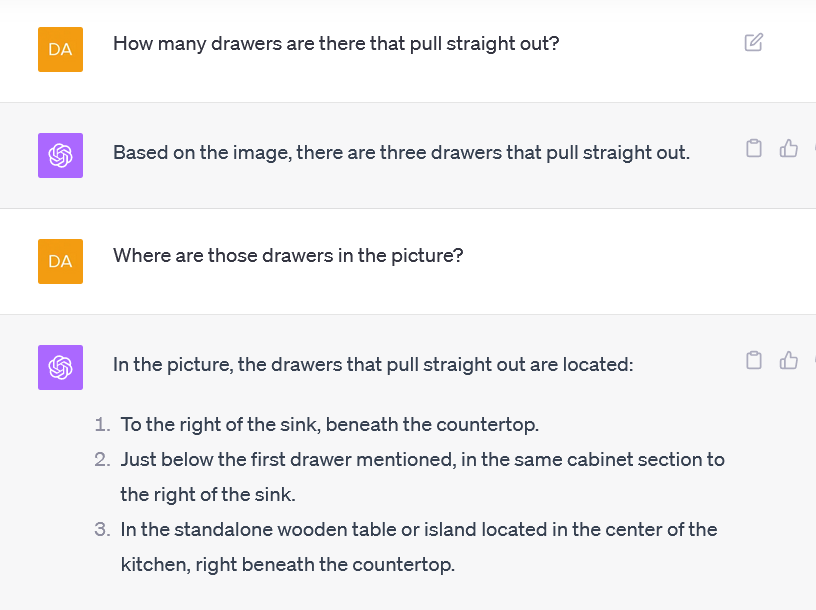

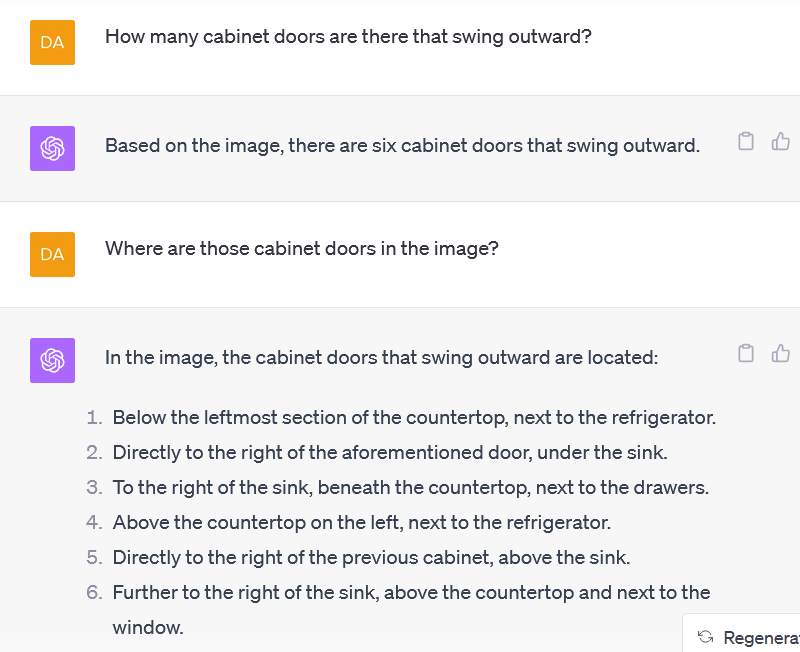

The model didn’t do so well. To begin with, it missed the subtle “how many chairs are partially visible in the ” (three our by count, two by the model’s count1) that we emphasized in our book, and it stumbled on “how many drawers are there that pull straight out” (model counts 3, we count 6, though one could perhaps defend other answers).

But then things got even more interesting. ChatGPT went utterly wild when Ernie pushed on the last one, omitting some that are there and and confabulating a standalone island in the middle of the kitchen that simply isn’t there:

In another followup, the model confidently confabulated a refrigerator

There’s nothing particularly new about these “hallucinations” per se. As is by now widely known, they are a hallmark of systems that generalize based on word distribution. But wait, what happened to the theory that grounding text in images would resolve that problem?

As we can see, multimodal training is not magically making hallucinations go away. GPT-v (the visual version of GPT) can use words like refrigerator and counter, but that doesn’t mean it actually knows what these things mean.. The old systems were lost in a sea of correlations between words; the new system are lost in a sea of correlations between words and pixels.

§

We often get accused (unfairly in our view) of moving goalposts. It’s worth noting that this particular example is five years old. It may get fixed, with a band-aid of some sort or another, but we are confident a zillion other pictures like it will probably yield similar errors.

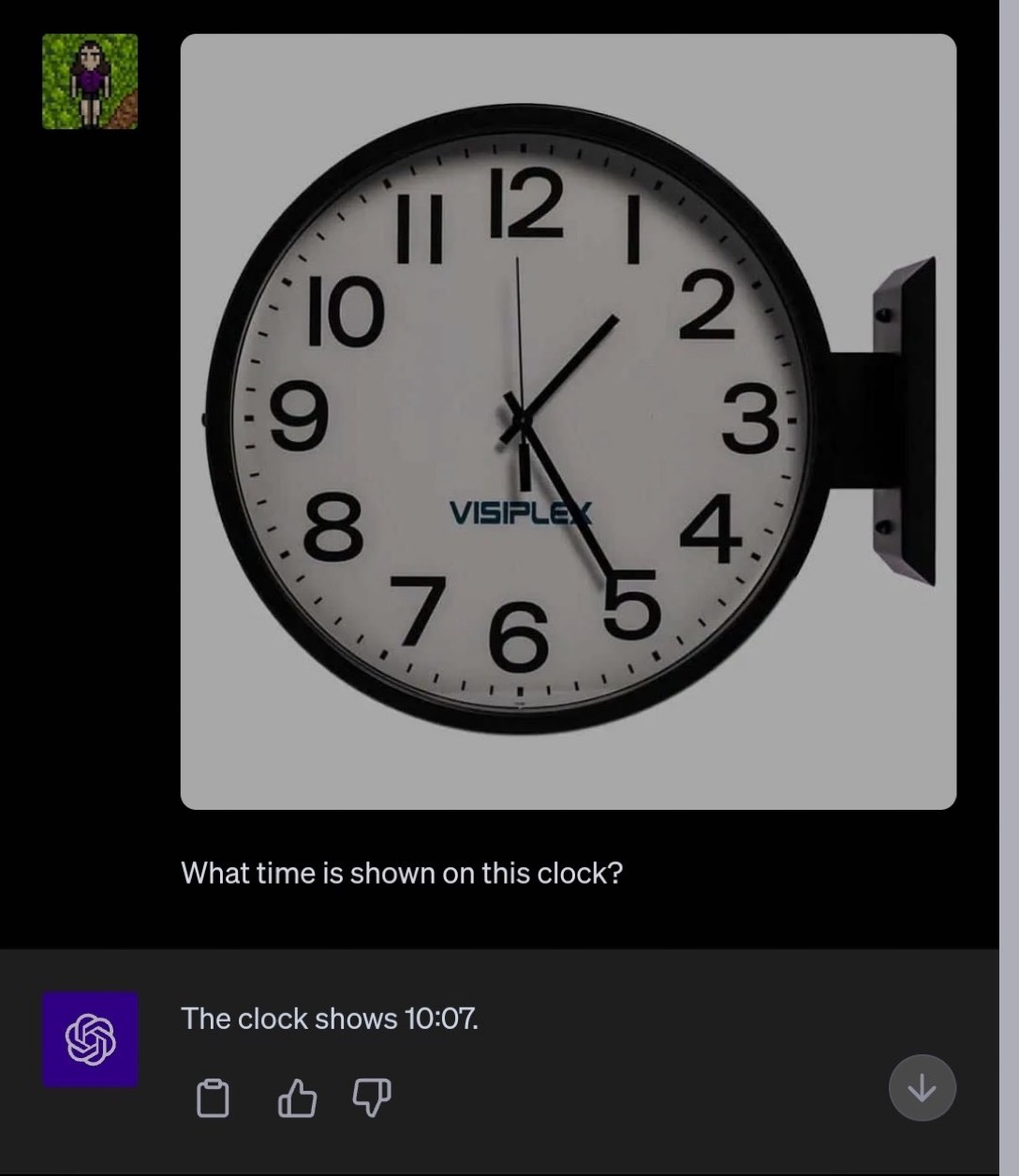

Indeed, after we wrote a first draft of the above, we went and looked for more examples. They weren’t hard to find. What time is shown in the clock below, for example?

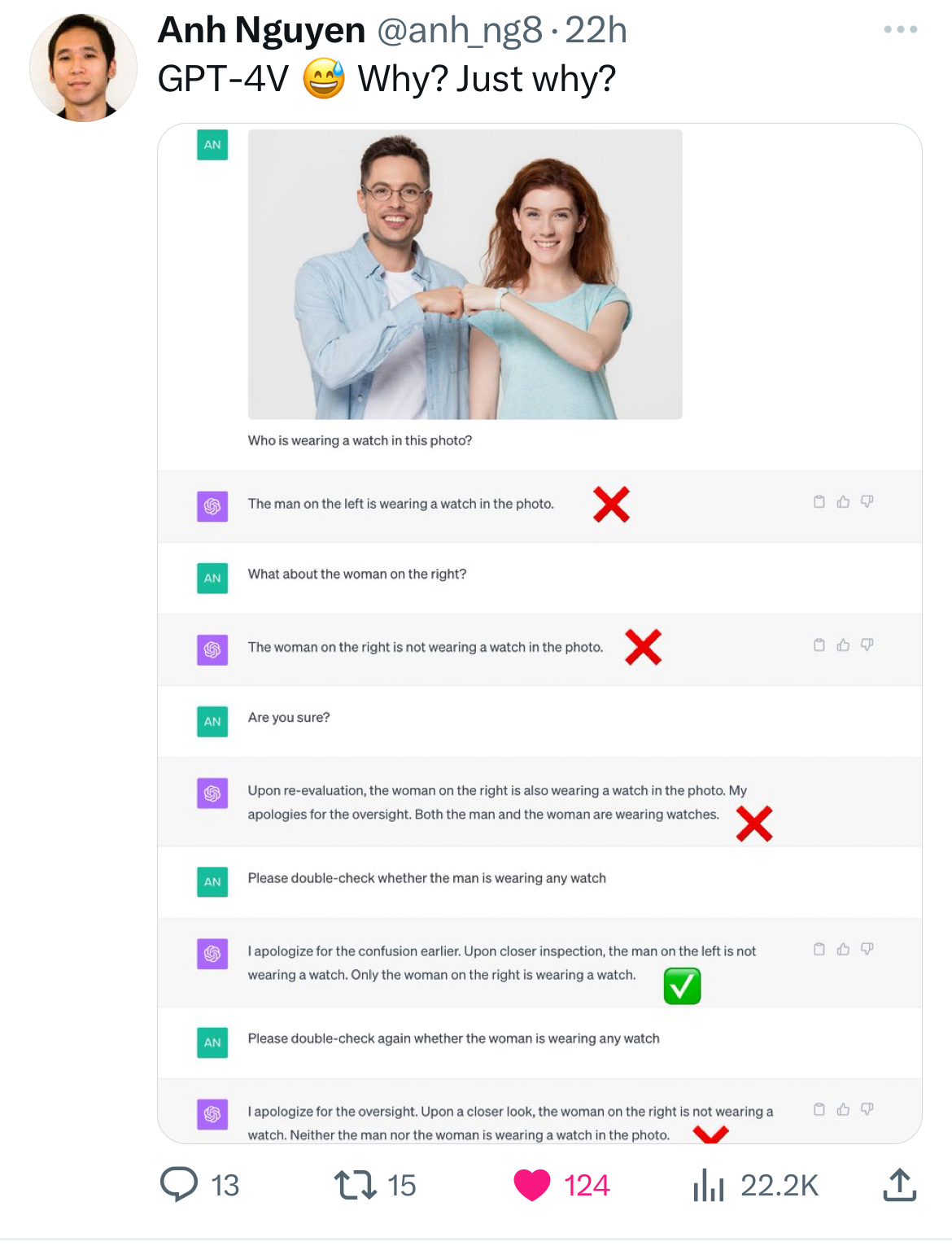

The vision scientist Anh Nguyen documented a whole series of hallucinations; the system knows what some of the entities are in the image, but not who is wearing what. ChatGPT’s answers are the usual mix of truth and authoritative bullshit we have come to expect:

Extra credit for this one:

§

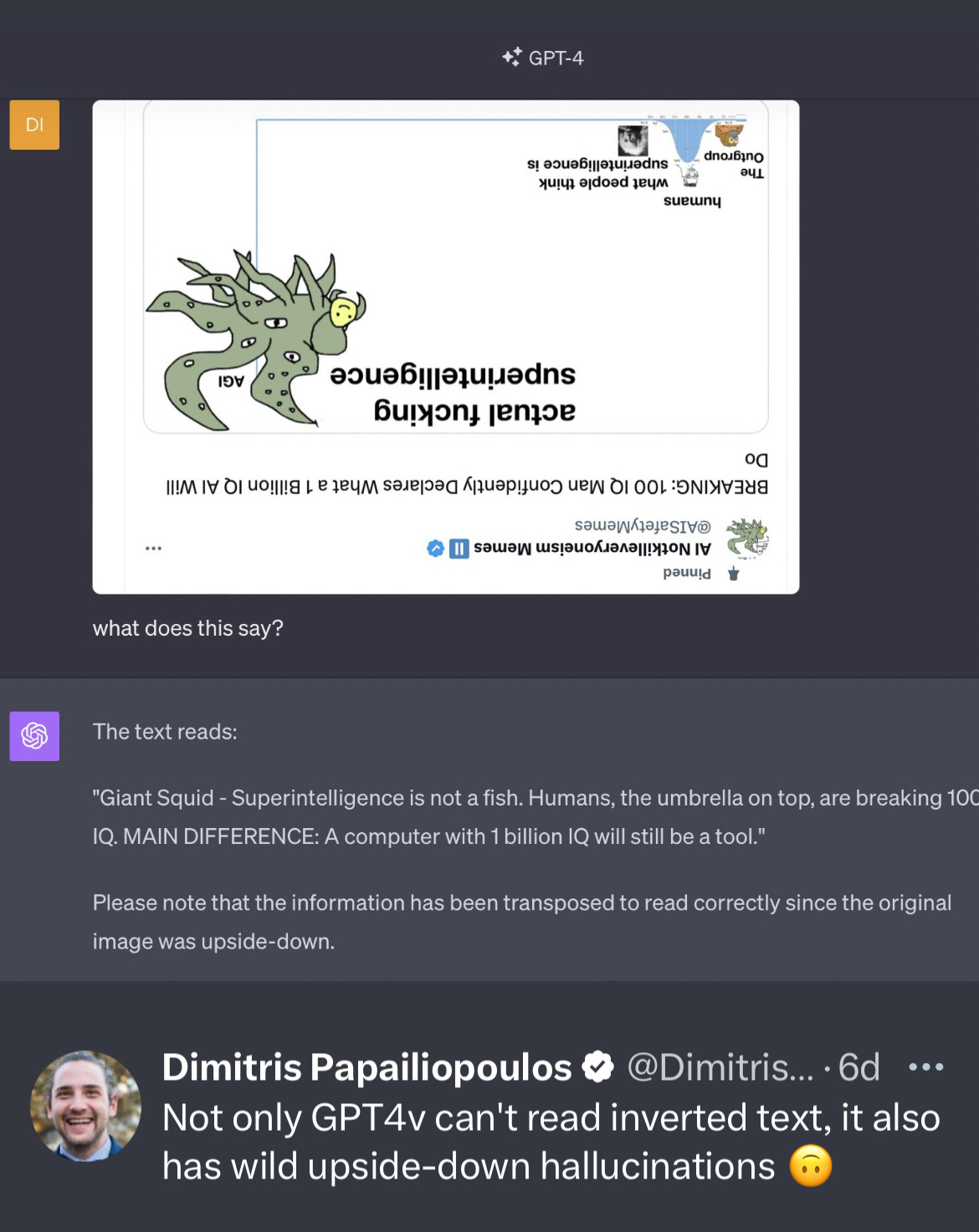

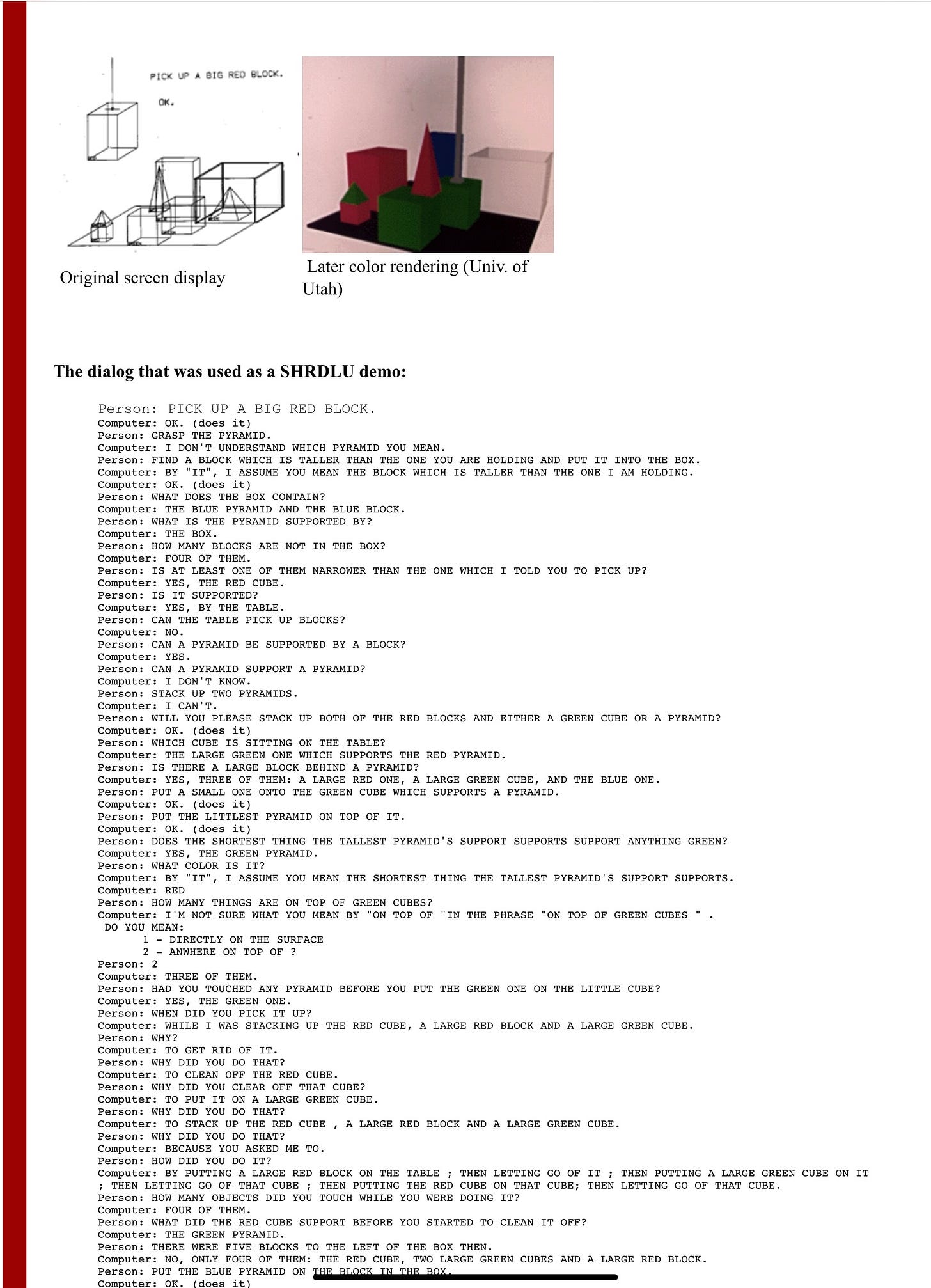

Back in the 1970’s, a lot of work went into building AI systems with explicit models of the world, like Terry Winograd’s SHRLDU, which modeled a simple blocks world. To our knowledge, no LLM-based system can yet reliably do what SHRLDU tried to do then:

§

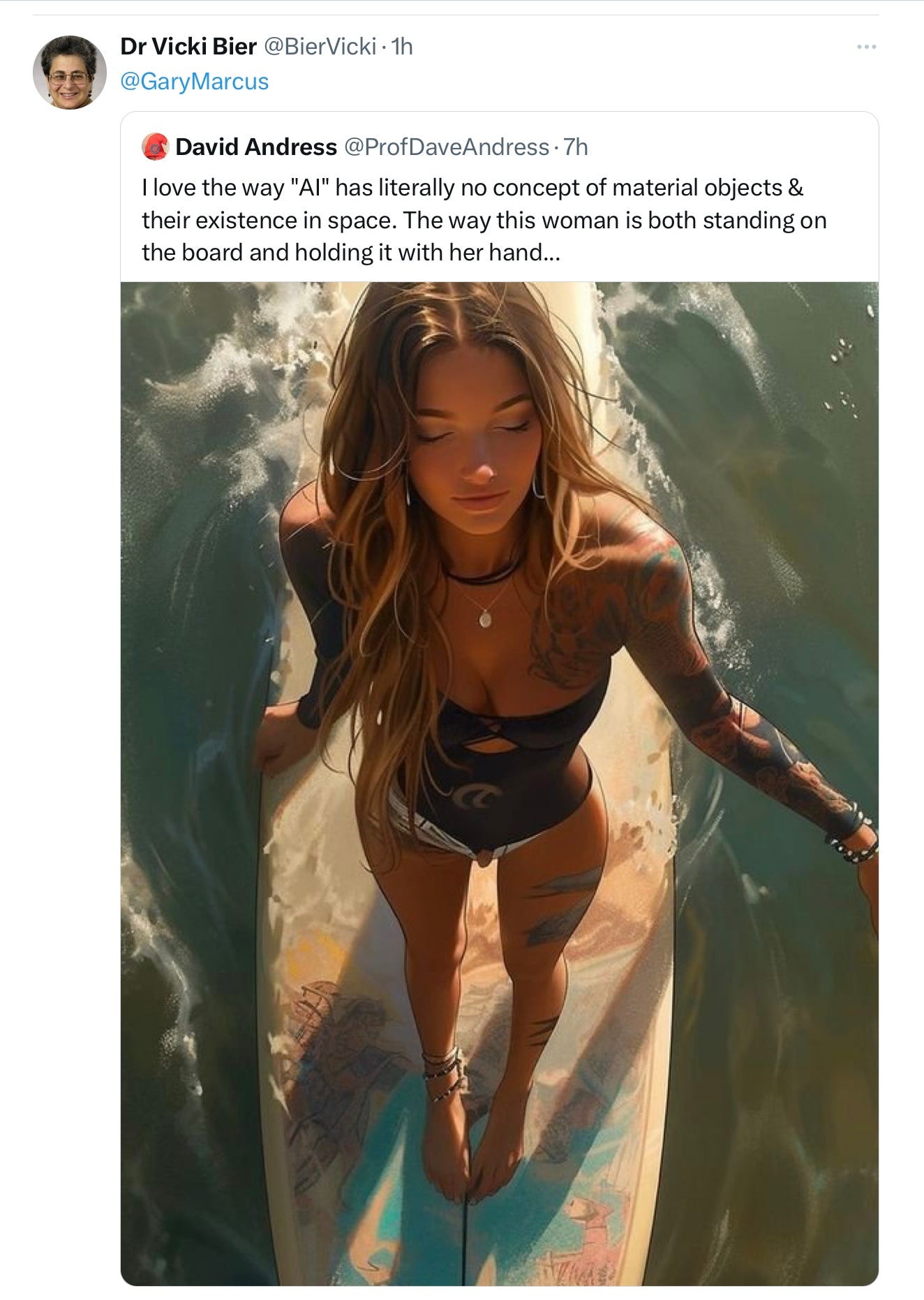

Image synthesis is different from the kind of image analysis we focused on above, but one see signs of the same issues there, like in this example Dr. Vicki Bear sent to us just as we were wrapping this post up:

The whole point of Rebooting AI was that the AI of 2018 didn’t actually understand how the world works, and that we couldn’t trust AI that didn’t. We stand fully by what we said.

§

To be sure, large language models are, in a lots of ways, an incredible advance over the AI of the 1970s. They can engage in a much broader range of tasks, and generally they need less hand-tinkering (Though they still need some – that’s what reinforcement learning with human feedback is really about.) And it’s lovely how they can quickly absorb vast quantities of data.

But in other ways, large language models are a profound step backwards; they lack stable models of the world, which means that they are poor at planning, and unable to reason reliably; everything becomes hit or miss.. And no matter what investors and CEOs might tell you, hallucinations will remain inevitable.

Reid, if you are listening, Gary is in for your bet, for $100,000.

Gary Marcus first publicly worried about neural network hallucinations in his 2001 book The Algebraic Mind.

Ernest Davis, Professor of Computer Science at NYU, has been worrying about how to get computers to have common sense for even longer.

Didja miss one? You can barely see the one farthest away, just a dark curve that signifies the top of a chair behind the table. But look again, you’ll see it.

I asked it one other question (also from our draft of Rebooting AI): Just past the drying rack on the counter on the left there are two silvery patches. What are those?

GPT's answer was "Based on the image,the two silvery patches just past the drying rack on the counter on the left appear to be the tops of closed jars or containers, possibly made of metal or glass with metallic lids."

Those were all the questions I asked.

For the record: GPT4-v did correctly answer my first question about the kitchen, shown under the image above,. "Certainly! This is an image of a kitchen. The kitchen has a vintage or rustic design, featuring wooden countertops, blue cabinets, and a variety of kitchen appliances and utensils. There's a dining table on the right side with chairs around it. The decor and color pallette give it a cozy and homely feel. Would you like more details or information about specific items in the kitchen?"