I am not afraid of robots. I am afraid of people.

Some thoughts on AI risks, near-term and long-term, some recent controversies in AI, and why we are in trouble if we can’t find a way to work together

I’m scared. Most people I’ve spoken to in the AI community in the last few weeks are. I’m more concerned about bad outcomes for AI now than at any other point in my life. Many of us who have worked on AI are either feeling remorse or concerned that we might soon come to feel remorse; Ezra Klein wrote about a delegation of people from big AI companies speaking to him about their off-the-record fears. I am putting my fear here, on the record.

Probably each of us is different. Some are frightened about some eventual superintelligence that might take over the world; my current fears have less to do with recent advances in technology per se, and more to do with recent observations about people.

I wasn’t feeling this way a year ago. Sure, I was skeptical that large language models would lead to general intelligence, I was worried about the coming threat of misinformation, and I was worried that we were wasting time on what LeCun later described as an off-ramp. But I still saw the AI community writ large as growing up, increasingly recognizing the value of responsible AI. Forward-thinking conferences like the ACM’s FAccT (Fairness, Accountability, and Transparency) were growing in size and influence. Corporations like Microsoft were putting out statements on responsible AI; I thought they had learned their lesson from the Tay debacle. Google was holding off on releasing LaMDA, knowing it wasn’t fully ready for prime time. On a 1-10 scale of least worried to most worried, I was at a 6. Now I am at an I-don’t-think-about-much-else 9.

§

Greed seemed to increase in late November, when ChatGPT started to take off. $ signs flashed. Microsoft started losing cloud market, and saw OpenAI’s work as a way to take back search. Nadella started to seem like a villain out of a Marvel movie, declaring in February that he wanted to make Google “come out and show that they can dance”, and “I want people to know that we made them dance.”[1]

OpenAI, meanwhile, more or less finalized its transformation from its original mission to a company that is, for all practical purposes, a for-profit: heavily constrained by the need to generate a financial return, working around Microsoft’s goals, and placing far less emphasis on the admirable humanitarian goals of its initial charter. Its $10 billion deal with Microsoft earlier this year accelerated that transition.

Then along came Kevin Roose’s infamous batshit crazy mid-February conversation with Sydney; I thought for sure Microsoft would take Sydney down after that. But they didn’t. Instead they put up some risible band-aids (like limiting the lengths of conversations) and declared on 60 Minutes (the same early-March episode in which I appeared) that they had solved the problems in 24 hours. The reality is far more complex. (For example, one can still easily jailbreak Bing and get it to produce egregious misinformation.)

The real reason I signed The Letter was not because I thought the moratorium had any realistic chance of being implemented but because I thought it was time for people to speak up in a coordinated way.

At some level the petition worked; Capitol Hill, for example, pretty clearly got the message that we can’t sit around forever on these issues. But in other ways, I have even more concerns than before.

§

The main thing that worries me is that I see self-interest on all sides, not just among the corporate players, when what we really need is coordinated action.

What we need coordinated action on is actually pretty obvious, and it was spelled out perfectly clearly in the letter. Contrary to rumor, the letter didn’t call for a ban on research; it called for a shift in research.

> AI research and development should be refocused on making today's powerful, state-of-the-art systems more accurate, safe, interpretable, transparent, robust, aligned, trustworthy, and loyal.

Who on earth could actually object to that? Whether or not you want a pause, the reality is that large language models remain exactly what I have always described them to be: bulls in a china shop, powerful, reckless, and difficult to control. Making new systems that are “more accurate, safe, interpretable, transparent, robust, aligned, trustworthy, and loyal” should literally be at the top of everyone’s agenda.

No sane person should have opposed that prescription.

§

Of course, the proposed moratorium as a whole could have reasonably been criticized at a lot of levels.

It was definitely too hypey around the imminence of artificial general intelligence (I held my nose on that point); there is no obvious way to ensure compliance; China might go ahead anyway; and we don’t even know how to evaluate the proposed ban’s main criterion. (The ban called for halting systems more powerful than GPT-4, presumably as indexed by size, and we don’t even know how large GPT-4 is; again, I held my nose, having seen the letter only after a bunch of signatories had already signed on.)

To be honest, I would have preferred my own proposed moratorium, co-written a month earlier with Canadian Member of Parliament Michelle Rempel Garner, which called for a temporary ban on widescale deployment (not research) until we could put in place a regulatory infrastructure modeled on the way we establish the safety of medicines, with phased rollouts and safety cases.

But by the time I saw the FLI letter, it had already been finalized and perhaps 30 significant people had signed on. Instead of publicly criticizing the proposal, or pushing my own, I felt that building a coalition was important. I saw that folks like Yoshua Bengio, focused as much on current reality as I am, and Steve Wozniak (who doesn’t seem to have any particularly vested interest) had signed on. There was a chance to build bridges—not just with an echo chamber of my besties (like Grady Booch, with whom I am roughly 97% aligned), but with people I often disagree with, like Musk and Bengio.

As I have said twice now in closing at the AGIdebates, it is going to take a village to raise an AI. If we want to get to AI we can trust, probably the first thing we are going to need is a coalition.

§

But nooooooo, as John Belushi used to say on Saturday Night Live. A coalition was not in the cards.

Instead we got a shitstorm, like the shitstorm of the 1968 Chicago Democratic Convention, which did nothing for the Democrats, and everything for their opponent, Richard Nixon, who soon became President.

Instead of signing on, a lot of self-proclaimed AI ethicists decided to dump on the letter. A prominent and pretty typical example was the letter from Timnit Gebru’s DAIR, which went for blood rather than seeking common ground:

To be sure, the DAIR letter ultimately lands on a reasonable conclusion:

But there is still an unfortunate kind of imperialism, a view that their (very legitimate and important) cause—exploitative practices—outweighs all others; not “a focus” but “the focus.”

Why focus only on that, and not also on, say, the enormously dangerous potential of mass misinformation, which people like Renee DiResta and I have often emphasized, or the risks of cybercrime that Europol recently highlighted? Don’t each of these risks in part lead to a common cause (reining in errant software) that we all should consider?

Surely, after all the criticism that Gebru and Bender have leveled against LLMs they can’t think that building GPT-5 is all that great an idea. In their zeal to distance themselves from the Future of Life crowd, though, it seems to me that they missed an opportunity to decry even more powerful versions of systems that have already proven problematic. This opportunity has been lost; I hope there will be more of a spirit of collaboration as subsequent proposals emerge.

For now, all the technolibertarians are probably cackling; if they had wanted to sabotage the “develop AI with care” crowd, they couldn’t have found a better way to divide and conquer.

In truth, over 50,000 people signed the letter, including a lot of people who have nothing to do with the long-term risk movement that the FLI itself is associated with. These include, for example, Yoshua Bengio (the most cited AI researcher in recent years), Stuart Russell (a well-known AI researcher at Berkeley), Pattie Maes (a prominent AI researcher at MIT), John Hopfield (a physicist whose original work on machine learning has been massively influential), Victoria Krakovna (a leading researcher at DeepMind working on how to get machines to do what we want them to do), and Grady Booch (a pioneering software architect who has been speaking out about the unreliability of current techniques as an approach to software engineering).

But a few loud voices have overshadowed the 50,000 who have signed.

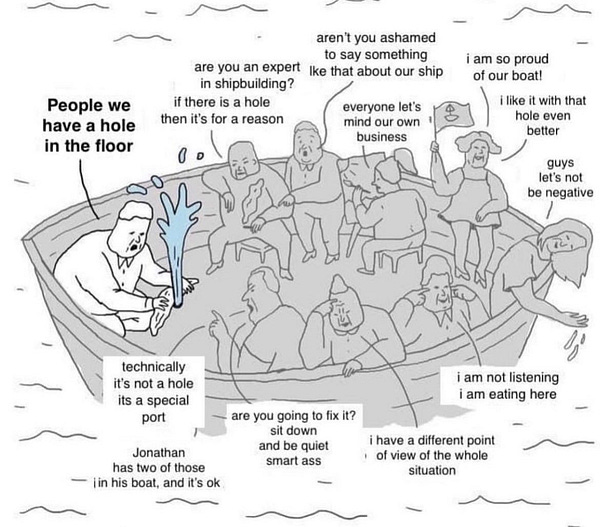

I have rarely seen a cartoon that better captures how I am feeling than this one:

§

And then there were the tech bros, spouting nonsense, like Coinbase CEO Brian Armstrong:

Back in the real world, of course there is disagreement and marketplaces of ideas and troubles with democracy, but there are also tons of examples of successful regulation, like seatbelt laws, which have saved something like 300,000 lives. A bunch of people have tried to tell me on Twitter that regulation simply isn’t possible.

Anders Sandberg quietly nailed this one:

And Elon Musk was right on last night, too:

§

As Andrew Maynard quite rightly put it on Twitter a couple of days ago, “there are no silver bullets here”; no single silver bullet is going to solve all that ails AI.

Which is why either/or thinking (thinking my problems are more important than your problems) is deadly.

We can’t stop looking at cancer because people die in car accidents, nor vice versa. A world in which cancer researchers belittled auto safety researchers (or vice versa) is not one I would want to live in. But that’s basically what’s happening in AI: I “won’t sign your letter, because some people who signed your letter have worried about some risks that I happen to think are more pressing than some of the risks that your letter happened to emphasize.”

That kind of thinking is not a recipe for success.

§

This is what we actually need:

The sooner we stop bickering and start getting to it, the better.

Gary Marcus (@garymarcus), scientist, bestselling author, and entrepreneur, is deeply, deeply concerned about current AI but really hoping that we might do better.

Watch for his new podcast, Humans versus Machines, debuting later this spring.

[1] Nadella also made his own team dance: "The pressure from [CTO] Kevin [Scott] and [CEO] Satya [Nadella] is very, very high to take these most recent OpenAI models and the ones that come after them and move them into customers hands at a very high speed," according to a Corporate VP at Microsoft, per a report in Platformer.
