No, Sora has not “learned physics”

Some subtleties that eluded the All-In podcast

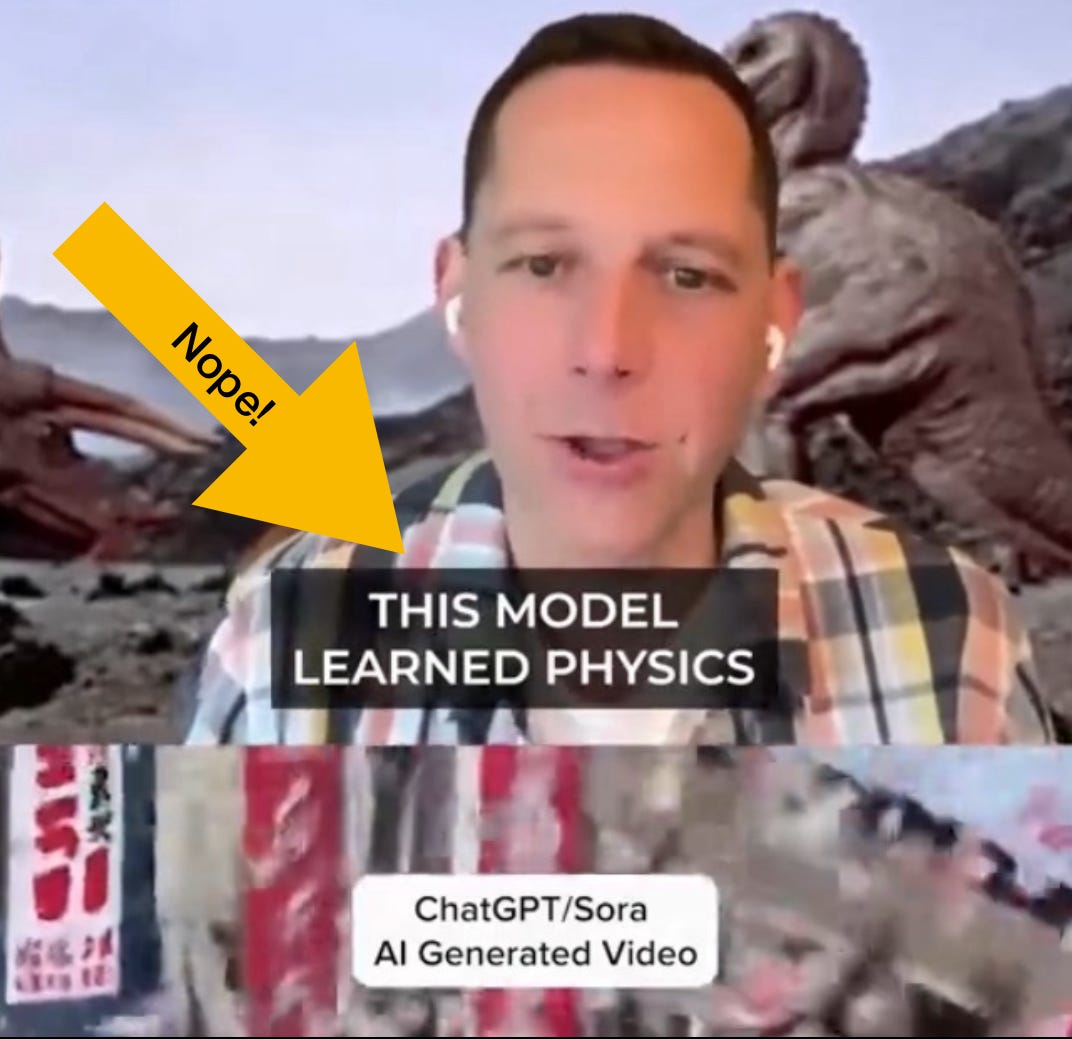

According to David Friedberg on the popular All-In Podcast, Sora has “learned physics, on its own”. (Brief excerpt here, h/t Axel Coustere).

I disagree. Sora has not learned physics, and is not a reliable guide to how the world works. It is at best a semi-reliable guide to how the world looks.

These two—how the world works, and how the world looks—are fundamentally different.

A physics engine takes a list of objects, their locations, and other properties, computes how that state changes over time, and lets you render views of the result.

At its core is a bit of basic physics: object permanence, the fact that, other things being equal, objects tend to persist over time (even if, e.g., they are occluded from some perspective).
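To make the distinction concrete, here is a minimal sketch of the kind of loop a physics engine runs. It is a toy, not how any real engine (or Sora) is implemented, and every name in it (`Ball`, `step`, `render`, `simulate`, the `wall` interval) is made up for illustration. The point is only that the state is a list of objects, the update acts on objects, and rendering is a separate step that can hide an object without deleting it.

```python
from dataclasses import dataclass


@dataclass
class Ball:
    # Hypothetical one-dimensional object: a name, a position, a velocity.
    name: str
    x: float   # horizontal position
    vx: float  # horizontal velocity


def step(objects, dt):
    """Advance every object's state by dt. Nothing is created or destroyed."""
    return [Ball(o.name, o.x + o.vx * dt, o.vx) for o in objects]


def render(objects, wall=(4.0, 6.0)):
    """Return the names of objects the camera can see.

    Assumption for this toy: anything whose position falls inside the
    `wall` interval is occluded, so it is left out of the view.
    """
    lo, hi = wall
    return [o.name for o in objects if not (lo <= o.x <= hi)]


def simulate(objects, dt=1.0, steps=8):
    """Run the update loop, recording (object count, visible names) per frame."""
    frames = []
    for _ in range(steps):
        objects = step(objects, dt)
        frames.append((len(objects), render(objects)))
    return frames


frames = simulate([Ball("ball", 0.0, 1.0)])
for count, visible in frames:
    print(count, visible)
```

In this run the ball drops out of the rendered view for the three frames in which it is behind the wall, yet the object count stays at one throughout, and the ball reappears on the far side where its velocity says it should. A system that predicts pixels has no such object list to consult.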

Sora absolutely fails at object permanence 101. People, animals, limbs, etc. routinely wink in and out of existence willy-nilly, as a function of predictions about pixels rather than as the result of computations over the states of physical entities.

Other videos feature chairs levitating, basketballs passing through rims and exploding, glasses leaping spontaneously into the air, people spontaneously changing size, etc., in what I earlier called Sora’s Surreal Physics. No actual physics is ever modeled, and the entities depicted are not constrained by the laws of physics. They do as pixels do, not as collections of atoms must.

Room geometry is similarly flawed, as you can see if you watch the restaurant video carefully. Continuity of pixels always beats physical modeling. Image prediction, not physics.

You also wind up with humans with six fingers, humans with three arms, weird walking gaits, ants with four legs, etc., because there is no underlying model of the physiological structure of an ant or a human being.

It is precisely because Sora is not a physics engine that a large fraction of Sora's videos are inconsistent with physics or biology, in very basic ways.

Whenever there is a conflict between physics and image sequence prediction, image sequence prediction wins. The laws of physics are about objects, not pixels; Sora knows only about pixels and image spaces, not objects.

Predicting image frames is (for some purposes) a fine shortcut or approximation, but physics it ain't.

Gary Marcus admires Sora’s rapid video synthesis, but thinks that claims about how it models the world are confused.

I have a saying (which may or may not be entirely fair, but it's what I increasingly feel): "90% of everything that is said or written about AI [by humans] is crap. For AGI that number increases to 99%. For consciousness it increases to 99.9%". There is now so much BS out there that it's hard to cope, even for me, and I've been working in AI since the mid-80s. What's really worrying is that normal people, including policymakers, have no way of distinguishing AI BS from AI reality.

Here’s a test of whether people _really_ think Sora understands physics. Would they be willing to ride in an airplane piloted by Sora? I wouldn’t. Consider the fate of the two Boeing 737 MAX 8 aircraft that crashed because their flight-control software wouldn’t let the pilots safely override a bad sensor reading. And that was ordinary software, where the coders painstakingly gave each instruction. Imagine an AI getting the physical situation wrong and deciding it needed to modify the control signals to the control surfaces.