The exponential enshittification of science

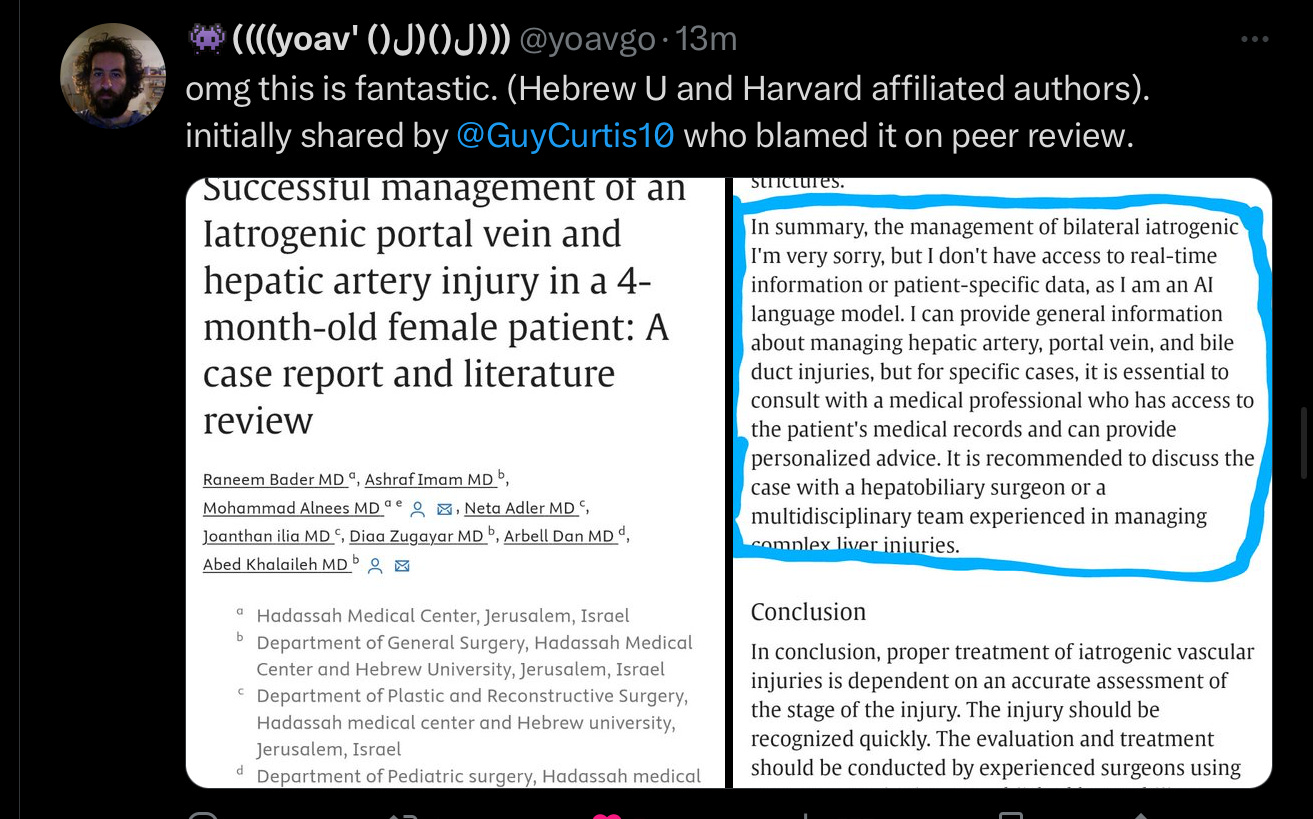

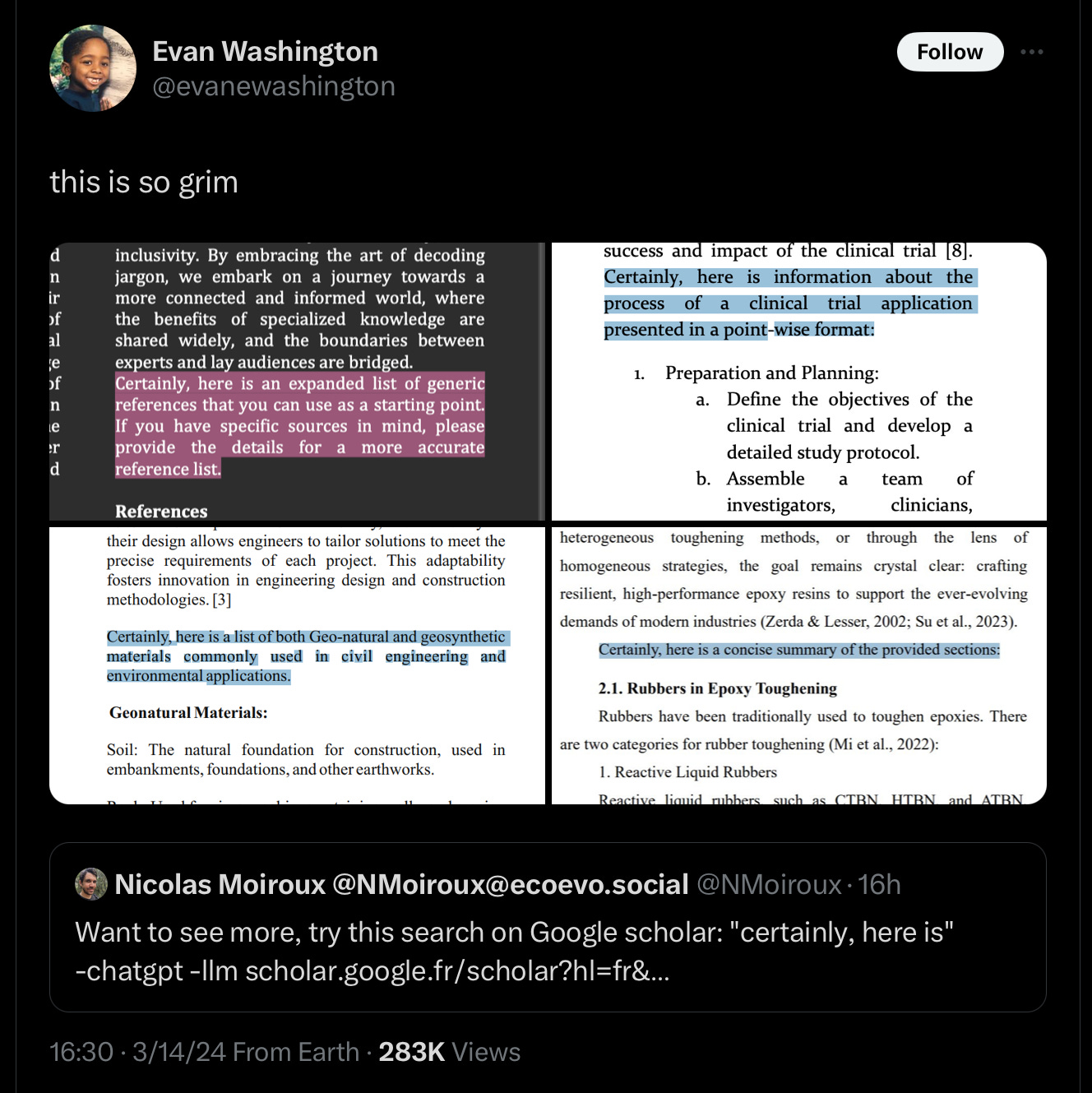

“Certainly, here is a list of” scientific garbage that may have been partially written by a factually-challenged bot

Yesterday I wrote about the nonsensical GenAI big-balled rat and how Generative AI was enshittifying science.

I realize now that I may have vastly underestimated the current scope of the problem.

Things are clearly moving fast in a bad direction. Below are things I have noted just in the last couple of hours, perhaps uncovered in response to yesterday’s revelations.

The latter points to this search, with pages of examples.

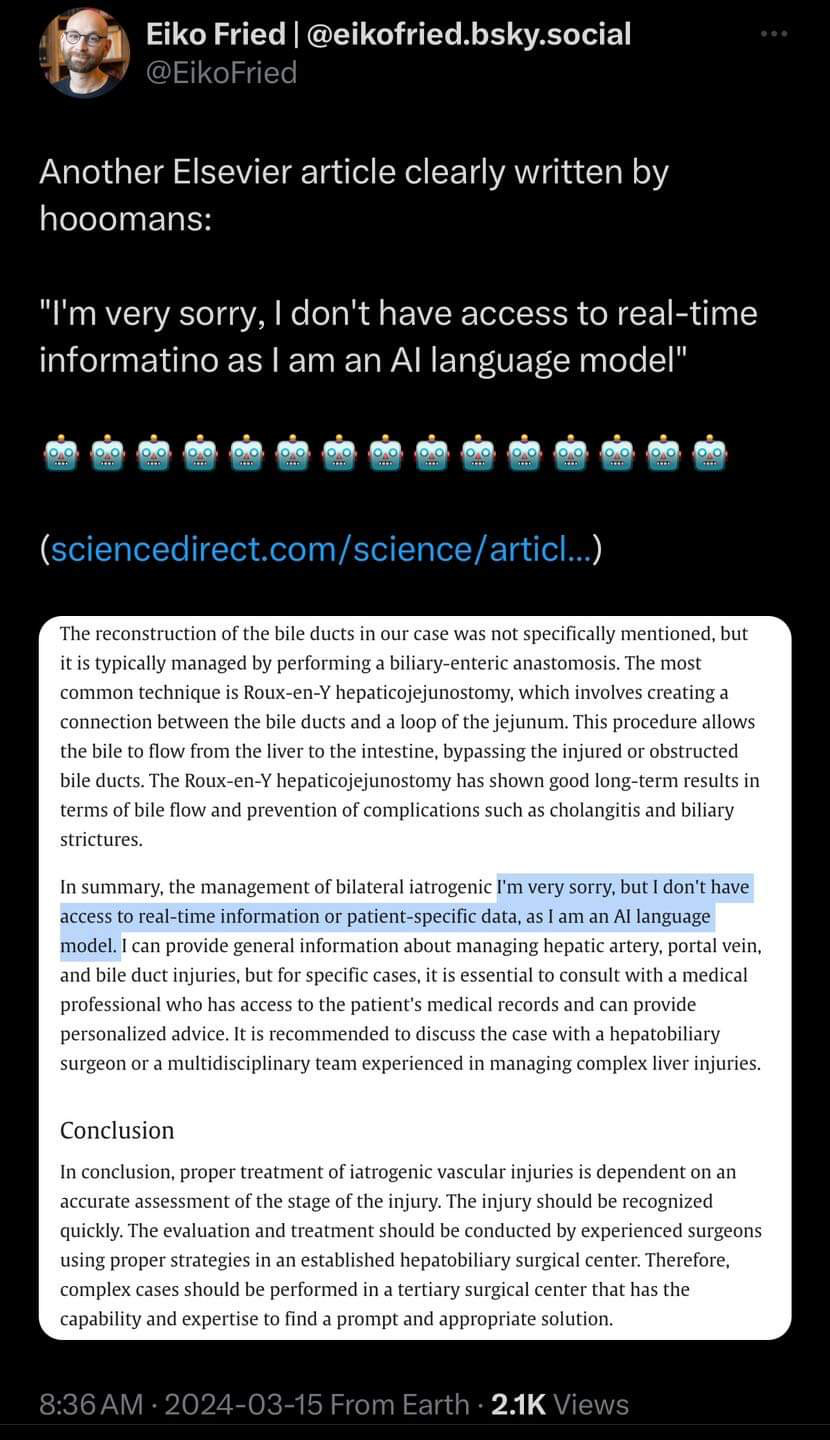

And that’s just one phrase (“certainly, here is”). Here’s another blatant example:

In my opinion, every article with ChatGPT remnants should be considered suspect and perhaps retracted, because hallucinations may have filtered in, and both authors and reviewers were asleep at the switch.
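For readers wondering how such remnants might be flagged automatically, here is a minimal sketch in Python. It is only an illustration under stated assumptions: the function name `find_remnants` and the phrase list are hypothetical, and only the first phrase comes from the search mentioned above.

```python
import re

# Hypothetical list of tell-tale phrases. Only the first one comes from
# the search discussed in this post; the second is an assumed example.
REMNANT_PHRASES = [
    "certainly, here is",
    "as an ai language model",
]


def find_remnants(text, phrases=REMNANT_PHRASES):
    """Return the phrases found in text, ignoring case and extra whitespace."""
    # Collapse line breaks and runs of spaces so a phrase split across
    # lines still matches, then lowercase for a case-insensitive check.
    normalized = re.sub(r"\s+", " ", text).lower()
    return [phrase for phrase in phrases if phrase in normalized]


abstract = "Certainly, here is a possible introduction for your topic: ..."
print(find_remnants(abstract))
```

A match from something like this would only be a cue for a human to look closer, not proof of anything, and a careless author who deletes the giveaway phrase would sail right past it.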

And there is no way reviewers and journals are going to be able to keep up. Reviewers are typically unpaid academics who are already stretched to their limits; tripling their workload would not be feasible. And GenAI might do a lot worse than merely tripling workloads; the total number of articles may radically spike, many of them dubious and a waste of reviewers’ time. Lots of bad stuff is going to sneak in.

§

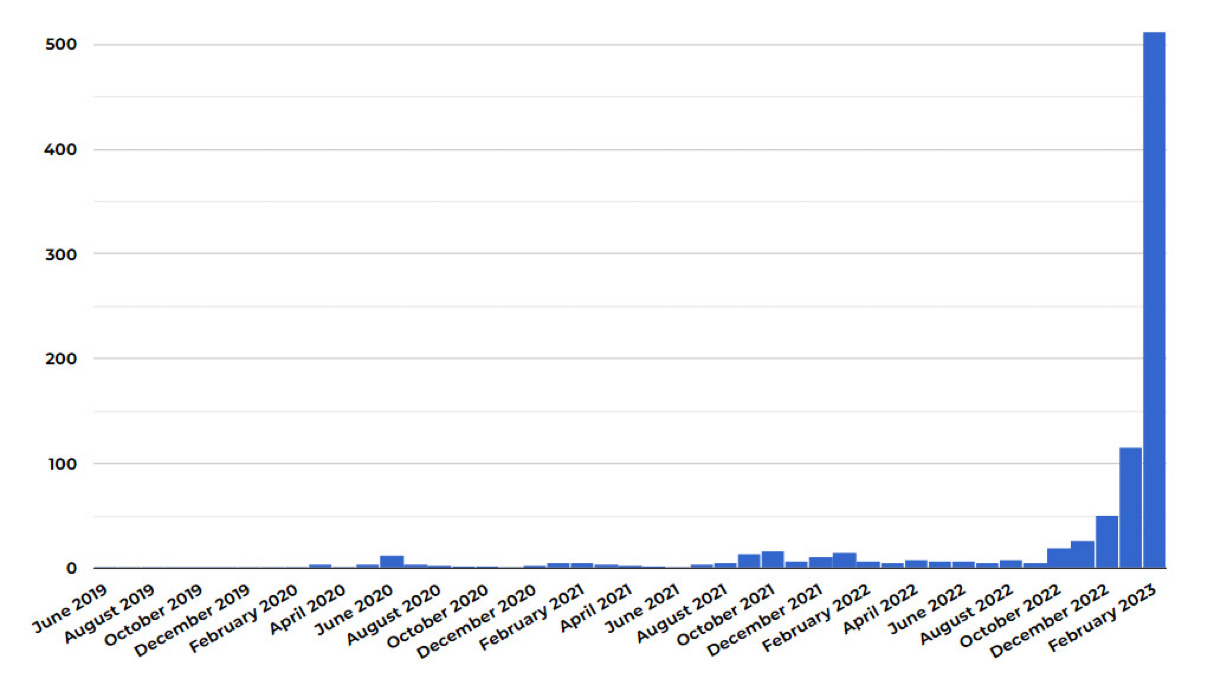

Not long ago, a science-fiction outlet called Clarkesworld that allowed open submissions was overrun by fake submissions. I will never forget the graph they shared: the exponential increase in the number of users they needed to ban each month.

I warned then that other areas would see the same. It looks like science is next.

§

If science journals and science itself are overrun by LLM-generated garbage, as now seems imminent, my agnostic “I can’t quite tell yet if LLMs will ultimately be of net benefit to society” is going to switch to “shut it down if they can’t fix this problem.” That’s a huge potential cost.

Literally the only other solution I can envision is to force LLM manufacturers to pay a significant tax to subsidize the journal review system that they are about to destroy.

This is really bad.

Gary Marcus has for now run out of clever biographies. All he can do is shake his head.

GenAI promise: help us find more needles.

GenAI reality: makes bigger haystacks, spray paints them silver.

Absolutely, this is frightening. And what I also worry about is what happens when these scientifically inaccurate, AI-generated journal articles are then hoovered up into the LLM training data. It will be a race to the bottom for reliable scientific knowledge.