Has tech become the new politics?

Here’s some first-class AI doublespeak, straight from the Ministry of Damage Control, mostly from just this past week:

Except when those wrong answers are not.

Very useful. Very cool.

§

Pay no attention to the messes we make, says OpenAI[1]:

§

And by the way, no need to worry, says OpenAI, because our models can reason:

True, LLMs don’t (just) memorize, and true, their models make for lousy databases (for a database, a hallucination is an outright failure). But if reasoning means obtaining valid conclusions from known facts, GPT-4 frequently falls short there, too.

So-called “reasoning” by free association, even constrained by a giant database, isn’t really reasoning:

§

Here’s another form of Orwellian posturing. Microsoft’s website tells you this:

But their actions speak differently.

§

Once upon a time (February 27, to be specific) OpenAI promised to take good care of us little people:

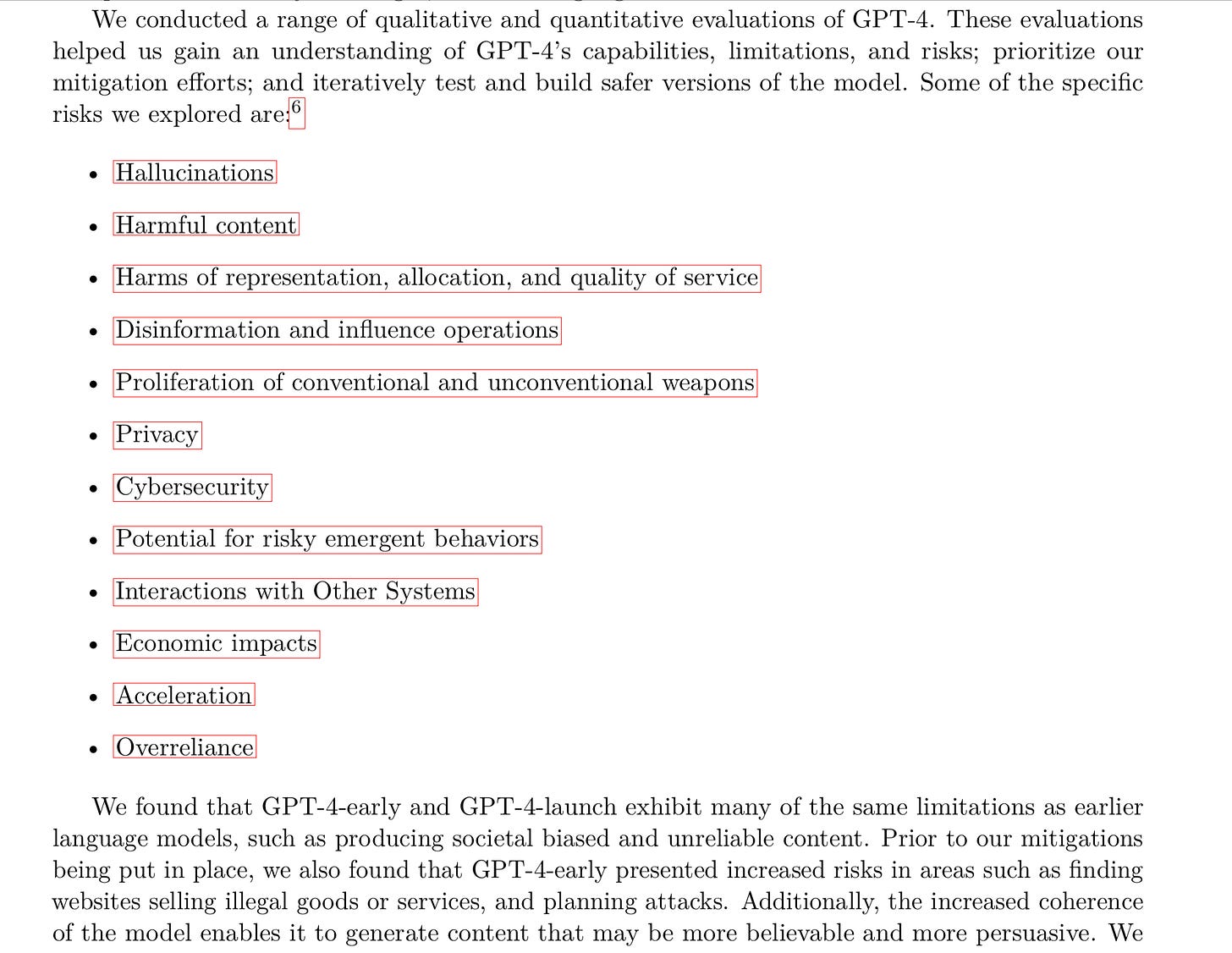

Nowadays, what we get instead is the old “It’s not our fault; we warned you things might go wrong” excuse:

Nabil Alouani nails what’s really going on:

Note that the same logic applies to essentially every potential harm OpenAI recently warned of. None are solved, and we are told nothing about how the models work.

Good luck, humans!

Gary Marcus (@garymarcus), scientist, bestselling author, and entrepreneur, is deeply concerned about current AI but genuinely hopeful that we might do better.

Watch for his new podcast, Humans versus Machines, this spring.

The line attributed to Greg Brockman (“it’s not perfect, but neither are you.”) does not appear in the actual news story (aside from the headline), but does appear in the live demo from which the news story was drawn.

Funny how the risk posed by AI is exactly high enough for them to carefully guard their methods, but never so high that they have to refrain from releasing potentially profitable products.

* “. . . we create a reasoning engine, not a fact database…” Gaslighting.

* Brockman's "neither are you" - extraordinary comment.

Anymore, big profile actors often seem like manure spreaders, whether in politics or business.