The best argument that Tesla might not survive if its massive bet on self-driving fails comes from Elon Musk himself. He knows the score, and just said it out loud in a recent interview with three adoring fans: “Solving Full-Self Driving ... is really the difference between Tesla worth a lot of money and being worth basically zero.”

Can Tesla solve self-driving?

This morning’s fresh data dump from NHTSA should be a bit of a wake-up call to those who have gone long on Tesla thinking that a solution to Full Self-Driving is in sight. The data aren’t devastating, but they aren’t especially promising either, and they raise a lot of questions.

§

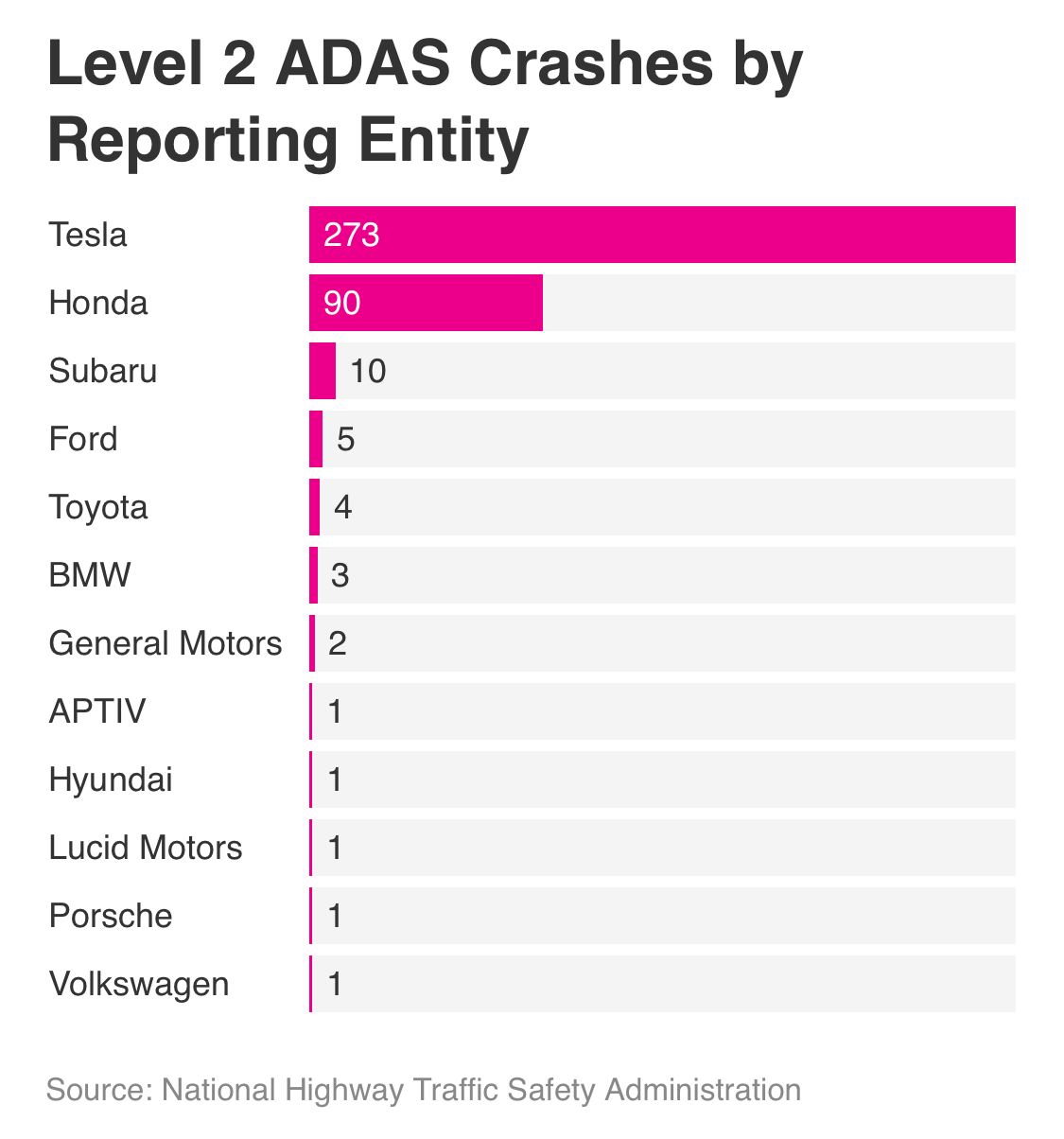

The biggest headline-catcher is probably the graph above, which Lora Kolodny and colleagues at CNBC captured vividly.

It’s not pretty for Tesla that, over the span measured (since July 2021), it has the most incidents involving its ADAS (advanced driver-assistance system).

That said, the graph is far from game over, for lots of reasons. As reporters such as Kolodny and, separately, Andrew Hawkins at The Verge, along with NHTSA itself, have all rightly noted, the new data lack context.

For one thing, it’s no surprise to anybody that Tesla has the most ADAS accidents: it is driving the most miles, so essentially we have a numerator without a denominator. For all we know, Tesla may be safer than, say, Honda, which might well have far fewer relevant miles. The data mainly pertain to incidents, not miles driven.

For another, there’s just no real comparability here. We don’t know much about what kinds of miles Tesla or anyone else drove: how much was highway, how much was in good weather, and so on.

One of the biggest takeaways here (I’ll give another in a second) is how impoverished even these new data are. It’s fantastic that NHTSA is now pushing manufacturers to release incident data, but we need soooo much more data (e.g., about miles driven) if we are to answer the money question (how safe is all this, really?) in a truly scientific way.
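To make the numerator-without-a-denominator point concrete, here is a minimal Python sketch. Every number in it is invented for illustration (NHTSA’s release did not include miles driven), and the fleet names “A” and “B” and the helper `incidents_per_million_miles` are hypothetical. It shows how the fleet with ten times as many reported incidents can still have the lower rate once you divide by exposure.

```python
# Hypothetical illustration only: all fleet names and figures are made up.
# Raw incident counts (numerators) can mislead without miles driven (denominators).

def incidents_per_million_miles(incidents: int, miles: float) -> float:
    """Return incidents per million miles driven."""
    if miles <= 0:
        raise ValueError("miles must be positive")
    return incidents / (miles / 1_000_000)

# Fleet "A" reports more incidents but (hypothetically) drives far more miles.
fleets = {
    "A": {"incidents": 200, "miles": 400_000_000},
    "B": {"incidents": 20, "miles": 10_000_000},
}

for name, data in fleets.items():
    rate = incidents_per_million_miles(data["incidents"], data["miles"])
    print(f"Fleet {name}: {data['incidents']} incidents, {rate:.2f} per million miles")
```

With these made-up inputs, fleet A works out to 0.50 incidents per million miles and fleet B to 2.00, the reverse of what the raw counts suggest. A real comparison would also need to control for the road and weather mix, which this sketch ignores.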

But, ok, what can we learn from today’s data dump?

§

Well, there is one important denominator, and some context around it. That denominator is the number of days that the data cover; it’s a bit less than a year.

So we can conclude that Tesla has had roughly 3 accidents every 4 days.
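The per-day figure is just that ratio worked out. The annualized number below is an extrapolation that assumes, purely for illustration, that the same rate holds over a full 365-day year:

$$
\frac{3\ \text{incidents}}{4\ \text{days}} = 0.75\ \text{incidents per day},
\qquad
0.75 \times 365 \approx 274\ \text{incidents per year}.
$$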

Is that good or bad? I don’t know for sure, but it’s enough to be concerning. Why? Because those 0.75 incidents a day come from a very limited trial of computer-assisted driving relative to the ambition of full self-driving. That trial is limited in a whole bunch of ways:

Only so many vehicles (perhaps a million, in very round numbers) even have Tesla’s Autopilot features installed.

None of those vehicles uses Autopilot full-time; it’s simply not available in all circumstances.

None of those vehicles is ever supposed to be driven without human intervention. The whole point of “Full” self-driving is that the car should be able to take you from point A to point B without requiring a human to pay attention at all. That’s hugely different from automatic braking, lane keeping, and so forth, in which a human is still in the loop.

If we want to be serious about envisioning a future in which all our cars drive themselves, we have to ask what would happen if the entire fleet of US vehicles were driven by the kind of software Tesla and its peers are currently testing.

Clearly, the number of incidents would rise along with the number of vehicles. It would also rise significantly as the systems were more regularly challenged by difficult driving conditions (bad weather, more erratic pedestrians, etc.), and it would likely jump radically if we took humans out of the loop altogether.

Current Level 2 self-driving is Easy Mode; the real world is Expert Mode, and it looks like we aren’t even close to ready for that.

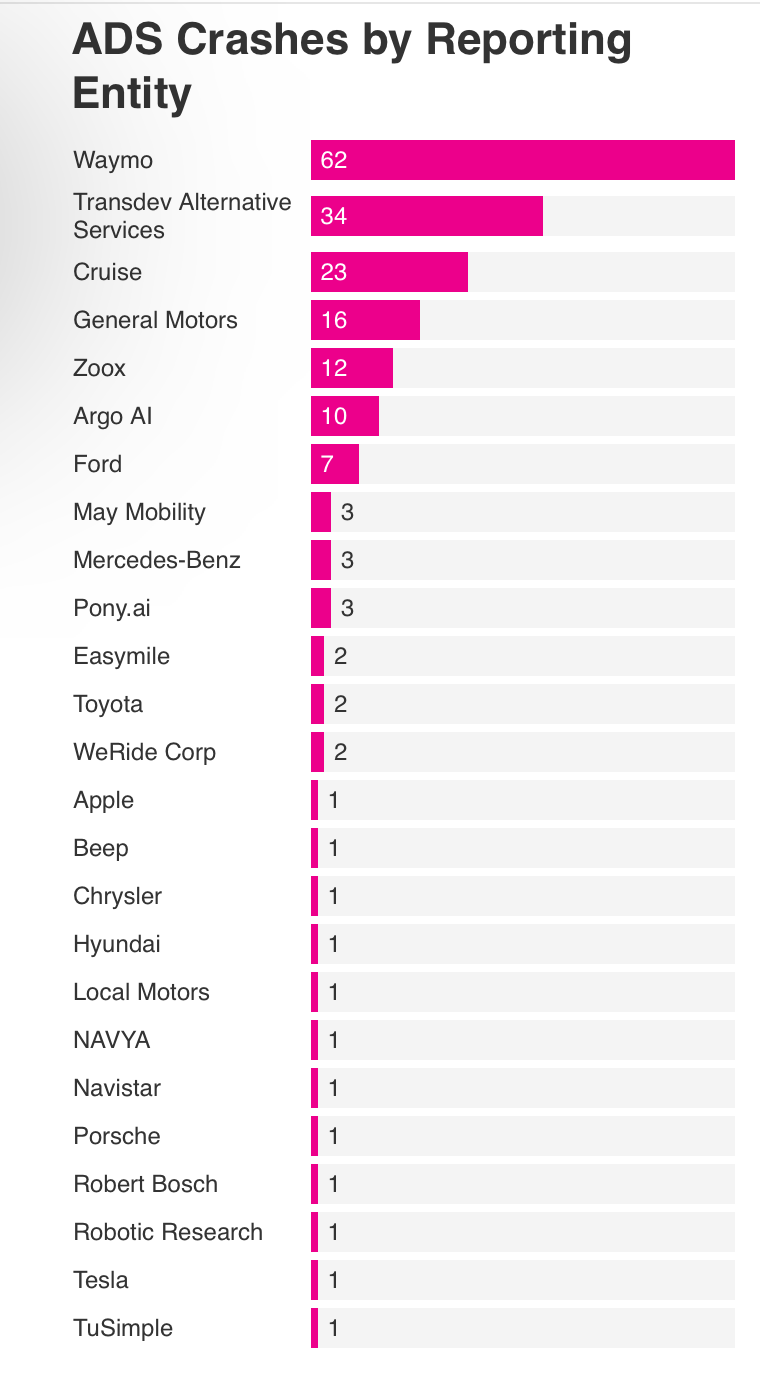

In fact, in some ways the worst news for Tesla may be a graph it isn’t even in: the separate graph above, covering teams that actually are trying to compete on Level 5 (ADS) driving.

Why should Tesla, and everybody else who might be working toward Level 5 full self-driving, worry about this one?

Because it shows that the people who really claim to be working on full self-driving (which Tesla waffles on¹) are having plenty of problems of their own. Waymo, which many insiders consider closest, appears to be having a little over one incident a week, while driving far fewer miles than Tesla, under much more limited circumstances, in just a tiny handful of locations.

My informal read (still lacking a tremendous amount of information that I as a scientist would really like to see) is that neither Tesla nor Waymo is anywhere close to something we could use every day, all the time, wherever we wanted to go. And if those two aren’t close, given their massive level of investment in the technology, I seriously doubt anyone else is close either.

As I emphasized in a $100,000 bet that I recently offered Elon, the core issue is outliers: the endless list of unusual circumstances, like a person carrying a stop sign, that aren’t in anybody’s database and are obvious to humans, yet baffle current AI.

As far as I can tell, nobody has a real solution to the outlier problem of coping with unusual circumstances; without one, full self-driving will remain a pipe dream.

Indeed, the NHTSA report data show that even some pretty ordinary circumstances, like avoiding stopped vehicles, are continuing to prove problematic.

Level 5 has to handle it all: everyday stopped vehicles, weird outliers, and so forth. We’re just not there.

And Level 2 driver assistance has some unique problems of its own. For example, we lack data on whether drivers use such systems as directed, and we need vigorous mechanisms to make sure drivers don’t tune out. Problems still abound.

§

Does that mean that society should give up on building driverless cars? Absolutely not. In the long run, they will save lives, and they will give many people, such as the blind, the elderly, and the disabled, far more autonomy over their own lives.

But our experiments in building them need to be strictly regulated, especially when nonconsenting human beings become unwilling participants. And our requirements on data transparency need to be vastly stricter.

More than that, we just shouldn’t expect fully autonomous (Level 5) driverless cars, safely taking us from any old point A to any old point B, any time soon.

¹ Tesla waffles on what it is doing. It calls its products “Autopilot” and “Full Self Driving”, but it tells the state of California that it doesn’t need to supply self-driving intervention rates because its software is actually only Level 2. That bothers me. A lot.

Customers are led by the product names to believe that the cars are far more capable than they really are, which may make people inattentive, cause accidents, and in some cases lead to deaths.
