Casey (Newton) Strikes Out

His continued skepticism of AI skepticism continues to miss the mark

There will be no joy in Mudville today. Hard Fork’s Casey Newton has struck out.

In particular, Newton has replied to me and others in a paywalled rejoinder and Bluesky post below, defending his initial glib and misleading characterization of the field of AI skepticism with ... yet another glib and wildly misleading characterization:

Aside from being needlessly disrespectful, this falls wide of the mark for many reasons.

Few AI skeptics would deny that there WERE, in the era of 2020-2022, rapid improvements. My whole point about diminishing returns is that current gains are smaller than those initial gains. Never did any of us say there were no gains at all.

The question, which Newton sidesteps, is whether improvements continue at the same pace. (And of course we all acknowledge that GenAI can be used for coding and brainstorming; the question is always whether the constantly-extolled benefits outweigh the costs.)

In these paragraphs, Newton also completely sidesteps the extent to which he misrepresented virtually the entire field (AI skepticism) that he intended to criticize.

And it is simply a lie to say that few of us are interested in whether scaling laws might get us to superintelligence. That question has been a driving question, maybe the driving question here, since the very first post in this Substack, The New Science of Alt Intelligence. The person who is apparently being “intellectually dishonest” (to use Casey’s invidious phrasing) here is Casey. Ongweso (quoted below) says this quite directly. I find it hard to disagree.

The paragraph on bloggers and financial analyses, likely aimed at Ed Zitron, and perhaps myself, is just as empty. What exactly is staring these unnamed people in the face? The lack of profits? The rapidly growing competition? The poor results with OpenAI-powered Copilot? The rapidly increasing costs that endless scaling engenders? Oh wait, those are the unaddressed facts Zitron and I have emphasized that are staring Newton in the face. The 2024 Nobelist Daron Acemoglu’s views on the matter would also seem to be ignored.

Newton is also conveniently ignoring an absolutely fundamental distinction, between an early period of reliable, rapid progress from 2019-2022 (when training for GPT-4 was completed), and a more recent regime in which, by many measures, progress has (reportedly) slowed. Those of us who are not in the thrall of hype are free to look at the evidence.

That evidence comes in many forms.

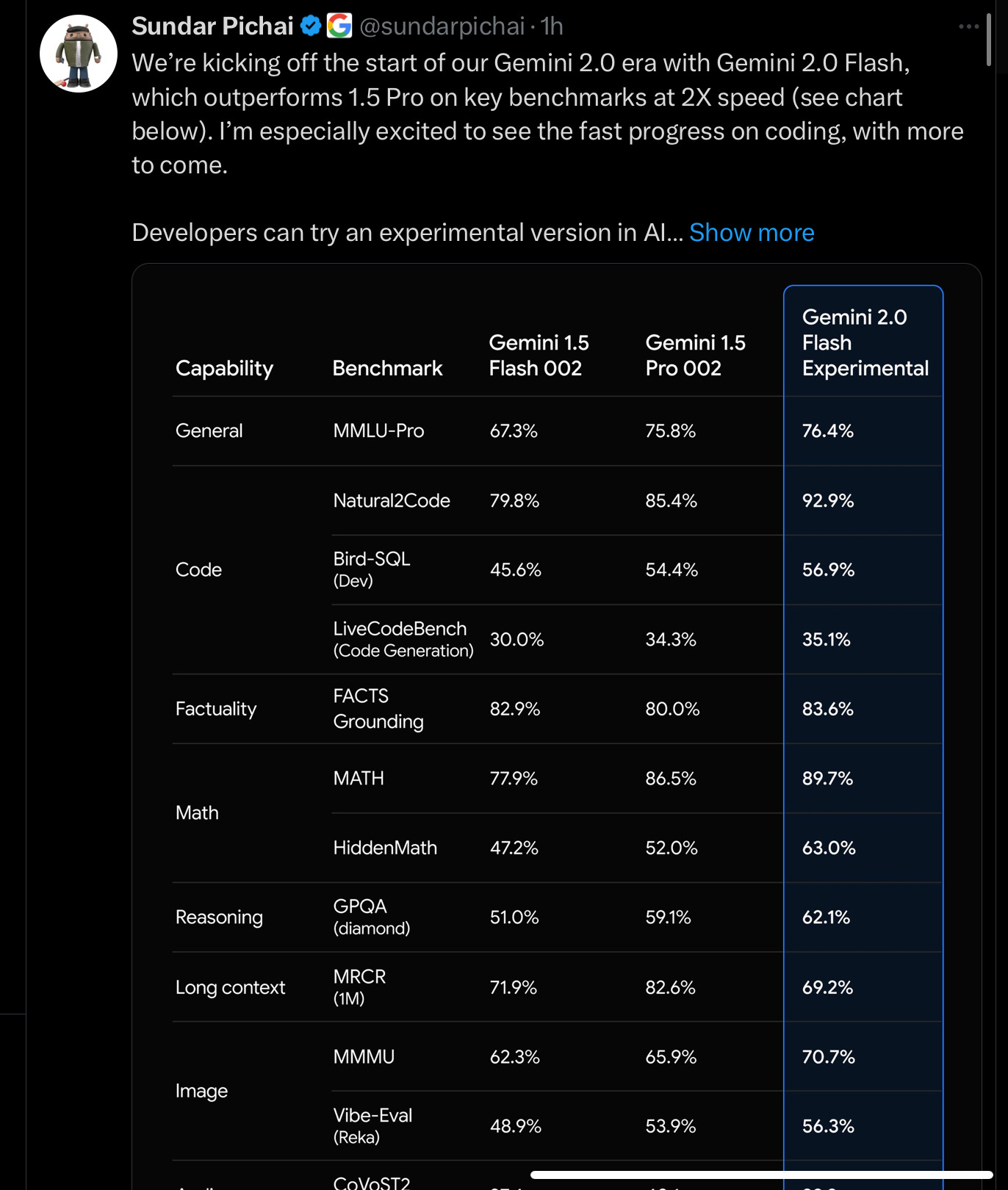

18 months of results that look more incremental than exponential. Here’s the latest, which I saw earlier today, showing that Gemini 2 is only modestly better than Gemini 1.5. A tiny bit better, but once again no quantum leap. (No, it’s not just because benchmarks are “saturating” with correct performance; the leap from 34.3 to 35.1 on LiveBench is hardly a dramatic improvement, and of a piece with the others.)

Many reports over the last six weeks from prominent figures such as Nadella, Sutskever, and Andreessen, all with direct access to data that neither Newton nor I have, have converged on the fact that the field is reaching a point of diminishing returns. Nadella and Pichai both said we will likely need new breakthroughs. Diminishing returns doesn’t mean zero improvement; it means that the “laws” of scaling no longer hold, and that the ROI on adding more data and compute is no longer predictable in the way that it used to be, hence the need for new approaches. It’s intellectually dishonest to ignore all these recent and relevant reports.

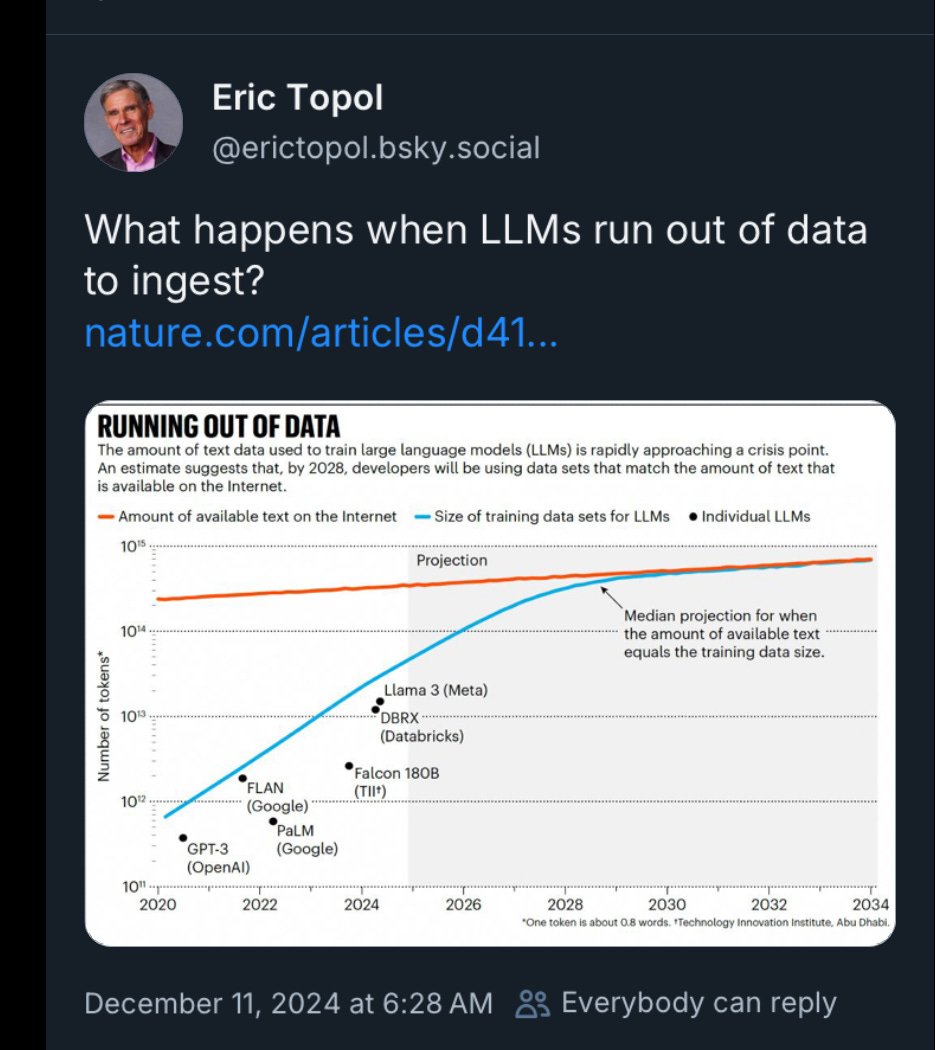

There is also growing evidence that we just don’t have enough data to feed the beast. Some recent evidence that I saw on that point, from this very morning:

§

You can imagine that I am quite disappointed in Newton’s initial misrepresentations, for which he has not apologized, and his new and completely unwarranted accusations of intellectual dishonesty.

But please don’t take my word for it; obviously I have a horse in the race. Here’s a sampling of public commentary, from people that saw Newton’s original essay and (in most or all cases) my own reply:

In a Substack post called Weighing in on Casey Newton's "AI is fake and sucks" debate, George Washington journalism Professor David Karpf wrote:

Casey wrote a follow-up last night. He… still thinks the glib framing is totally fair, and that these guys are kidding themselves.

I am generally a Casey Newton fan. His reporting is generally excellent. He is well-sourced and tenacious. He has developed a solid bullshit-meter when dealing with crypto folks and Musk acolytes. His Hard Fork podcast is great.

But I think he’s missing the point here. And there’s some real depth to what he’s missing.

In a taxonomy of what AI skeptics actually believe (and how diverse their actual views are) educator Benjamin Riley writes:

Last week Casey Newton, who writes about technology for Platformer and co-hosts the “Hard Fork” technology podcast, wrote about the emerging movement of AI Skepticism. Newton’s take: AI Skepticism has two main “camps,” the “AI is real and dangerous” camp versus “AI is fake and it sucks” camp. The first group, which Newton puts himself in, believes that AI is going to transform life as we know it, bestow great benefits as well as harms, and may soon lead to artificial general intelligence. In contrast, Newton contends, the fake-and-sucks group is so fixated on the current limitations of AI, they fail to appreciate the advances of this technology and its many benefits, benefits he believes will only continue to grow.

Oh, and Newton blames Gary Marcus for inspiring these nattering nabobs of AI negativity.

The basic problem with this essay is that nearly everything about it is, well, wrong. David Karpf skewers it here, Edward Ongweso Jr. whacks it here, and Gary Marcus fires back here. My take: Newton is wrong both on the Who of AI Skepticism, in terms of who is participating in this nascent intellectual movement, and the What, in terms of what people within this movement actually believe. I appreciate that Newton covers technology broadly, not just AI, and thus is unfamiliar with the nuances of AI Skepticism as a movement. But in this instance he didn’t just oversimplify things, he failed to grasp its core essence.

Tech writer Edward Ongweso, Jr. absolutely savages Newton and his views on AI skepticism, in a long, detailed takedown that opens thusly:

Casey Newton’s latest essay on why we should be skeptical of artificial intelligence skeptics/critics is one of the more lazy and intellectually dishonest ones I’ve read in a long while.

The core thrust of Newton’s piece is that the entire range of AI discourse boils down to two groups: critics external to firms and organizations directly working on or studying AI (they believe “AI is fake and sucks”) and internal critics who understand “AI is real and dangerous.”

It’s not clear why anyone should entertain Newton’s false dichotomy, especially if they spend more than a second thinking about this.

Among the many other damning points in Ongweso’s essay is the superficiality of Newton’s analysis of the advantages of GenAI:

[It] seems like Newton’s insistence on AI being “real” comes at the cost of anything resembling a material analysis. Does Newton’s framework offer any insight into why so many people are using generative AI products, into what workplaces are rolling them out and why, into what firms are utilizing them and why? No. Does it offer any insight into what the aforementioned quarter-trillion dollars is being spent on and why? No. Does his focus on whether critics believe AI is “real” or “fake” tell us anything about its resource intensity, technical limitations, potential applications, or harmful impacts? No. Thus far, we are just being sold something indistinguishable from corporate copy.

The brilliant AI researcher Abeba Birhane wrote a long thread on Bluesky, concluding that

what Casey does is build a strawman only to spend an entire article attacking it

An old proverb tells us “If three people at a party tell you you are drunk, lie down”.

Casey refuses to read the memo.

§

But let’s take a step back. The point is not just that Newton is wrong or lazy or lacking in objectivity. It’s that the optimists for whom he is cheerleading are frequently off the mark. We still don’t have driverless cars in 97% of the world after a decade’s promises; hallucinations haven’t gone away. Home humanoid robots are nowhere in sight.

Optimists never make predictions about specific failure modes, and never say they’re sorry when their predictions are wrong. Cruise put $9 billion into driverless cars, presumably based on optimism, and just yesterday shut its entire robotaxi operation down. OpenAI has yet to make a profit, and doesn’t anticipate making one before 2029.

All the while, people like Microsoft’s CTO in July are always telling us returns aren’t diminishing. (In no way did his statements anticipate what his own boss told us 5 months later.)

Journalists like Casey weren’t challenging these statements; skeptics were.

§

Part of the value in skepticism is correctly anticipating road blocks, and suggesting alternative directions (as I have for decades with neurosymbolic AI, which is at last coming into vogue, as noted in Fortune earlier this week).

On the road block side:

• In 1998 I warned that neural networks would have trouble dealing with abstract reasoning and outlier cases, a finding that has held steady for the last 26 years, including the recent Apple study that was widely discussed.

• In 2016 I warned that driverless cars would endlessly face edge cases; it’s still a serious problem. As yet there is no generalizable solution.

• In 2022 I warned that there would be chatbot related deaths such as suicides. This began to happen in 2023 and continued in 2024.

• In 2022 I warned that scaling would not hold, and that we would need alternative approaches; by most accounts, the returns from scaling have begun to diminish, and Nadella and Pichai both affirmed the need for new breakthroughs. A massive investment of hundreds of billions is unlikely to pay off.

• In February of this year, the day after Sora was released, I warned that Sora would have problems with things like object permanence. This has held true of all models, including the new release of Sora this week.

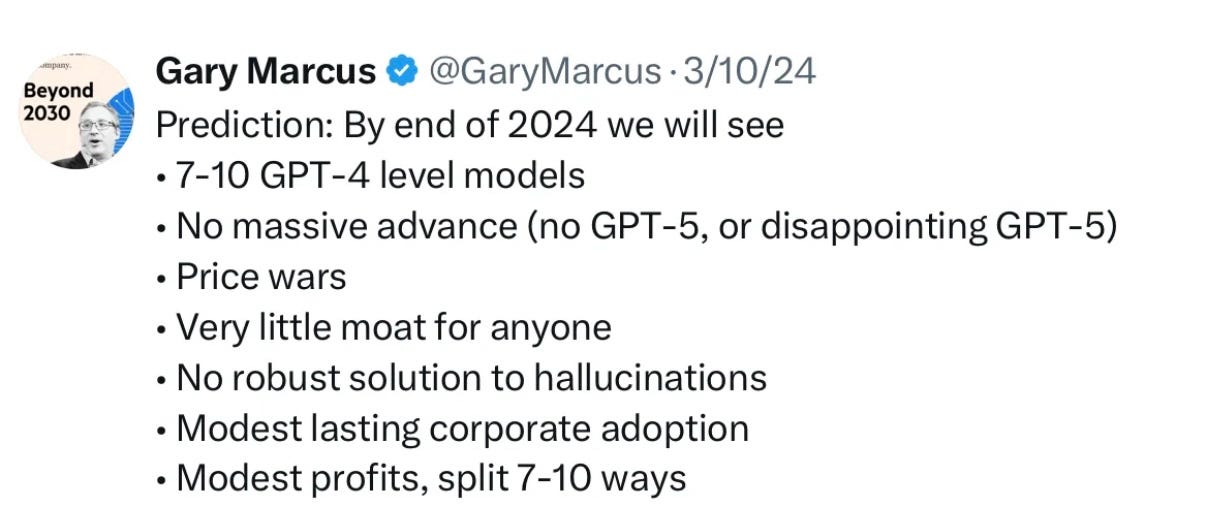

• In March of this year, I warned that the economics would be problematic, in multiple ways:

All 7 of these economic predictions have held strong.

(I’m not always right; I was wrong, for example, about Winograd Schemas, which were beaten more quickly than I would have imagined, as some of us discuss in this 2023 technical article.)

Fan boys like Newton don’t counter these kinds of predictions with anything specific other than the bland and uncontroversial notion that some things (never specified) will improve. I don’t doubt this, when stated so vaguely, but you can’t improve the engineering if you can’t identify the problems and weak points, such that you can address them. Any engineer knows that.

§

Part of the issue may be that journalists try too hard to be close to industry without sufficient distance. As Ed Zitron illustrates it, consistent with Ongweso, Jr.’s concerns noted above that Newton might be straying too closely to corporate cheerleading:

Then there are people like Casey Newton, who happily parrot whatever Mark Zuckerberg wants them to under the auspices of “objectivity,” dutifully writing down things like “we will effectively transition from people seeing us as primarily being a social media company to being a metaverse company” and claiming that “no one company will run the metaverse — it will be an “embodied internet,” a meaningless pile of nonsense, marketing collateral written down in service of a billionaire as a means of retaining access.

I will say one positive thing. Newton does generally seem concerned about the risks of superintelligence, and I think that’s legitimate. But ignoring all the evidence that suggests that we won’t be there in the very near term does a disservice to his pretense towards objectivity. Our best defense against the possible dangers of superintelligence must begin with a realistic appraisal of where we are and how much time we have.

§

After my first response, a subscriber, one of my friends (who happens to be a well-known entertainer with a strong background in journalism), wrote to me and said my critique was “f*ing brilliant”, and in his next message saluted me for having “balls of steel, my friend.”

If Casey had balls, he would have me (and how about Birhane and Ongweso, too) on Hard Fork to discuss all this interactively, instead of hiding behind Bluesky tweets and paywalled rejoinders.

Gary Marcus longs for the days of academic fair play, in which strawmanning people was considered an intellectual crime, and people participated in open debates in search of truth.

Seriously, thank you. You, the Eds, Jathan Sadowski, and Paris Marx are doing God's work out there.

I’m still surprised no one has taken a second look at Hard Fork’s 5/12/23 episode trying out the now defunct GM Cruise self-driving car.

Starting at 28 minutes, the self-driving car is driving erratically and almost gets into an accident; then you hear another driver pull up shouting “Did you see what happened!? You should probably want to report that thing!” Of course, in classic Casey Newton fashion, he makes a sarcastic comment, saying “We’re going to bring this thing to justice,” followed by more downplaying of the incident.

Later in the episode they interview the Cruise CEO and never mention what happened, giving the softest of soft ball interviews. At the time, I was so surprised, especially with the bad reputation Cruise’s self-driving tech had at the time.

Then fast forward 5 months later where Cruise loses its license for another malfunction (we all know the story) and now Cruise is completely shut down by GM.

Casey has no business reporting on AI and it’s sad how many people take him seriously. Kevin is slightly better but not much.