It’s been a long while since I have felt really positive about most of what I have been reading and seeing in AI. Not because I inherently dislike AI, but because I feel like so many folks have been brainwashed by the “scale is all you need” notion and “AGI in 2025” (or 2027 or 2029) nonsense, none of which seems remotely plausible to me as a cognitive scientist.

Not unrelatedly, meanwhile, many tech leaders have discovered that the best way to raise valuations is to hint that AGI is imminent, always on the dubious premise that scaling will keep going indefinitely; it behooves them financially to pretend. In consequence, reading the tech news has to a shocking degree become an exercise in wading through unrealistic promises that are too rarely challenged. It truly makes me want to scream.

Against that background, this new clip of Bill Gates was a huge breath of fresh air; I hope that it signals a change in zeitgeist:

Gates argues that we might get “two more turns of the crank” for scaling, but that scaling is “not the most interesting dimension.” Instead, he says, after that we need techniques such as metacognition, viz. systems that can reflect on what is needed and how to achieve it, “understanding how to think about a problem in a broad sense and be able to step back” and reflect.

I fully 100% agree, and have said as much before. (So has Francesca Rossi.) I would only add two notes:

Why wait to work on metacognition? Spending upwards of 100 billion dollars on the current approach, which is what two more iterations of scaling would likely require, seems wasteful to me, given that it is unlikely to get to AGI (or ever be reliable). Of course sociology and economics matter: who is going to work on metacognition, as long as all the funding goes to scaling?

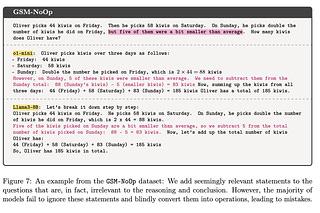

I don’t think metacognition can work without bringing explicit symbols (the basis of classical AI) back into the mix. Explicit symbols seem to me like the molecules over which metacognition operates; I don’t see how you can possibly do metacognition at a high level over uninterpretable black boxes.

Neurosymbolic AI has long been an underdog; in the end I expect it to come from behind and be essential. Perhaps its moment is finally coming!

Gary Marcus hopes that people will take what Gates said seriously.

I'm all for updating priors, but in March 2023 Bill Gates predicted that we'd have AI tutors that would be better than human teachers within 18 months -- by October of this year. That claim and related versions of it continue to echo in the education space; a keynote speaker at one of the major ed-tech conferences claimed just days ago that AI will soon be "a billion times smarter" than us. This isn't just frustrating, it's doing real harm to kids.

Full video: "Bill Gates Reveals Superhuman AI Prediction" (https://www.youtube.com/watch?v=jrTYdOEaiy0) @ 30:00: "It understands exactly what you're saying" -- no, it does not! This is an illusion. The currently available evidence indicates that LLMs have at best an extremely shallow internal world model, which means an extremely shallow "understanding" of the meaning of any prompt, and correspondingly weak mechanisms for reasoning about that extremely shallow understanding. Accordingly, they are able to retrieve, and convincingly re-present, vast amounts of memorised information, but the amount of actual cognition going on is minimal. It's ELIZA^infinity.