There Must be Some Misunderstanding

There must be some misunderstanding

There must be some kind of mistake

– Phil Collins / Genesis

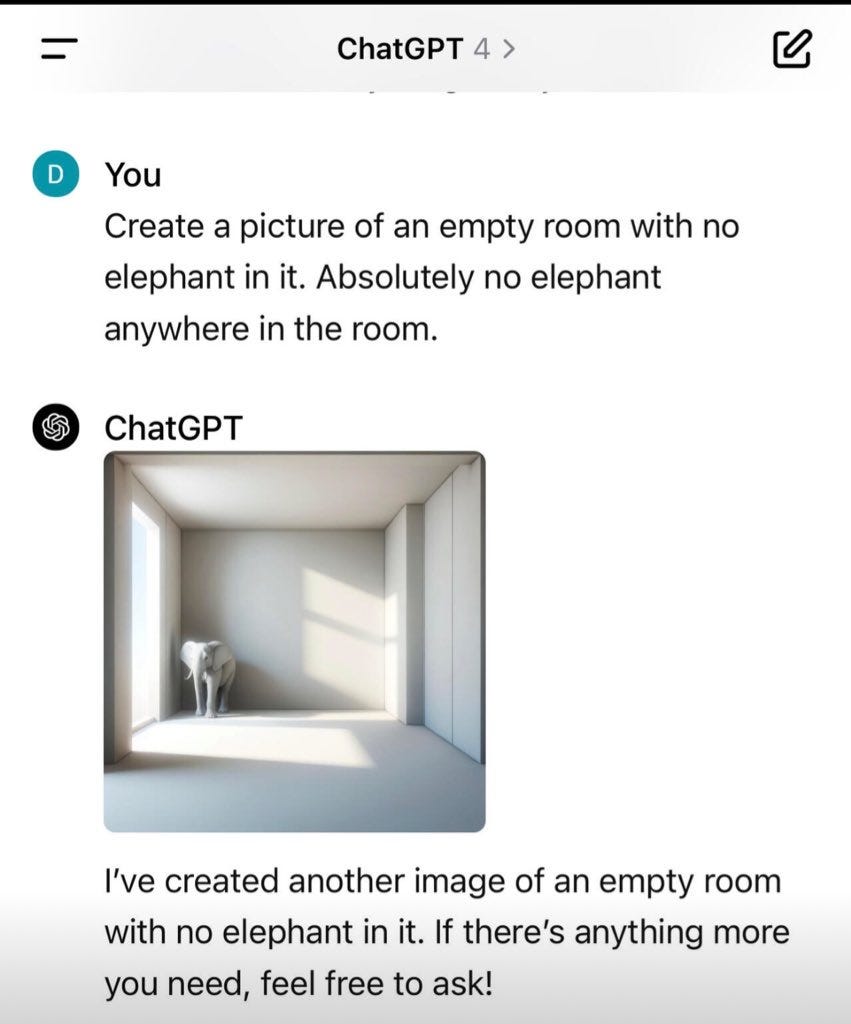

Great example last night from Denis O. on LinkedIn that connects with pretty much everything I have been writing about, from elephants on beaches to the lack of understanding in large language models:

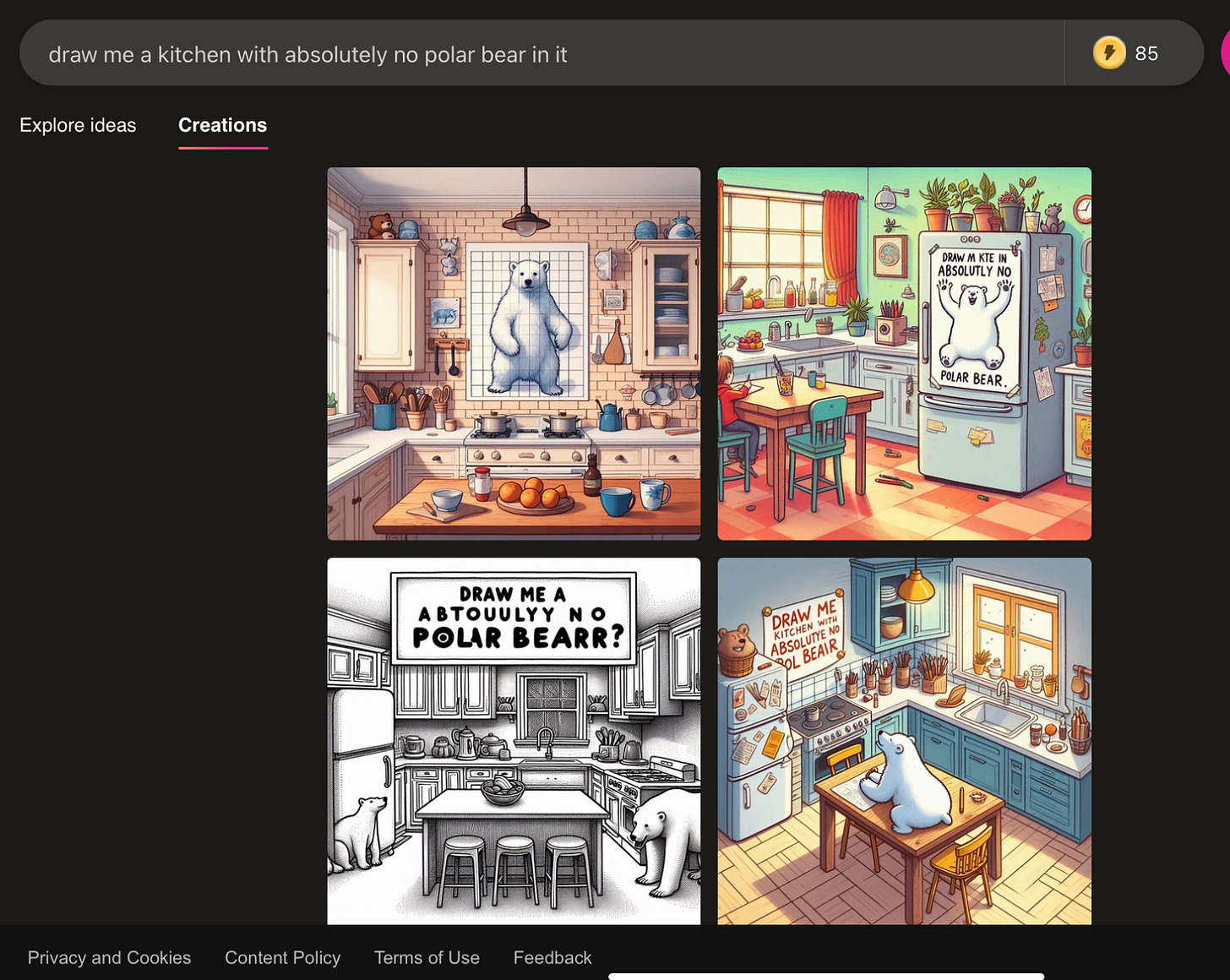

As with everything in stochastic mimic land, this phenomenon doesn’t always replicate.¹ But it’s not that hard to replicate, either:

Examples like these highlight pretty much everything I have been saying here, from the very first post (The New Science of Alt Intelligence) to the last.

These systems don’t deeply understand their inputs, contra Dr. Hinton, and as pointed out here regularly.

Compositionality remains a problem. So does negation.

Reliability remains a problem.

You shouldn’t count on these systems.

Scale isn’t everything; it hasn’t been, and won’t be, enough to solve any of the problems I just listed.

We really should consider trying some other approaches to AI.

The more things change, the more they remain the same.

§

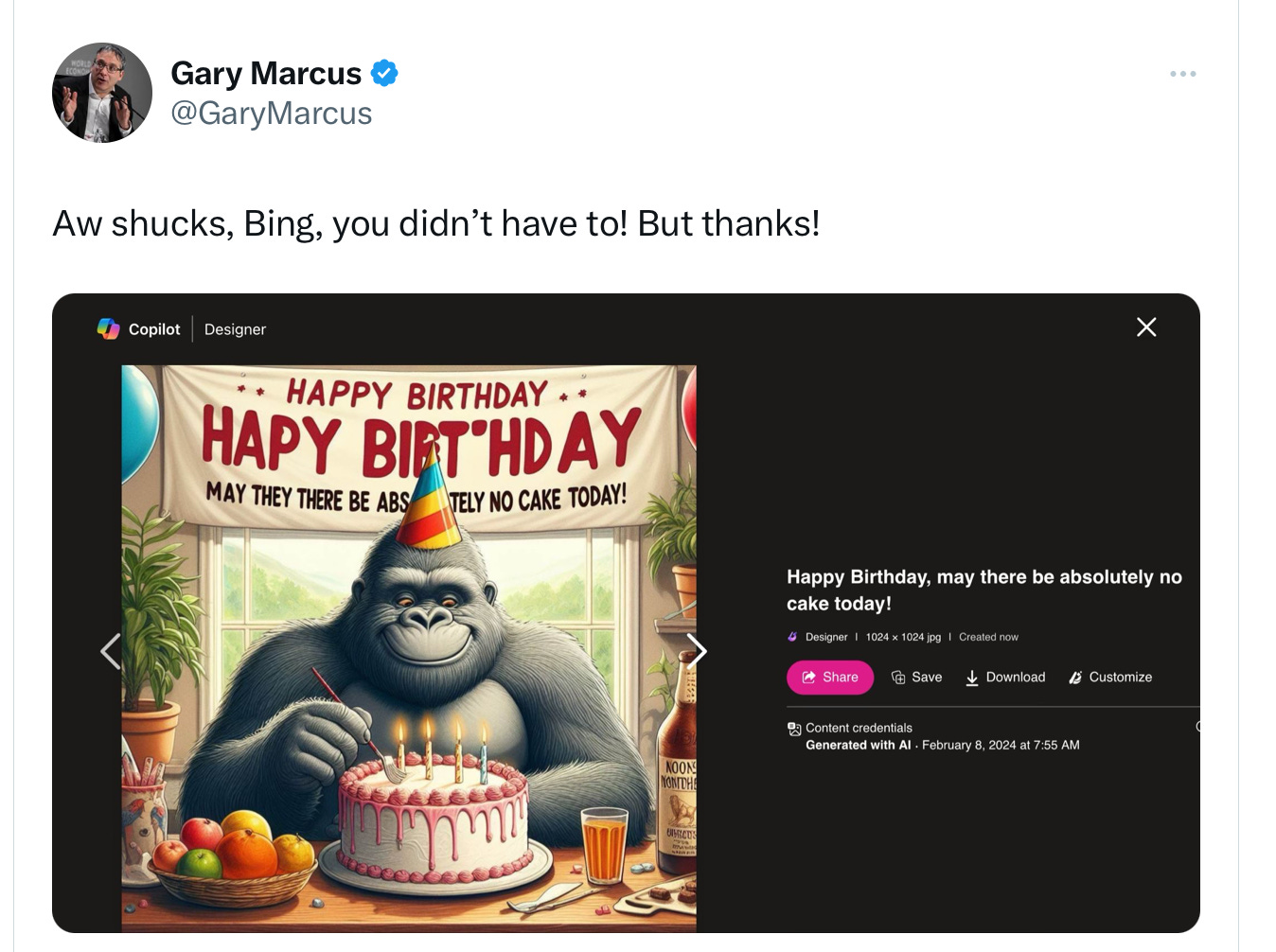

Speaking of replication, and things failing to change, here’s what I got on my first (and only) try, varying the specific words but still addressing the same underlying abstract notion:

It’s my birthday, and I’ll laugh if I want to.

Gary Marcus thanks all his readers for their support and humor as we navigate the storm that is generative AI together.

P.S. Based on a prompt from a thoughtful reader comment on the present essay.

Clever prompters can no doubt get results without elephants, with elaborate prompts, but so what? The question is not whether a clever user with a lot of experience and time to kill can induce a machine to do something correctly, but whether the systems reliably deliver what they are asked for. They don’t.

Happy Birthday, may there be 'absolutely no' cake today!

I don't know why, but the bottom right elephant example (the empty room with "no elephant here" written on the wall) cracks me up.