Review of past predictions

Let’s start with 2024. By and large, with some caveats, the predictions for 2024 made here in this Substack have held strong.

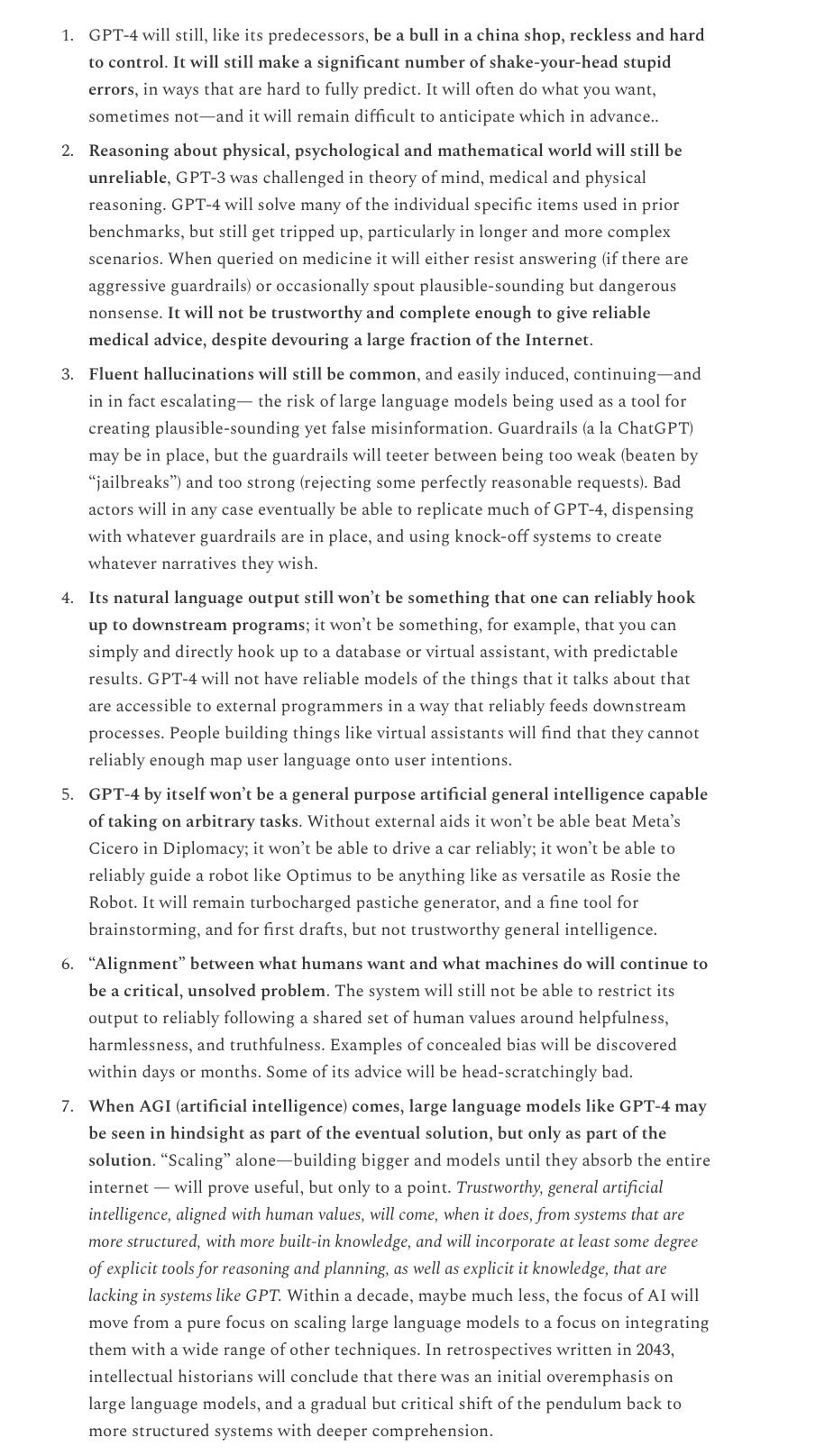

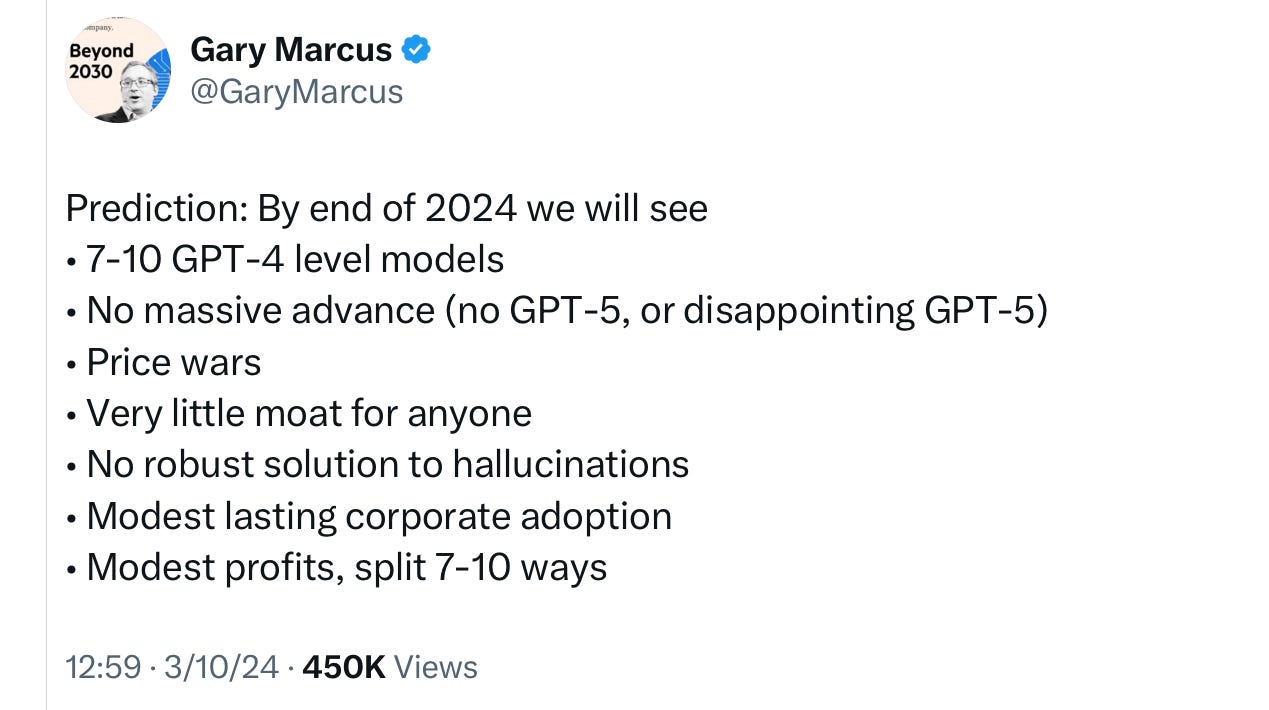

Perhaps the core set of predictions were these, summarized in this March 10, 2024 tweet, and a series of Substack essays in 2023 and 2024 that elaborated them.

With a possible asterisk on OpenAI’s o3, which was announced in December but not released or exposed to broad scrutiny, all seven were pretty much right on the money. We are still basically surrounded by GPT-4 level models with incremental changes, and nothing that OpenAI itself thinks is worthy of the GPT-5 label. There are many such models now; there is a price war. There is very little moat. There is still no robust solution to hallucinations. Corporate adoption is far more limited than most people expected, and total profits across all companies (except of course hardware companies like NVidia, which profit from chips rather than models) have been modest at best. Most companies involved have thus far lost money. At least three have been quasi-acquired with relatively little profit for their investors. The top AI moment of 2024 was not the much-anticipated release of GPT-5, which never arrived, even though fans kept predicting it all year long.

Other

My predictions, going back to 2022, that pure scaling of LLMs would eventually start to run out appear to have been confirmed in November and December, based on comments from many industry insiders.

The argument that I made in 2022, that “scaling laws” are not physical laws but only generalizations with a finite life span, has become widespread in recent months.

The notion that some form of AI might be “hitting a wall”, which I coined in 2022, also became widespread, e.g., in places like CNN, The Verge, and the Wall Street Journal.

The idea of “diminishing returns” from scaling, and the phrase itself as I used it here, have also become common.

The specific weak spots I raised in 2022, and going back to my 2001 book The Algebraic Mind (compositionality, factuality, reasoning, etc.), persist. AI will presumably solve all of these someday, of course, but current techniques have thus far not been adequate.

The problem of distribution shift that I emphasized in 1998, 1999, and 2001 and ever since was echoed multiple times in recent work, including the prominent Apple paper on reasoning.

My December 2022 prediction that we would start to see chatbot-associated deaths was unfortunately confirmed in 2023, and sadly there appears to have been another incident in 2024.

AI-generated misinformation became increasingly widespread, as I warned in December 2022, but the effects have, thus far, been less than I feared. I continue to be quite concerned, and think vigilance continues to be required.

My February 2023 warning that GenAI would corrupt the internet increasingly appears to have been correct.

My November 2022 prediction that LLMs would not immediately markedly improve commercial AI agents like Amazon Alexa continued to hold throughout 2024.

My prediction that 2024 would be seen as the year of AI disillusionment was overstated but not entirely off the mark, holding true for some folks but not all, and as such perhaps deserves partial credit. Many people are still enthusiastic, but there was certainly also lots of disappointment expressed in multiple places, such as “Early Adopters of Microsoft’s AI Bot Wonder if It’s Worth the Money” in the Wall Street Journal.

My 2023 prediction that OpenAI might someday be seen as the WeWork of AI has not been confirmed, but they are far from out of the woods. The company has not returned any profits, and is not expecting to do so until at least 2029, but has a burn rate in the billions, and still lacks a clear technical moat. Long-term security, especially at high valuations, is thus far from assured. Others such as the journalist Ed Zitron and the economist Brad DeLong are increasingly making similar arguments.

My predictions about the technical and economic limits of generative AI have largely been on target, but I was flat out wrong about investors. I was not sure that OpenAI would get another big funding round, let alone one at over $150 billion. Time will tell whether that recent round was a wise investment.

Immediately after the February announcement of Sora, I noted Sora’s troubles with physics and predicted that they wouldn’t go away. They haven’t.

My seven December 2022 predictions about GPT-4 largely held true (number seven still pending) for every model that was released in 2024.

Predictions made here on Christmas Day, 2022

25 Predictions for 2025

As against Elon Musk, who said in April 2024, with respect to the end of 2025, “My guess is that we'll have AI that is smarter than any one human probably around the end of next year [i.e., end of 2025]”, here are my own predictions for where we will be at the end of this year:

High confidence

We will not see artificial general intelligence this year, despite claims by Elon Musk to the contrary. (People will also continue to play games to weaken the definition or even try to define it in financial rather than scientific terms.)

No single system will solve more than 4 of the AI 2027 Marcus-Brundage tasks by the end of 2025. I wouldn’t be shocked if none were reliably solved by the end of the year.

Profits from AI models will continue to be modest or nonexistent (chip-making companies will continue to do well though, in supplying hardware to the companies that build the models; shovels will continue to sell well throughout the gold rush.)

The US will continue to have very little regulation protecting its consumers from the risks of generative AI. When it comes to regulation, much of the world will increasingly look to Europe for guidance.

AI Safety Institutes will also offer guidance, but have little legal authority to stop truly dangerous models should they arise.

The lack of reliability will continue to haunt generative AI.

Hallucinations (which should really be called confabulations) will continue to haunt generative AI.

Reasoning flubs will continue to haunt generative AI.

AI “Agents” will be endlessly hyped throughout 2025 but far from reliable, except possibly in very narrow use cases.

Humanoid robotics will see a lot of hype, but nobody will release anything remotely as capable as Rosie the Robot. Motor control may be impressive, but situational awareness and cognitive flexibility will remain poor. (Rodney Brooks continues to make the same prediction.)

OpenAI will continue to preview products months and perhaps years before they are solid and widely available at an accessible price. (For example, Sora was previewed in February, and only rolled out in December, with restrictions on usage; the AI tutor demoed by Sal Khan in May 2024 is still not generally available; o3 has been previewed but not released, and will likely be quite expensive.)

Few if any radiologists will be replaced by AI (contra Hinton’s infamous 2016 prediction).

Truly driverless cars, in which no human is required to attend to traffic, will continue to see usage limited to a modest number of cities, mainly in the West, mainly in good weather. Human drivers will still make up a large part of the economy. (Again, see also Rodney Brooks.)

Copyright lawsuits over generative AI will continue throughout the year.

Power consumption will rise and continue to be a major problem, but few generative AI companies will be transparent about their usage.

Less than 10% of the work force will be replaced by AI. Probably less than 5%. Commercial artists and voiceover actors have perhaps been the hardest hit so far. (Of course many jobs will be modified, as people begin to use new tools.)

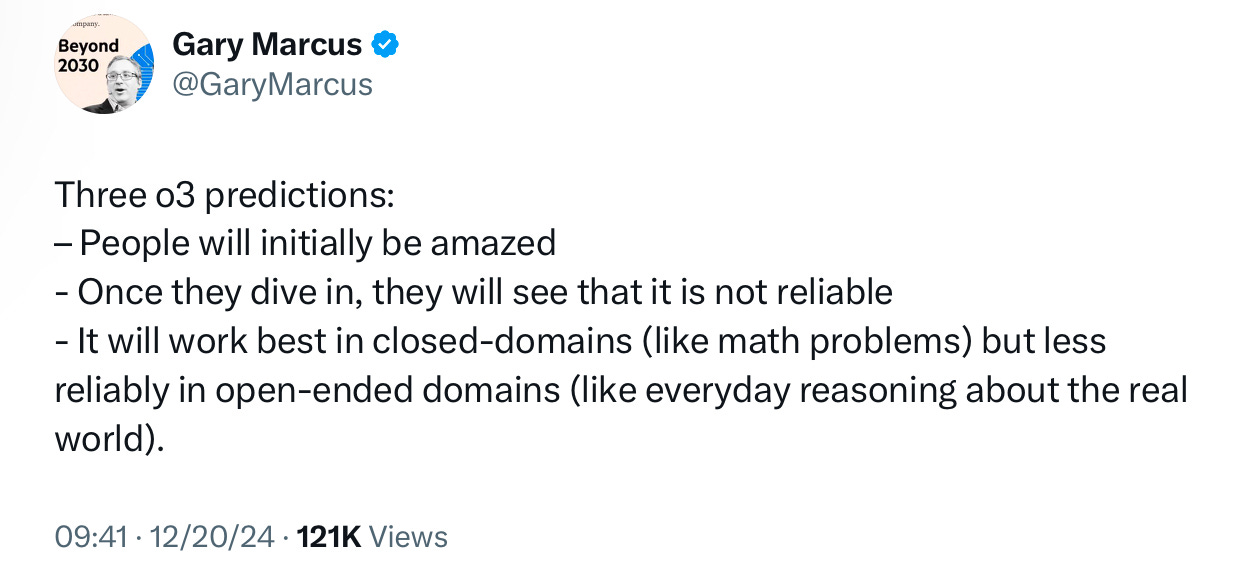

I stand by all three predictions I made an hour before o3 was announced:

Medium confidence

Technical moat will continue to be elusive. Instead, there will be more convergence on broadly similar models, across both US and China; some systems in Europe will catch up to roughly the same place.

Few companies (and even fewer consumers) will adopt o3 at wide scale because of concerns about price and robustness relative to that price.

Companies will continue to experiment with AI, but adoption of production-grade systems scaled out in the real world will continue to be tentative.

2025 could well be the year in which valuations for major AI companies start to fall. (Though, famously, “the market can remain irrational longer than you can remain solvent.”)

Sora will continue to have trouble with physics. (Google’s Veo 2 seems to be better but I have not been able to experiment with it, and suspect that changes of state and the persistence of objects will still cause problems; a separate not-yet-fully released hybrid system called Genesis that works on different principles looks potentially interesting.)

Neurosymbolic AI will become much more prominent.

Low confidence, but worth discussing

We may well see a large-scale cyberattack in which Generative AI plays an important causal role, perhaps in one of the four ways discussed in a short essay of mine that will appear shortly in Politico.

There could continue to be no “GPT-5 level” model (meaning a huge, across the board quantum leap forward as judged by community consensus) throughout 2025. Instead we may see models like o1 that are quite good at many tasks for which high-quality synthetic data can be created, but in other domains only incrementally better than GPT-4.

For those who want to play along at home, feel free to drop a comment below.

Moreover, Metaculus is in the process of setting up a webpage with my predictions [link to come], starting with yesterday’s bet with Miles Brundage, and AI Digest has some specific and interesting questions around forecasting 2025, focusing on a number of popular benchmarks and projections of future revenues.

Happy forecasting!

Gary Marcus wishes you a very happy new year, and is grateful for the support from the 50,000 of you who have subscribed.

As somebody who is worried by unemployment caused by generative AI, less than 5 to 10 percent unemployment would still be massive in just one year.

LLMs are text compression algos. They are very good at memorising & retrieving text related to the user input. They can even pass bar exams based on that - some misinterpret that as intelligence.

However, nothing (even scaling) can change a text memorisation algo into a symbolic reasoning or composability algo, both of which are necessary for progress towards AGI.

Until research moves on from LLMs, AGI will remain elusive.